48 Operations on functions

Block 1 introduced the idea of mathematical modeling: creating a representation of some aspect of the world out of mathematical “stuff.” The relevant “stuff” includes the concept of a function with its inputs and output, units and dimensions of quantities, frameworks such as the basic modeling functions and ways of combining functions via linear combination, composition, and multiplication.

Our emphasis in calculus has been and will continue to be functions. This contrasts with high-school algebra where the emphasis was on equations and manipulations such as the movement of quantities from one side of the equal sign to another.

It pays to think a little about what equations mean and what information they are intended to convey. Consider an equation like \[{\mathbf{\text{equation:}}}\ \ \ x^2 - 4 x + 3 = 0\] which you might see in a beginning algebra text. What does this equation mean?

A simple equation like \(3 + 4 = 7\) is a statement of fact: three plus four is indeed the same as seven. But \(x^2 - 4 x + 3 = 0\) is not a fact. The equality might be true or false, depending on what \(x\) happens to be. In an algebra course, the equation is intended to be an instruction to a person: \[\text{Given}\ x^2 - 4 x + 3 = 0, \ \ \text{find x.}\] or, equivalently, \[\text{Solve}\ x^2 - 4 x + 3 = 0\ \ \text{for}\ x.\] “Find \(x\)” or “Solve for \(x\)” direct you to determine which numerical values (if any) when substituted for \(x\) in the equation will produce a true statement.

“Solve for \(x\)” is an example of a mathematical task. We undertake such tasks to extract useful information from a mathematical object. For instance, textbook “word problems” involve two phases: i) a modeling phase where you translate a verbal description of a situation—often involving paddling canoes across a flowing river—into a matching mathematical form and ii) having constructed a suitable mathematical form, you apply some mathematical task to the form to reveal the answer you seek.

This chapter looks at a small list of mathematical tasks, calling them operations on functions. These operations, combined in various ways, enable you to extract relevant information from the functions you build in your models. A simple important part of this introduction is to give a name to each task. That way, confronted with a mathematical problem, you will be able to look down the short mental menu of opertions to decide which ones are applicable to your circumstance. Even better, once each operation has a name, you can tell a computer to do it for you.

Here are four common mathematical tasks that you’ve already encountered in Blocks 1 through 3 of this book:

- Given a function and specific values for the inputs, apply the function to the inputs to produce an output. This is also called evaluating a function on inputs.

- Given a function and the name of a with-respect-to input, construct a new function that is the derivative of the given function. The name for this task is to differentiate the function.

- Like (2), given a function and the name of a with-respect-to input, anti-differentiate the function.

- Given a function and an interval of the domain of that function, accumulate the function on that interval. This is named to integrate the function on the interval. (You may recognize that you can perform this task by breaking it down into task (3) and then applying task (1) twice to the result. That is, \(\int_a^b f(t) dt = F(b) - F(a)\).)

In this chapter, we focus on the following operations on functions that you may not yet have mastered.

- Given a function and an output value from the function, find values for an input (or inputs) which will generate that output value. This is the solving task. A closely related task is zero-finding, which is to find an input that will cause the function to produce the output zero.

- Given a function and an interval of the domain, find an input value that will produce an output value that is higher than would be produced for nearby inputs. As you might recognize, this is called finding an argmax. The problem of finding an argmin is the same kind of problem, and can be solved by finding the argmax of the negative of the function.

- Given a function and an input value, iterate the function to produce a new input that will be better than the original for some purpose.

These seven tasks allow you to perform the mathematical work of extracting useful information from a model. Human judgement and creativity is needed to construct the model. And judgement and experience is needed to figure out which tasks to perform and in what order. But carrying out the tasks does not require judgement, experience, or creativity. Performing the tasks requires only an algorithm and the tools to step through the algorithm. Computers are excellent for this; you just have to give them the function and whatever additional input is required (e.g. the name of a with-respect-to input), and then tell the computer which task it is to perform.

48.1 Task: Solve

Starting materials:

- a function \(f(x)\),

- a known output value \(v\), and

- a candidate for a suitable input value \(\color{brown}{x_0}\)

Ideal result from the algorithm: A new candidate \(\color{magenta}{x^\star}\) such that \(f(\color{magenta}{x^\star}) = v\) or, equivalently, that \[\left\|\strut f(\color{magenta}{x^\star}) - v \right\| = 0\ .\]

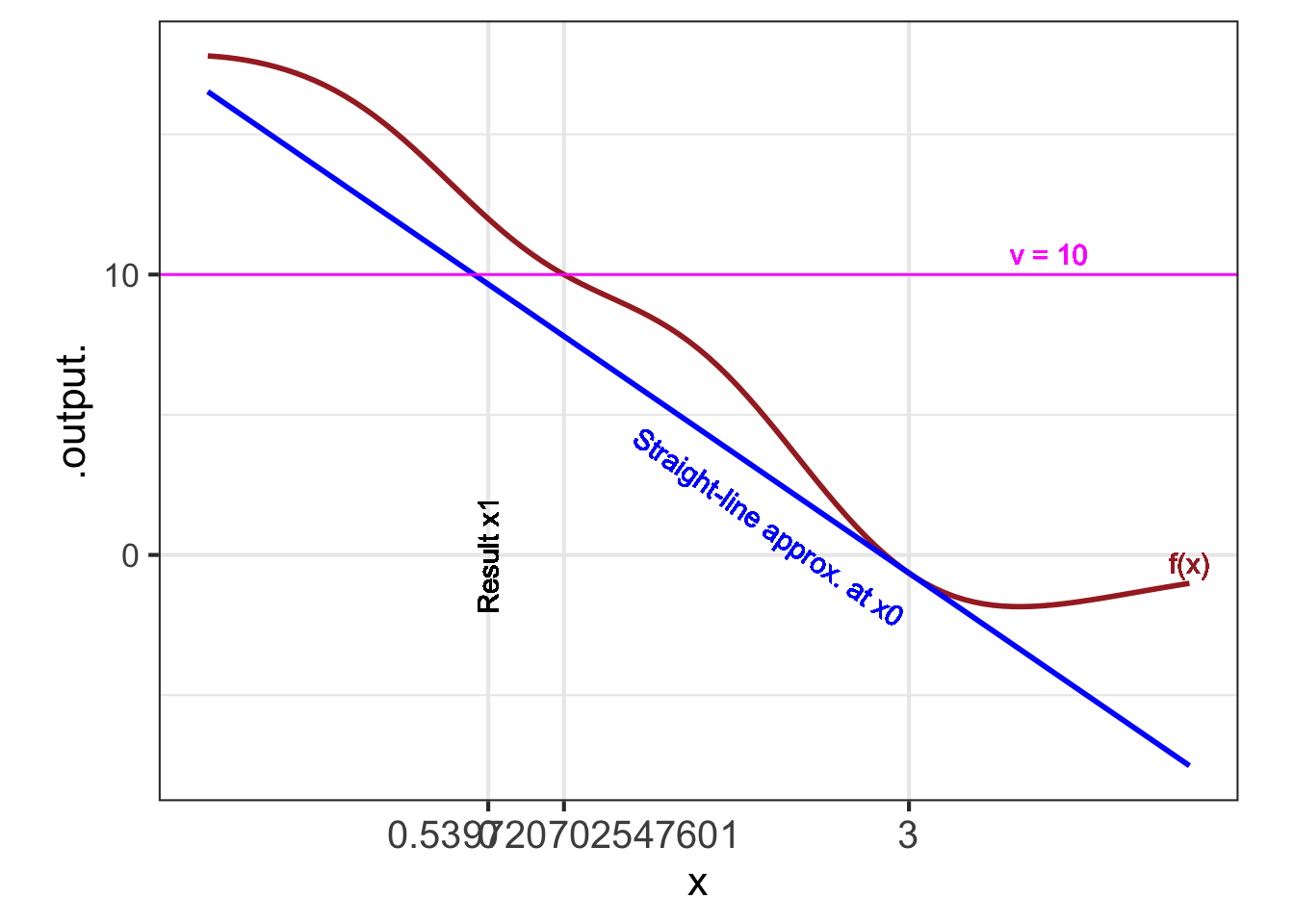

Realistic result from the algorithm: The new candidate \(\color{magenta}{x^\star}\) will be better than \(x_0\), that is, \[ \left\|\strut f(\color{magenta}{x^\star}) - v\right\|\ \ {\mathbf <}\ \ \left\|\strut f(\color{brown}{x_0}) - v\right\|\] One algorithm for the operation involves approximating the function \(f(x)\) with a straight-line function \(\widehat{f}(x) = a x + b\). For straight-line functions, the solution \(x^\star\) can be found by simple arithmetic:

\[a x^\star + b - v = 0 \ \ \implies \ \ \ x^\star = \frac{b-v}{a}\] You saw in Block 2 how to construct the straight-line approximation to a function \(f()\) in a region of interest near \(x_0\) by evaluating the function and its derivative at \(x_0\). In other words, \[\widehat{f}(x) \equiv f(x_0) + \partial_x f(x_0) \left[\strut x - x_0 \right]\ .\]

Because \(\widehat{f}(x)\) is a straight-line function, it is easy to find an input \(x_1\) that will generate exactly the desired output value \(v\). In other words, to solve \(\widehat{f}(x_1) = v\) for \(x_1\).

\[\begin{equation} x_1 = x_0 + \frac{v-f(x_0)}{\partial_x f(x_0)} \end{equation}\]

Although \(x_1\) is an exact solution to the approximate problem, all we can hope is that for nonlinear \(f(x)\), \(x_1\) will be an approximate solution to the actual problem. In particular, we want \(x_1\) to be a better guess than \(x_0\): \[\|f(x_1) - v\| \underbrace{\ \ <\ \ }_\text{We hope!} \|f(x_0) - v\|\]

This (hopefully) improved solution \(x_1\) can become the starting guess for a new round of improvement based on the straight-line approximation to \(f(x)\) around \(x_1\). The refinement of \(x_1\) will be calculated as \[\begin{equation} x_2 = x_1 + \frac{v-f(x_1)}{\partial_x f(x_1)} \end{equation}\]

Each round of improvement—that is, “iteration”—calculates a new value \(x_{i+1}\) from the previous \(x_i\). The improvement can be encapsulated as a function, which we will call solve_step(): \[\text{solve-step}(z) \equiv z + \frac{v-f(z)}{\partial_x f(z)}\ .\]

This particular form of solve_step() is called a Newton step. The idea is to take successive steps, each refining the previous approximation, to get closer and closer (hopefully!) to the actual answer \(x^\star\):

\[x_1 = \text{solve-step}(x_0)\] \[x_2 = \text{solve-step}(x_1)\] \[x_3 = \text{solve-step}(x_2)\] \[\vdots\] \[x_{i+1} = \text{solve-step}(x_{i})\] \[\text{until eventually}\ \|f(x_{i+1}) - v\|\ \text{is practically zero.}\]

Newton’s method involves creating a custom solve_step() function for each new problem. The process is simple enough that we can create such functions automatically:

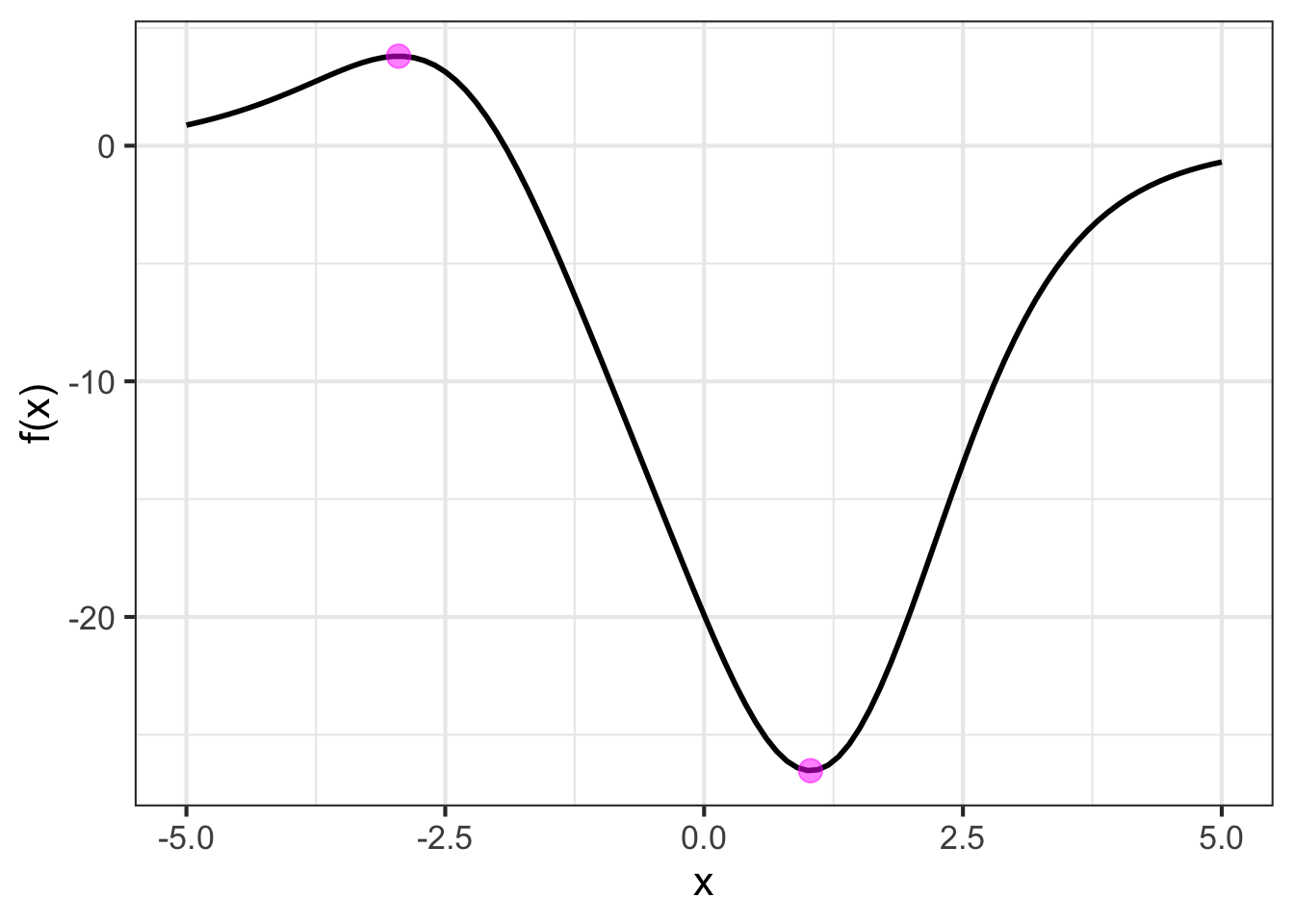

Let’s test it out with this function:

Construct the take-a-step function:

Take three steps starting at \(x_0 = 3\):

The Newton-step process is not guaranteed to work. By exploring cases where it fails, computational mathematicians1 have developed strategies for increasing the range of situations for which it works. Some of these strategies are incorporated in the R/mosaic function Zeros().

Zeros()

Zeros() takes two arguments: a function and a domain. The function is specified, as with other R/mosaic operators such as D(), slice_plot(), etc., as a tilde expression. Zeros() searches the domain for an input which makes the value of the function zero. If, instead, you want to find an input that makes the function value some other value, say \(f(x^\star) = v\), you construct an intermediate expression f(x) - v ~ x. Finding the zero of the intermediate function corresponds to finding \(f(x^star) = v\).

Sometimes there will be multiple zeros on the specified domain. To handle such situations, Zeros() returns a data frame with two columns. The first gives input values that correspond to an output near zero. The second column, named .output. calculates the output (and will be near zero). We will illustrate by solving \(x^3 = 6\) for \(x\).

48.2 Task: Argmax

The task of finding the input value that corresponds to a local maximum is called argmax finding. We don’t need to know the value of the local maximum to solve this problem. Instead, we designate a locale by specifying an initial guess \(x_0\) for the argmax. For argmax finding of an objective function \(f(x)\), we seek a \(x^\star\) such that \(f(x^\star) > f(x_0)\).

To accomplish this, we will approximate \(f(x)\) with a low-order polynomial, as we so often do. We will call the approximation \(\widehat{f(x)}\). In the solving task, the approximation was with a first-order polynomial. But first-order polynomials—that is, straight-line functions—don’t have a local argmax. We need to use a second-order polynomial. Easy enough: construct the second-order Taylor polynomial around \(x_0\):

\[\widehat{f}(x) \equiv f(x_0) + f'(x_0) \left[x - x_0\right] + \frac{1}{2} f''(x_0) \left[x-x_0\right]^2\] Remember that \(f(x_0)\), \(f'(x_0)\) and \(f''(x_0)\) are all fixed quantities; the output of the functions for the specific input \(x_0\).

To find the argmax of \(\widehat{f}(x)\), differentiate it with respect to \(x\) and find the zero of the derivative: \[\partial_x \widehat{f(x)} = f'(x_0) \underbrace{\partial_x\left[x - x_0\right]}_{{\large\strut}1} + \frac{1}{2} f''(x_0) \underbrace{\partial_x\left[x-x_0\right]^2}_{2 \left[x - x_0\right]} = 0 \]

This gives \[f'(x_0) + f''(x_0) \left[x - x_0\right] = 0\ .\] We will solve this equation for \(x\) and, having in mind the iterative process of the previous section, call the result \(x_1\) \[x_1 = x_0 - \frac{f'(x_0)}{f''(x_0)}\ .\] In other words, our new guess \(x_1\) will be a step away from the old guess \(x_0\), with the step being \(-f'(x_0) / f''(x_0)\). This also is called a Newton step. What’s different from the Newton step of the previous section is that the function whose zeros are being sought is not \(f(x)\) but \(f'(x)\).

48.3 Task: Iterate

In everyday language, to iterate means simply to repeat: to do something over and over again. In mathematics and in computing, “iterate” has a more specific meaning: to repeatedly perform an operation, each time taking the output from the previous round as the input to the current round.

For our purposes, it suffices to define iteration in terms of the use of a function \(g(x)\). The function must be such that the output of the function can be used as an input to the function; the output must be the same kind of thing as the input. The iteration starts with a specific value for the input. We will call this value \(x_0\). Iteration then means simply to compose the function with itself starting with \(x_0\) as the initial input. Here, for instance, is a four-step iteration: \[g(g(g(g(x_0))))\] Or, you might choose to iterate for ten steps: \[g(g(g(g(g(g(g(g(g(g(x_0))))))))))\] However many iteration steps you take, the output from the final step is what you work with.

Iteration is the mathematical engine behind many function operations. You’ve already seen it at work for the “solve” task and the “argmax” task.

48.4 Software for the tasks

Evaluation of a function—number one in the list at the head of this chapter—is so central to the use of computer languages generally that every language provides a direct means for doing so. In R, as you know, the evaluation syntax involves following the name of the functions by a pair of parentheses, placing in those parenthesis the values for the various arguments to the function. Example: log(5)

The other six operations on functions listed above, there is one (or sometimes more) specific R/mosaic functions. Every one of them takes, as a first argument, a tilde expression describing the function on which the operation is to be formed; on the left side is a formula for the function (which can be in terms of other, previously defined functions), on the right side is the with-respect-to input.

- Differentiate:

D(). Returns a function. - Anti-differentiate:

antiD(). Returns a function. - Integrate:

Integrate(). Returns a number. - Solve:

Zeros(). Returns a data frame with one row for each solution found. - Argmax:

argM()Finds one argmax and one argmin in the domain.local_argM()looks for all the local argmaxes and argmins. Returns a data frame with one row for each argmax or argmin found. - Iterate:

Iterate()Returns a data frame with the value of the initial input and the output after each iteration.

Each of operations 4-6 involves the specification of a domain. For Integrate(), this is, naturally, the domain of integration: the upper and lower bounds of the integral

For Zeros() and argM() the domain specifies where to search for the answer. Iterate() is slightly different. After the tilde expression comes an initial value \(x_0\) and then n= which you use to set the number of times to iterate.

A traditional name for such a person is “numerical analyst.”↩︎