33 Change & accumulation

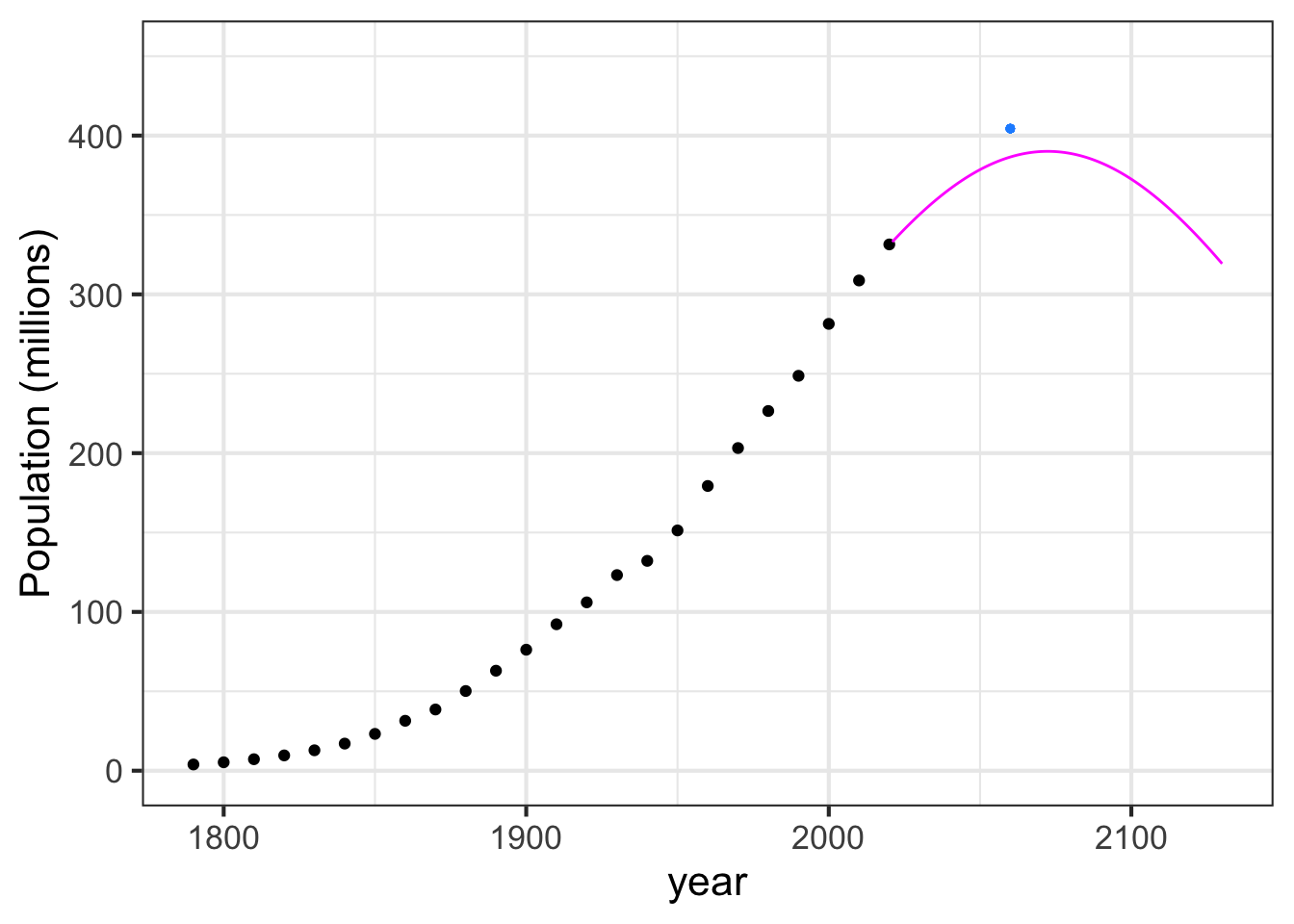

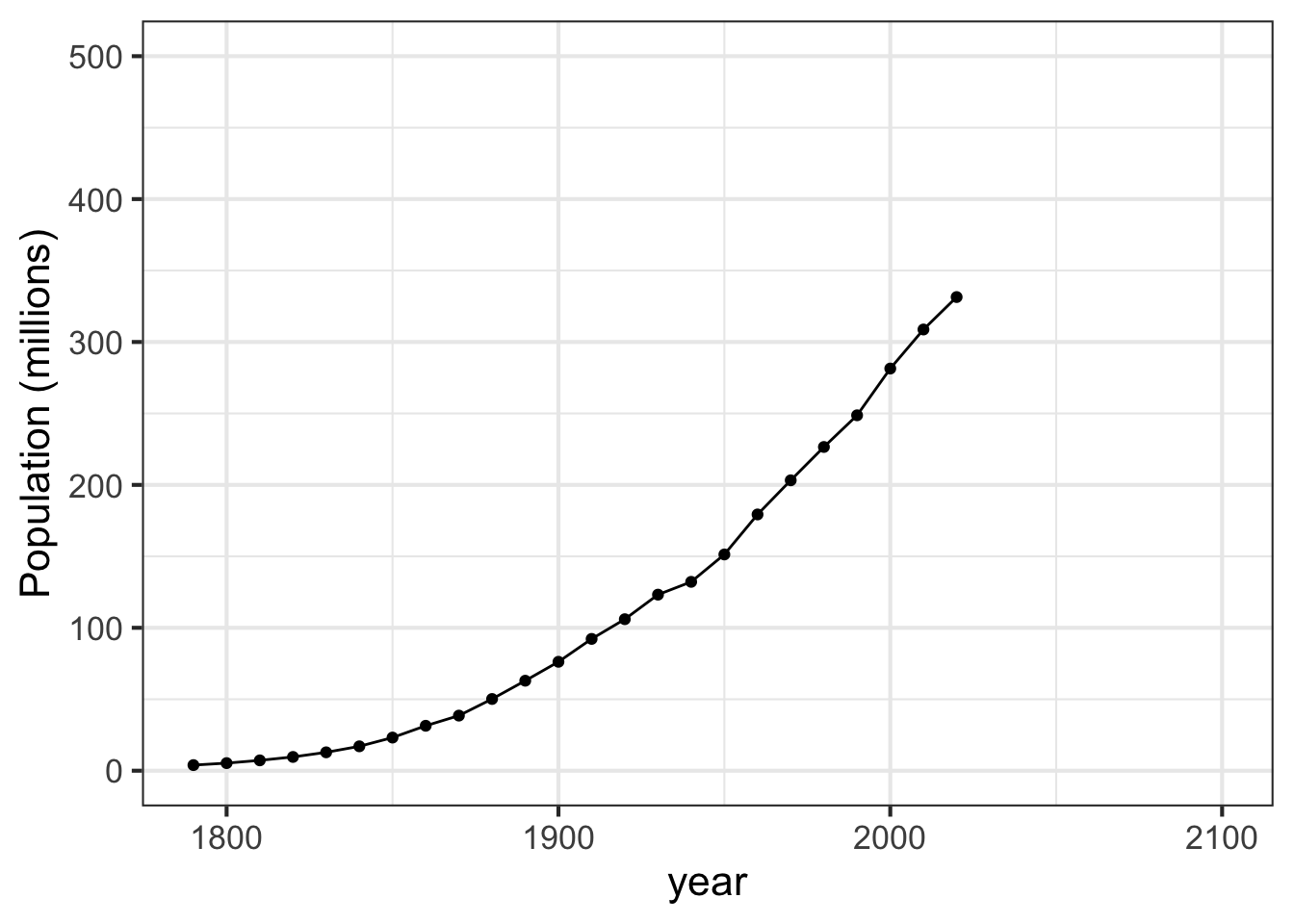

Every 10 years, starting in 1790, the US Census Bureau carries out a constitutionally mandated census: a count of the current population. The overall count as a function of year is shown in Figure fig-pop-graph. [Source]

In the 230 years spanned by the census data, the US population has grown more than 80-fold, from about 4 million in 1790 to about 330 million in 2020.

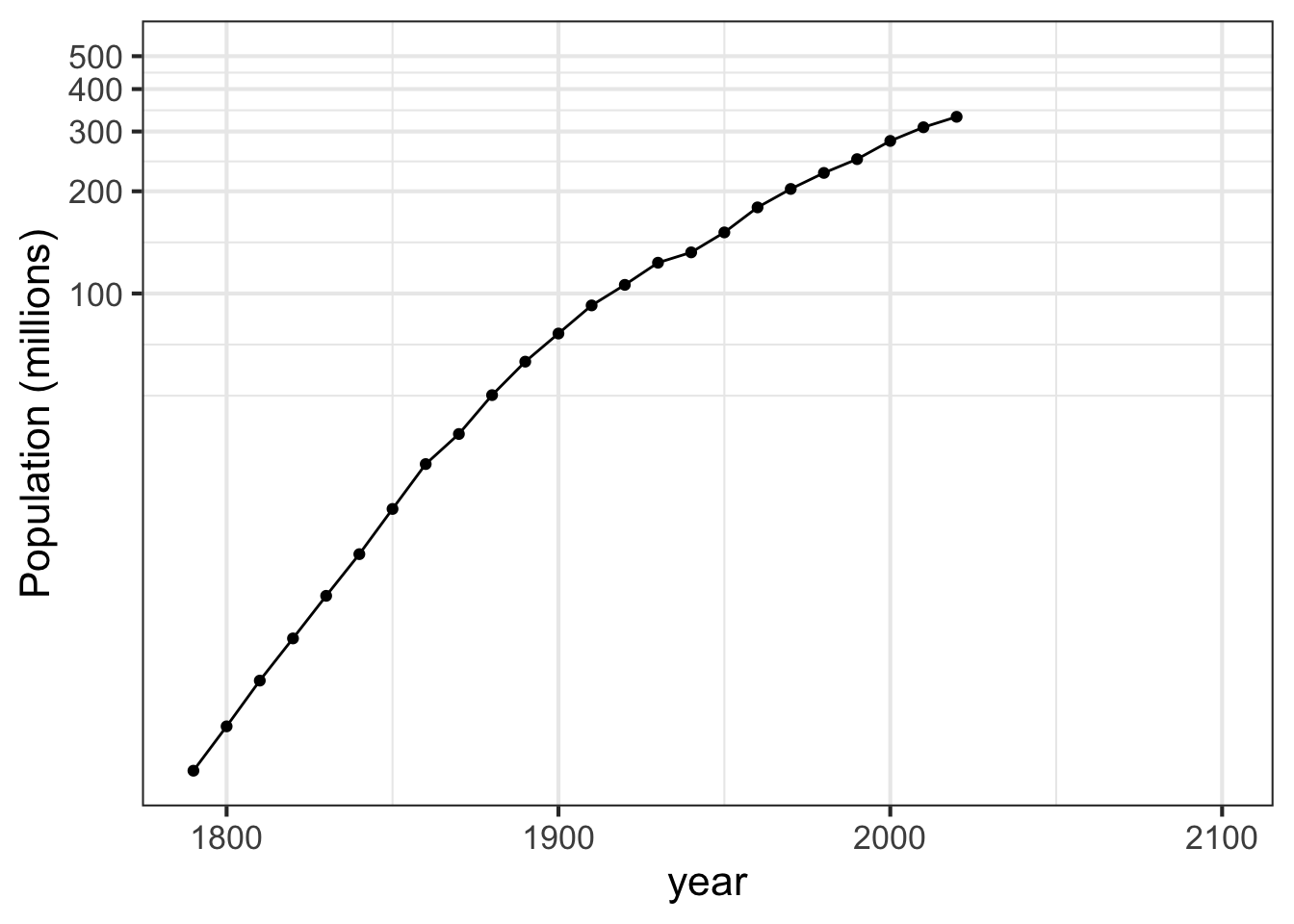

It is tempting to look for simple patterns in such data. Perhaps the US population has been growing exponentially. A semi-log plot of the same data suggests that the growth is only very roughly exponential. A truly exponential process would appear on semi-log axes as a straight line, that is, a curve with a constant slope, but the slope of the graph has been decreasing over the centuries.

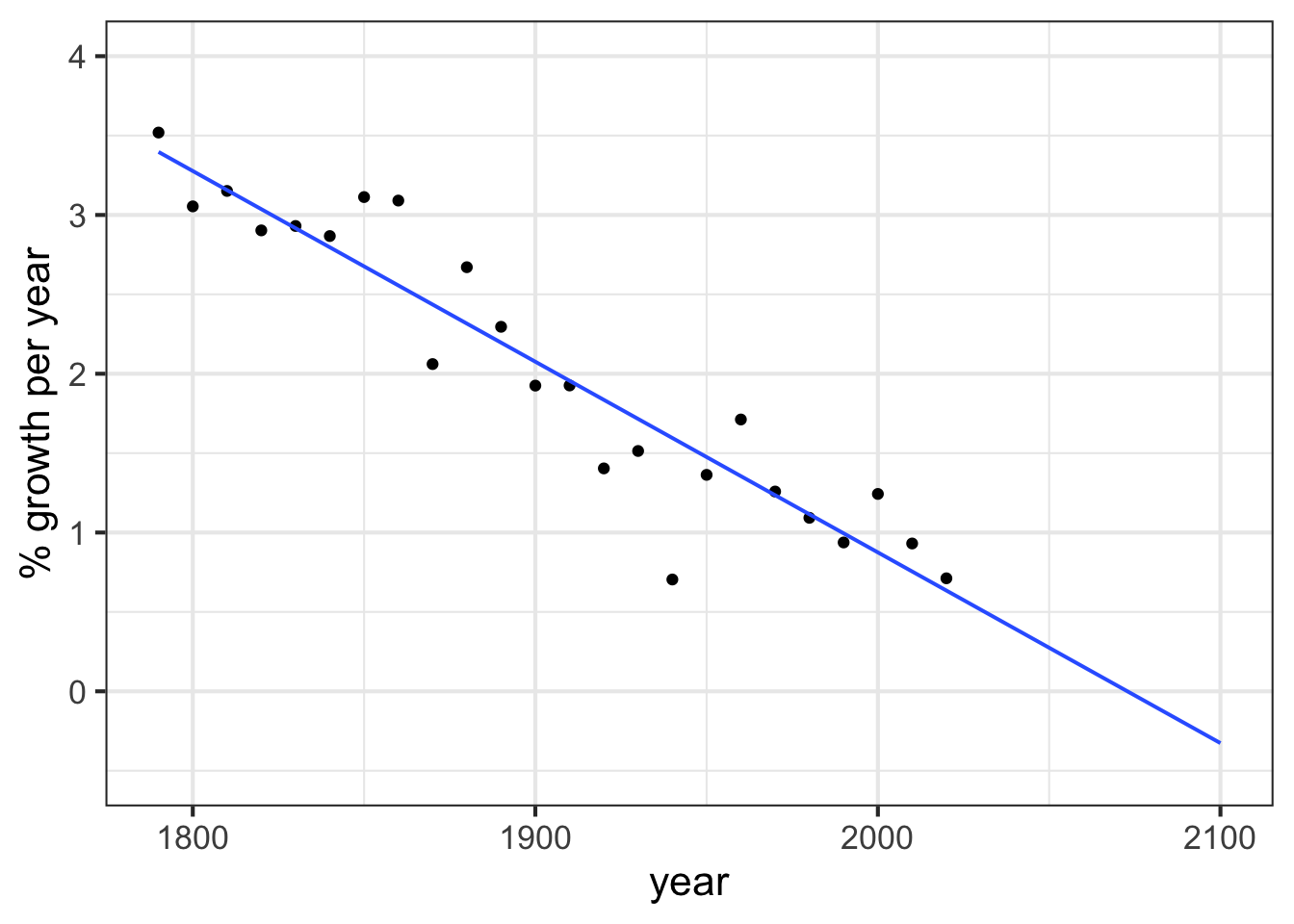

Insofar as the slope of the semi-log graph is informative, it amounts to this quantity: \[\partial_t \ln(\text{pop}(t)) = \frac{\partial_t\, \text{pop}(t)}{\text{pop}(t)}\] This is the per-capita rate of growth, that is, the rate of change in the population divided by the population. Conventionally, this fraction is presented as a percentage: percentage growth in the population per year, as in Figure fig-pop-growth.
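As a rough illustration of this slope, the sketch below uses only the two rounded endpoint figures quoted earlier (about 4 million in 1790 and about 330 million in 2020) to compute the average slope of \(\ln(\text{pop}(t))\) over the whole span. The variable names are illustrative, and the result is a 230-year average, not the decade-by-decade rate plotted in the figure.

```r
# Endpoint populations quoted in the text (rounded figures).
pop_1790 <- 4e6
pop_2020 <- 330e6
span_years <- 2020 - 1790

# Average slope of log(pop) versus year: the average per-capita growth rate.
avg_rate <- (log(pop_2020) - log(pop_1790)) / span_years

# Express as a percentage per year, as is conventional.
avg_rate_percent <- 100 * avg_rate
print(round(avg_rate_percent, 1))
```

With these rounded inputs the average comes out a little under 2% per year.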

The dots in the graph are a direct calculation from the census data. There is a lot of fluctuation, but an overall trend stands out: the population growth rate has been declining since the mid-to-late 1800s. The deviations from the trend are telling and correspond to historical events. The relatively low growth rate from 1860 to 1870 is the effect of the US Civil War. The Great Depression is seen in the very low growth from 1930 to 1940. The Baby Boom shows up in the growth from 1950 to 1960. The bump from 1990 to 2000? Not coincidentally, the Immigration Act of 1990 substantially increased the yearly rate of immigration.

If the trend in the growth rate continues, the US will reach zero net growth about 2070, then continue with negative growth. Of course, negative growth is just decline. A simple prediction from Figure fig-pop-growth is that the argmax of the US population—that is, the year that the growth rate reaches zero—will occur around 2070.

How large will the population be when it reaches its maximum?

In Block 2, we dealt with situations where we know the function \(f(t)\) and want to find the rate of change \(\partial_t f(t)\). Here, we know the rate of change of the population and we need to figure out the population itself. In other words, we need to figure out the unknown function \(f(t)\) from a known \(\partial_t f(t)\).

The process of figuring out \(f(t) \longrightarrow \partial_t f(t)\) is, of course, called differentiation. The opposite process, \(\partial_t f(t) \longrightarrow f(t)\) is called anti-differentiation.

In this block we will explore the methods for calculating anti-derivatives and some of the settings in which anti-derivative problems arise.

33.1 Accumulation

Imagine a simple setting: water flowing out of a tap into a basin or tank. The amount of water in the basin will be measured in a unit of volume, say liters. The flow \(f(t)\) of water from the tap into the basin is measured in different units, say liters per second. If \(V(t)\) is the volume of water in the basin as a function of time, then the flow at any instant is \(f(t) = \partial_t V(t)\).

There is a relationship between the two functions \(f(t)\) and \(V(t)\). Derivatives give a good description of that relationship: \[f(t) = \partial_t V(t)\] This description will be informative if we have measured the volume of water in the basin as a function of time and want to deduce the rate of flow from the tap. Now suppose we have measured the flow \(f(t)\) and want to figure out the volume. The volume at any instant is the past flow accumulated to that instant. As a matter of notation, we write this view of the relationship as \[V(t) = \int f(t) dt\ .\] Read this as “volume is the accumulated flow.”
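A minimal numerical sketch of "volume is the accumulated flow," using base R's `integrate()`. The flow function here is hypothetical (a tap that tapers off over time), and the sketch assumes the basin is empty at \(t = 0\); neither assumption comes from the text.

```r
# Hypothetical flow from the tap, in liters per second, tapering off over time.
flow <- function(t) 0.5 * exp(-t / 10)

# Volume in the basin at time t_end (seconds): the flow accumulated from 0 to t_end.
# Assumes the basin is empty at t = 0.
volume <- function(t_end) integrate(flow, lower = 0, upper = t_end)$value

# Volume after 20 seconds, in liters.
v20 <- volume(20)

# Check the relationship f(t) = d/dt V(t) with a centered finite difference.
h <- 1e-3
slope_at_20 <- (volume(20 + h) - volume(20 - h)) / (2 * h)

print(c(volume = v20, slope = slope_at_20, flow = flow(20)))
```

The slope of the accumulated volume should agree closely with the flow at the same instant, which is the derivative relationship read in the other direction.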

Other examples of accumulation and change:

- velocity is the rate of change of position with respect to time. Likewise, position is the accumulation of velocity over time.

- force is the rate of change of energy with respect to position. Likewise, energy is the accumulation of force as position changes.

- deficit is the rate of change of debt with respect to time. Likewise, debt is the accumulation of deficit over time.
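The deficit and debt pair can be sketched with yearly data, where accumulation is a running sum and the rate of change is a year-to-year difference. The numbers below are made up for illustration, and the debt is assumed to be zero before the first year.

```r
# Hypothetical yearly deficits (arbitrary currency units), one entry per year.
deficit <- c(10, 12, 8, 15, 20)

# Debt at the end of each year is the running total of the deficits,
# assuming the debt was zero before the first year.
debt <- cumsum(deficit)

# Differencing the debt recovers the yearly deficits (the rate of change).
recovered <- diff(c(0, debt))

print(debt)
print(recovered)
```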

33.2 Notation for anti-differentiation

For differentiation we are using the notation \(\partial_x\) as in \(\partial_x f(x)\). Remember that the subscript on \(\partial\) names the with-respect-to input. There are three pieces of information in this notation:

- The \(\partial\) symbol, which identifies the operation as differentiation.

- The name of the with-respect-to input \(\partial_{\color{magenta}{x}}\) written as a subscript to \(\partial\).

- The function to be differentiated, \(\partial_x {\color{magenta}{f(x)}}\).

For anti-differentiation, our notation must also specify the three pieces of information. It might be tempting to use the same notation as differentiation but replace the \(\partial\) symbol with something else, perhaps \(\eth\) or \(\spadesuit\) or \(\forall\), giving us something like \(\spadesuit_x f(x)\).

Convention has something different in store. The notation for anti-differentiation is \[\large \int f(x) dx\ .\]

- The \({\color{magenta}{\int}}\) is the marker for anti-differentiation.

- The name of the with-respect-to input is contained in the “dx” at the end of the notation: \(\int f(x) d{\color{magenta}{x}}\)

- The function being anti-differentiated is in the middle \(\int {\color{magenta}{f(x)}} dx\).

For those starting out with anti-differentiation, the conventional notation can be confusing, especially the \(dx\) part. It is easy to mistake \(d\) for a constant and \(x\) for part of the function being anti-differentiated.

Think of the \(\int\) and the \(dx\) as brackets around the function. You need both brackets for correct notation, the \(\int\) and the \(dx\) together telling you what operation to perform.

Remember that just as \(\partial_x f(x)\) is a function, so is \(\int f(x) dx\).
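As a small worked illustration (the function \(3x^2\) is chosen here for convenience and is not taken from the text), both operations return functions of \(x\): \[\partial_x \left(3x^2\right) = 6x \qquad\text{and}\qquad \int 3x^2\, dx = x^3 + C\ ,\] where \(C\) stands for an arbitrary constant. Differentiating the second result recovers the original function: \(\partial_x \left(x^3 + C\right) = 3x^2\).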

33.3 R/mosaic notation

Recall that the notation for differentiation in R/mosaic is D(f(x) ~ x). The R/mosaic notation for anti-differentiation is very similar:

antiD(f(x) ~ x)

This has the same three pieces of information as \(\int f(x) dx\):

- D() signifies differentiation whereas antiD() signifies anti-differentiation.

- ~ x identifies the with-respect-to input.

- f(x) ~ is the function on which the operation is to be performed.

Remember that just as D(f(x) ~ x) creates a new function out of f(x) ~ x, so does antiD(f(x) ~ x).
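A brief sketch of the two operators side by side, assuming the mosaicCalc package (which provides `D()` and `antiD()`) is installed. The expression `3 * x^2` and the names `df` and `antif` are chosen only for illustration.

```r
# Assumes the mosaicCalc package is installed; it provides D() and antiD().
library(mosaicCalc)

# Differentiate 3 x^2 with respect to x; the result is a function of x.
df <- D(3 * x^2 ~ x)

# Anti-differentiate the same expression; the result is also a function of x.
antif <- antiD(3 * x^2 ~ x)

df(2)                  # slope of 3 x^2 at x = 2
antif(2) - antif(0)    # accumulation of 3 x^2 from x = 0 to x = 2
```

Both `df` and `antif` are ordinary R functions that can be evaluated, plotted, or passed to other operations.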

33.4 Dimension and anti-differentiation

This entire block will be about anti-differentiation, its properties and its uses. You already know that anti-differentiation (as the name suggests) is the inverse of differentiation. There is one consequence of this that is helpful to keep in mind as we move on to other chapters. This being calculus, the functions that we construct and operate upon have inputs that are quantities and outputs that are also quantities. Every quantity has a dimension, as discussed in sec-dimensions-and-units. When you are working with any quantity, you should be sure that you know its dimension and its units.

The dimension of the input to a function does not by any means have to be the same as the dimension of the output. For instance, we have been using many functions where the input has dimension time (T) and the output is position (dimension L) or velocity (dimension L/T) or acceleration (dimension L/T\(^2\)).

Imagine working with some function \(f(y)\) that is relevant to some modeling project of interest to you. Returning to the bracket notation that we used in sec-dimensions-and-units, the dimension of the input quantity will be [\(y\)]. The dimension of the output quantity is [\(f(y)\)]. (Remember from Chapter 16 that [\(y\)] means “the dimension of quantity \(y\)” and that [\(f(y)\)] means “the dimension of the output from \(f(y)\).”)

The function \(\partial_y f(y)\) has the same input dimension \([y]\) but the output will be \([f(y)] / [y]\). For example, suppose \(f(y)\) is the mass of fuel in a rocket as a function of time \(y\). The output of \(f(y)\) has dimension M. The input dimension \([y]\) is T.

The output of the function \(\partial_y f(y)\) has dimension \([f(y)] / [y]\), which in this case will be M / T. (Less abstractly, if the fuel mass is given in kg, and time is measured in seconds, then \(\partial_y f(y)\) will have units of kg-per-second.)

How about the dimension of the anti-derivative \(F(y) = \int f(y) dy\)? Since \(F(y)\) is the anti-derivative of \(f(y)\) (with respect to \(y\)), we know that \(\partial_y F(y) = f(y)\). Taking the dimension of both sides \[[\partial_y F(y)] = \frac{[F(y)]}{[y]} = \frac{[F(y)]}{\text{T}} = [f(y)] = \text{M}\] Consequently, \([F(y)] = \text{M}\,\text{T}\).

To summarize:

- The dimension of the derivative \(\partial_y f(y)\) will be \([f(y)] / [y]\).

- The dimension of the anti-derivative \(\int f(y) dy\) will be \([f(y)]\times [y]\).

Or, more concisely:

Differentiation is like division, anti-differentiation is like multiplication.
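The division and multiplication rule can be sketched as arithmetic on exponents. In this illustrative encoding (an assumption of the sketch, not a convention from the text), a dimension is a vector of exponents for M, L, and T, so dividing by a dimension subtracts exponents and multiplying adds them. The helper names are hypothetical.

```r
# Represent a dimension as exponents of mass (M), length (L), and time (T).
dim_M <- c(M = 1, L = 0, T = 0)   # mass, e.g. fuel mass
dim_T <- c(M = 0, L = 0, T = 1)   # time

# Differentiation divides the output dimension by the input dimension,
# which subtracts exponents.
dim_of_derivative <- function(output_dim, input_dim) output_dim - input_dim

# Anti-differentiation multiplies by the input dimension, which adds exponents.
dim_of_antiderivative <- function(output_dim, input_dim) output_dim + input_dim

fuel_rate <- dim_of_derivative(dim_M, dim_T)       # M / T
fuel_accum <- dim_of_antiderivative(dim_M, dim_T)  # M T
print(rbind(fuel_rate, fuel_accum))
```

For the rocket-fuel example, the derivative has a time exponent of -1 (M / T) and the anti-derivative has a time exponent of +1 (M T).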

Paying attention to the dimensions (and units!) of input and output can be a boon to the calculus student. Often students have some function \(f(y)\) and they are wondering which of the several calculus operations they are supposed to do: differentiation, anti-differentiation, finding a maximum, finding an argmax or a zero. Start by figuring out the dimension of the quantity you want. From that, you can often figure out which operation is appropriate.

To illustrate, imagine that you have constructed \(f(y)\) for your task and you know, say, \[[f(y)] = \text{M} \quad \text{and} \quad [y] = \text{T}\ .\] Look things up in the following table:

| Dimension of result | Calculus operation |
|---|---|
| M / T | differentiate |
| M T | anti-differentiate |
| M | find max or min |
| T | find argmax/argmin or a function zero |
| M T\(^2\) | anti-differentiate twice in succession |
| M / T\(^2\) | differentiate twice in succession |

For example, suppose the output of the accelerometer on your rocket has dimension L / T\(^2\). You are trying to figure out your altitude from the accelerometer reading. Altitude has dimension L. The table has M in place of L, but the pattern is the same: to remove the T\(^2\) from the denominator, you want to anti-differentiate acceleration twice in succession.
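A minimal sketch of anti-differentiating twice in succession, using base R's `integrate()`. The accelerometer signal is hypothetical (a constant 2 m/s\(^2\)), and the sketch assumes zero velocity and zero altitude at \(t = 0\); none of these values come from the text.

```r
# Hypothetical accelerometer signal: constant 2 m/s^2 (dimension L / T^2).
accel <- function(t) rep(2, length(t))

# First anti-differentiation: velocity (L / T), assuming zero velocity at t = 0.
velocity <- function(t) {
  sapply(t, function(ti) integrate(accel, lower = 0, upper = ti)$value)
}

# Second anti-differentiation: altitude (L), assuming zero altitude at t = 0.
altitude <- function(t) {
  sapply(t, function(ti) integrate(velocity, lower = 0, upper = ti)$value)
}

alt_10 <- altitude(10)   # altitude in meters after 10 seconds
print(alt_10)
```

For constant acceleration \(a\), the result can be checked against the familiar formula \(\tfrac{1}{2} a t^2\), which gives 100 meters at \(t = 10\) seconds here.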

33.5 From Calculus Made Easy

Calculus Made Easy, by Silvanus P. Thompson, is a classic, concise, and elegant textbook from 1910. It takes a common-sense approach, sometimes lampooning the traditional approach to teaching calculus.

Some calculus-tricks are quite easy. Some are enormously difficult. The fools who write the textbooks of advanced mathematics—and they are mostly clever fools—seldom take the trouble to show you how easy the easy calculations are. On the contrary, they seem to desire to impress you with their tremendous cleverness by going about it in the most difficult way. — From the preface

Thompson’s first chapter starts with the notation of accumulation, which he calls “the preliminary terror.”

The preliminary terror … can be abolished once for all by simply stating what is the meaning—in common-sense terms—of the two principal symbols that are used in calculating.

These dreadful symbols are:

- \(\Large\ d\) which merely means “a little bit of.”

Thus \(dx\) means a little bit of \(x\); or \(du\) means a little bit of \(u\). Ordinary mathematicians think it more polite to say “an element of,” instead of “a little bit of.” Just as you please. But you will find that these little bits (or elements) may be considered to be indefinitely small.

- \(\ \ \large\int\) which is merely a long \(S\), and may be called (if you like) “the sum of.”

Thus \(\ \int dx\) means the sum of all the little bits of \(x\); or \(\ \int dt\) means the sum of all the little bits of \(t\). Ordinary mathematicians call this symbol “the integral of.” Now any fool can see that if \(x\) is considered as made up of a lot of little bits, each of which is called \(dx\), if you add them all up together you get the sum of all the \(dx\)’s, (which is the same thing as the whole of \(x\)). The word “integral” simply means “the whole.” If you think of the duration of time for one hour, you may (if you like) think of it as cut up into \(3600\) little bits called seconds. The whole of the \(3600\) little bits added up together make one hour.

When you see an expression that begins with this terrifying symbol, you will henceforth know that it is put there merely to give you instructions that you are now to perform the operation (if you can) of totaling up all the little bits that are indicated by the symbols that follow.

The next chapter shows what it means to “total up all the little bits” of a function.