5 Pattern-book functions

One of the major tasks in modeling is to construct functions that represent a situation or phenomenon of interest. As you will see in later chapters, there are many ways to build a function. For example, you may have data about a relationship. Or, sometimes, you can figure out the shape of a function by asking simple questions about the situation or phenomenon.

In this Chapter, we introduce the pattern-book functions—a brief list of basic mathematical functions that apply in a surprisingly large range of situations. Think of the items in the pattern-book list as different actors, each of whom is skilled in portraying an archetypal character: hero, outlaw, lover, fool, comic. A play brings together different characters, costumes them, and relates them to one another through dialog or other means.

Costume designers use their imagination, often enhanced by referencing published collections of patterns and customizing them to the needs at hand. These references are sometimes called “pattern books,” an example of which is shown to the right. Each page of the book gives the pattern and instructions for constructing a particular type of costume.

Similarly, we will start with a set of functions collected over generations of experience. To remind us of their role in modeling, we will call these pattern-book functions. These pattern-book functions are useful in describing diverse aspects of the real world and have simple calculus-related properties that make them relatively easy to deal with. There are just a handful of pattern-book functions from which untold numbers of useful modeling functions can be constructed. Mastering calculus is in part a matter of becoming familiar with the mathematical connections among the pattern-book functions, just as understanding a play calls for becoming familiar with the relationships among the characters.

Table tbl-pattern-book-list lists our pattern-book functions, written both traditionally and in R. The list is short. You should memorize the names and be able to associate each name readily with the traditional notation.

| Pattern name | Traditional notation | R notation |
|---|---|---|
| exponential | \(e^x\) | exp(x) |
| logarithm (“natural log”) | \(\ln(x)\) | log(x) |
| sinusoid | \(\sin(x)\) | sin(x) |
| square | \(x^2\) | x^2 |
| identity | \(x\) | x |
| one | \(1\) | 1 |
| reciprocal | \(1/x\) or \(x^{-1}\) | 1/x |
| gaussian | \(\dnorm(x)\) | dnorm(x) |
| sigmoid | \(\pnorm(x)\) | pnorm(x) |

The input name used in the table, \(x\), is entirely arbitrary. You can (and will) use the pattern-book functions with other quantities—\(y\), \(z\), \(t\), and so on, even zebra if you like.
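As a quick check of the R notation in the table, the sketch below evaluates each pattern-book function at one input. The input value 1 and the variable names `values` and `zebra` are arbitrary choices for illustration, not part of the text.

```r
# Evaluate each pattern-book function at a single, arbitrarily chosen input.
x <- 1
values <- c(
  exponential = exp(x),
  logarithm   = log(x),
  sinusoid    = sin(x),
  square      = x^2,
  identity    = x,
  one         = 1,
  reciprocal  = 1 / x,
  gaussian    = dnorm(x),
  sigmoid     = pnorm(x)
)
print(round(values, 3))

# The input name is arbitrary: the same functions accept any name.
zebra <- 0
exp(zebra)    # the exponential at input 0 is 1
pnorm(zebra)  # the sigmoid at input 0 is 0.5
```

Changing `x` to another positive number and re-running the block is an easy way to build intuition for each function's input/output behavior.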

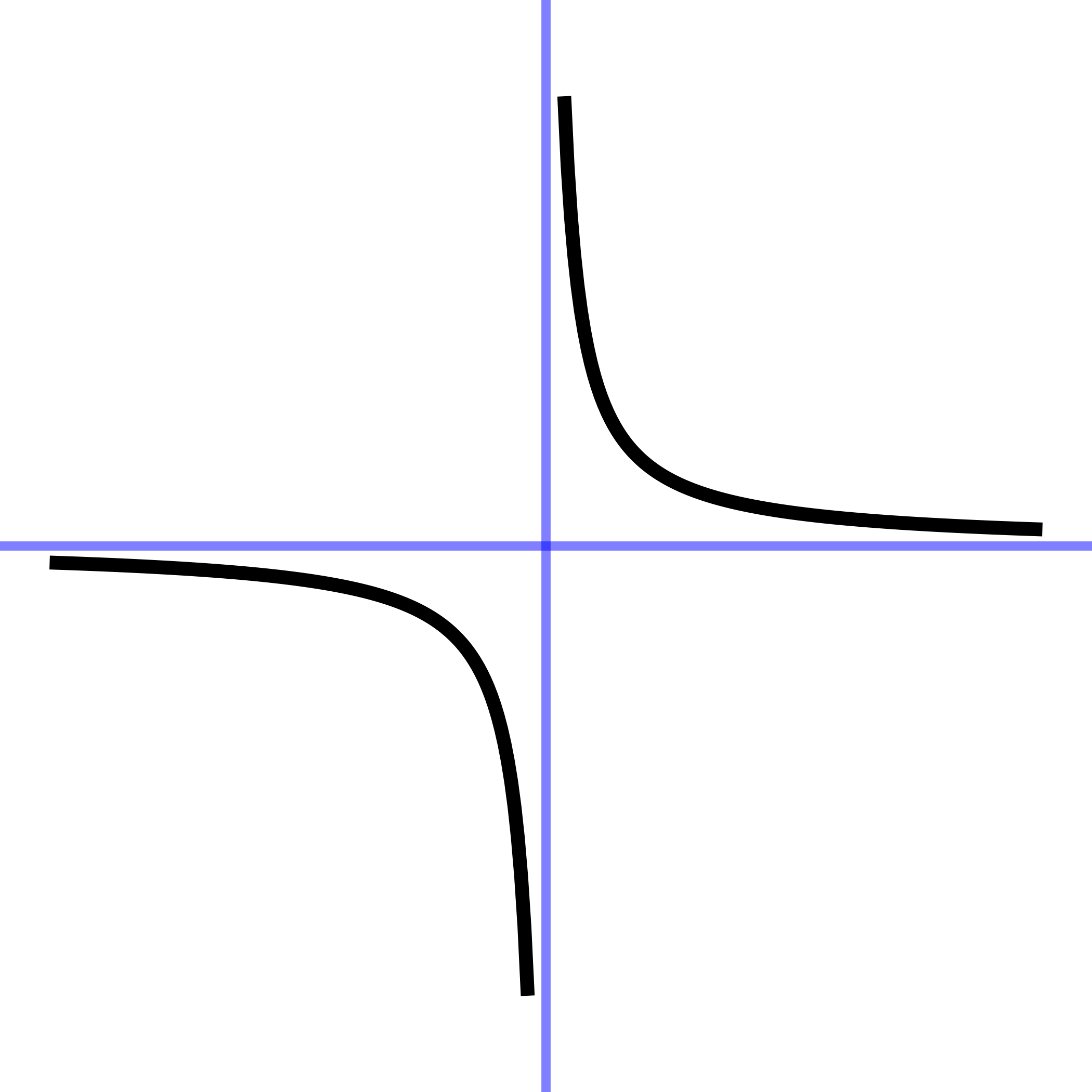

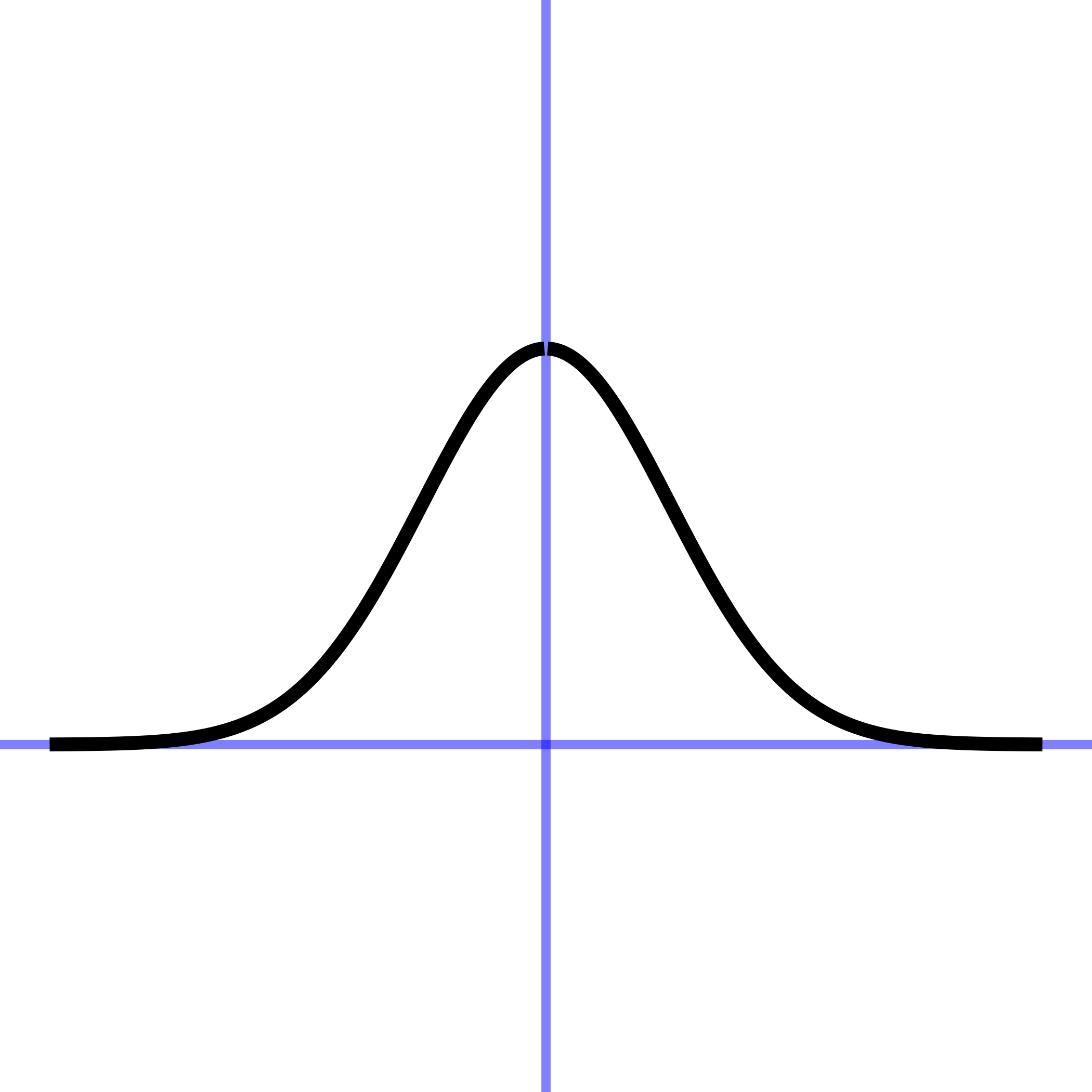

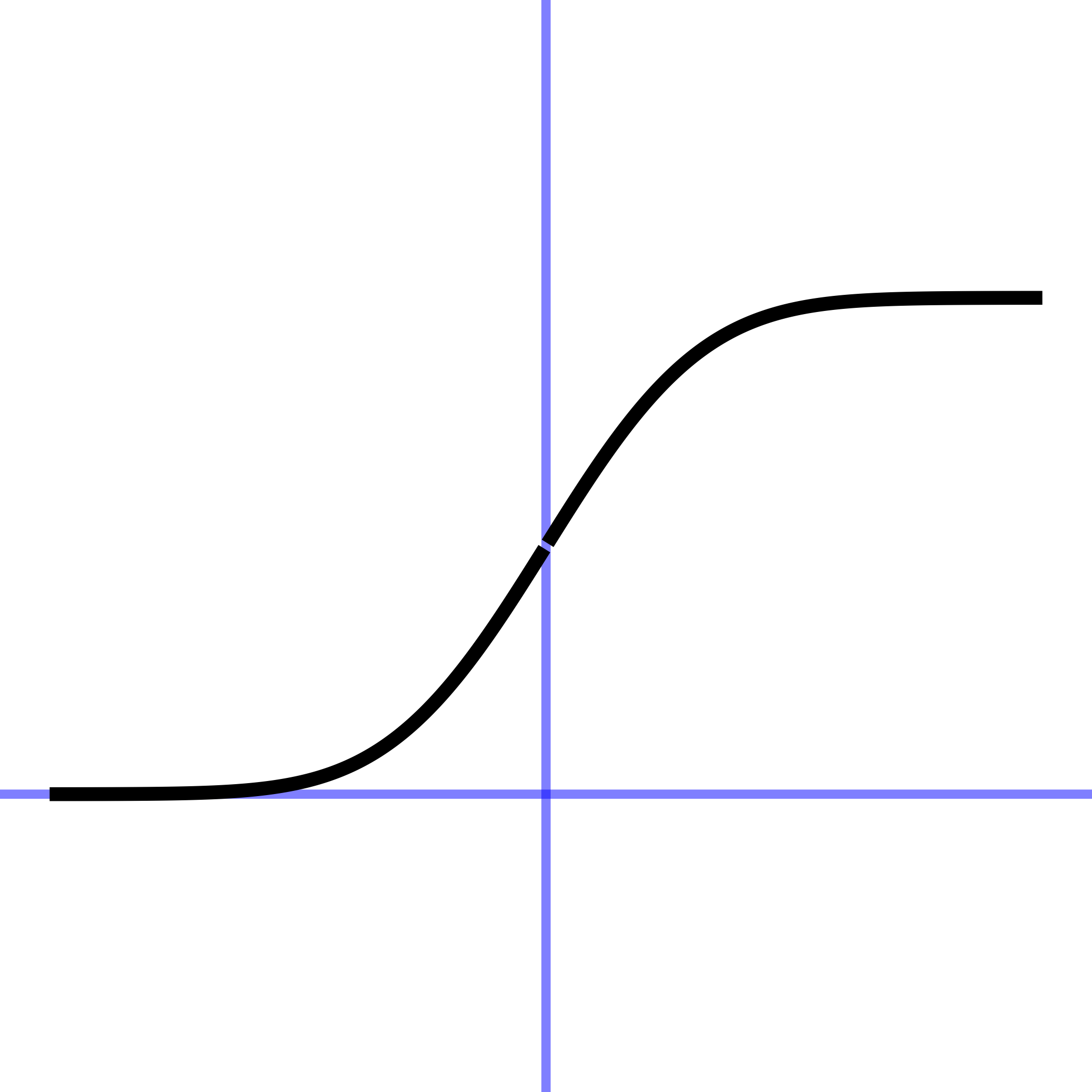

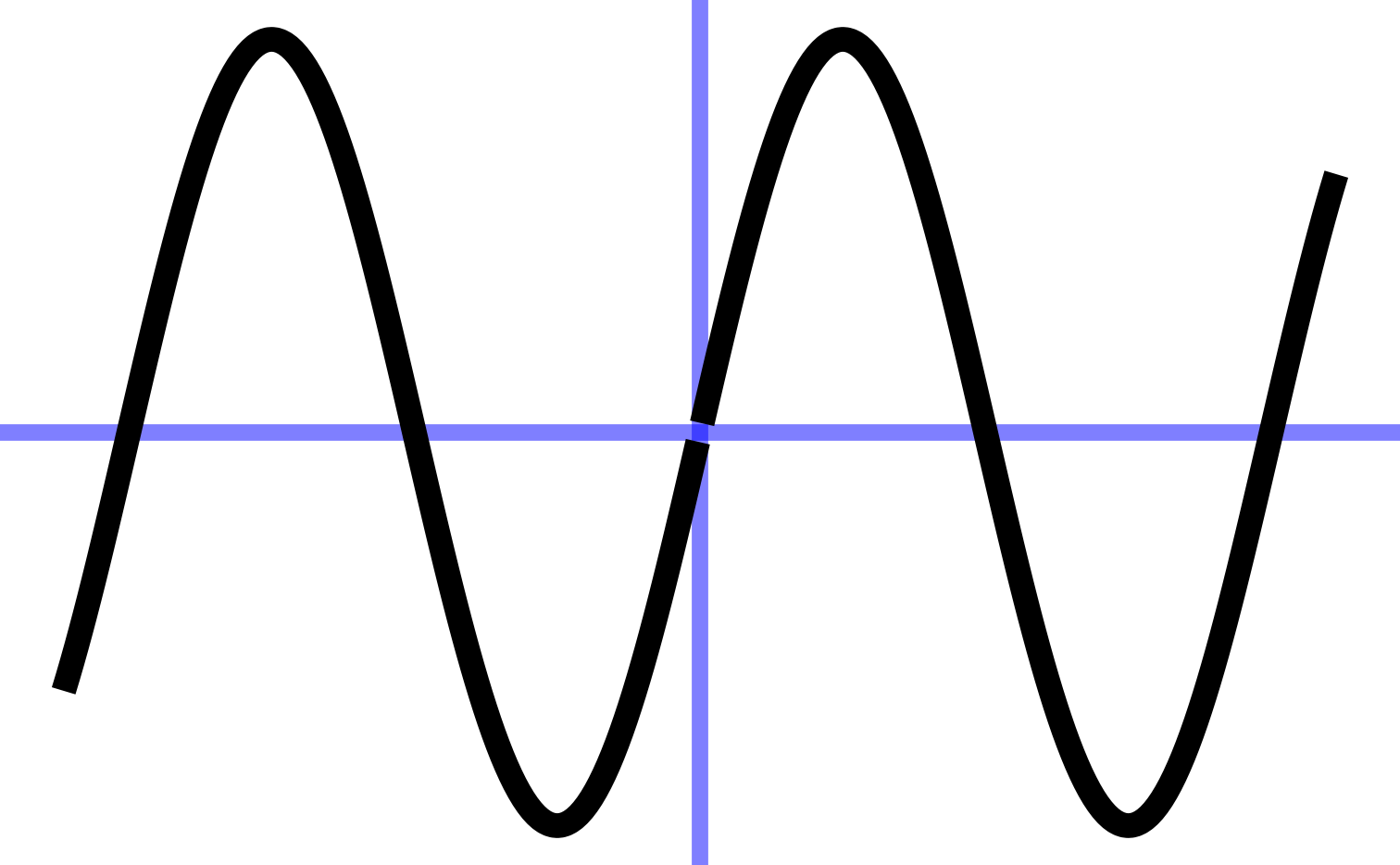

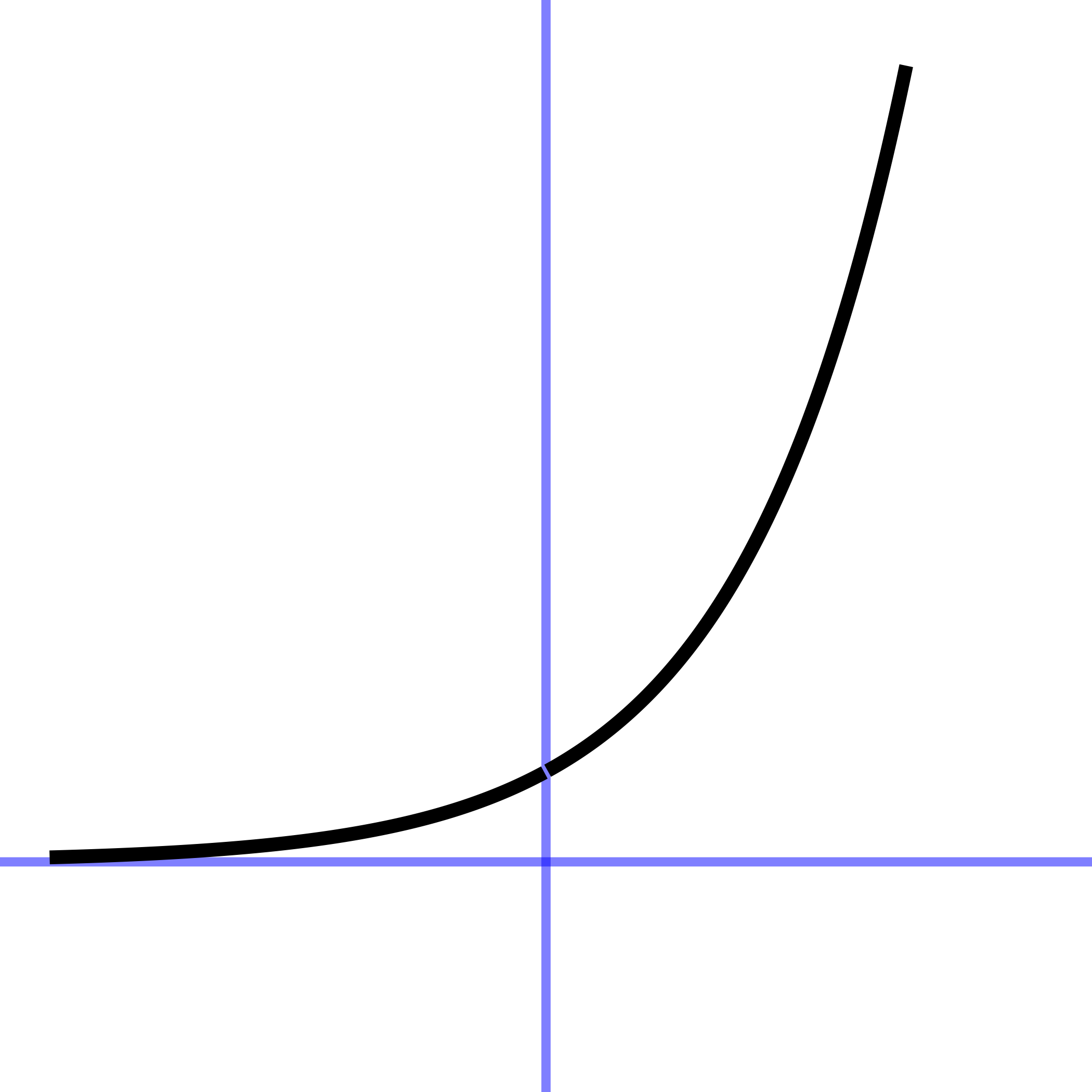

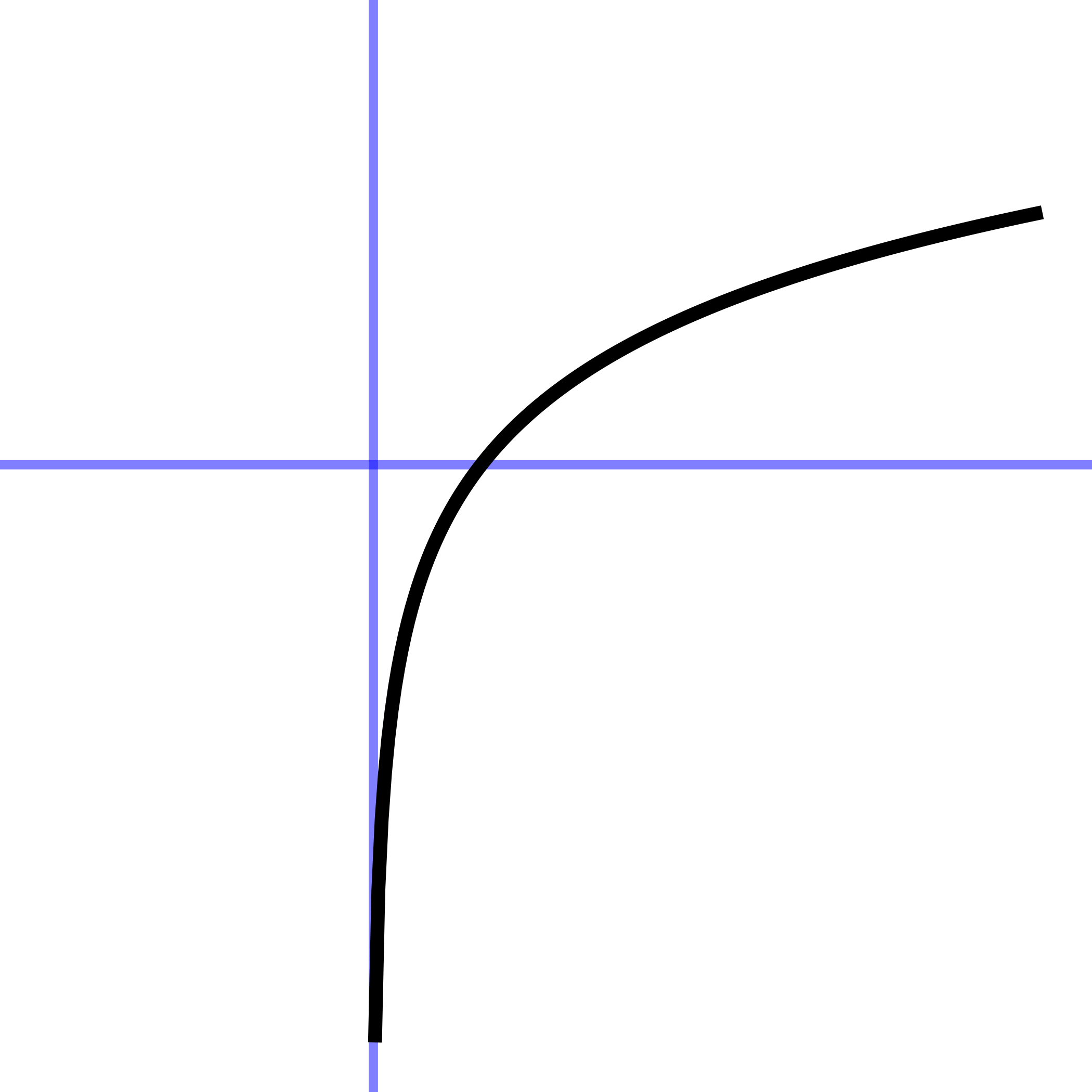

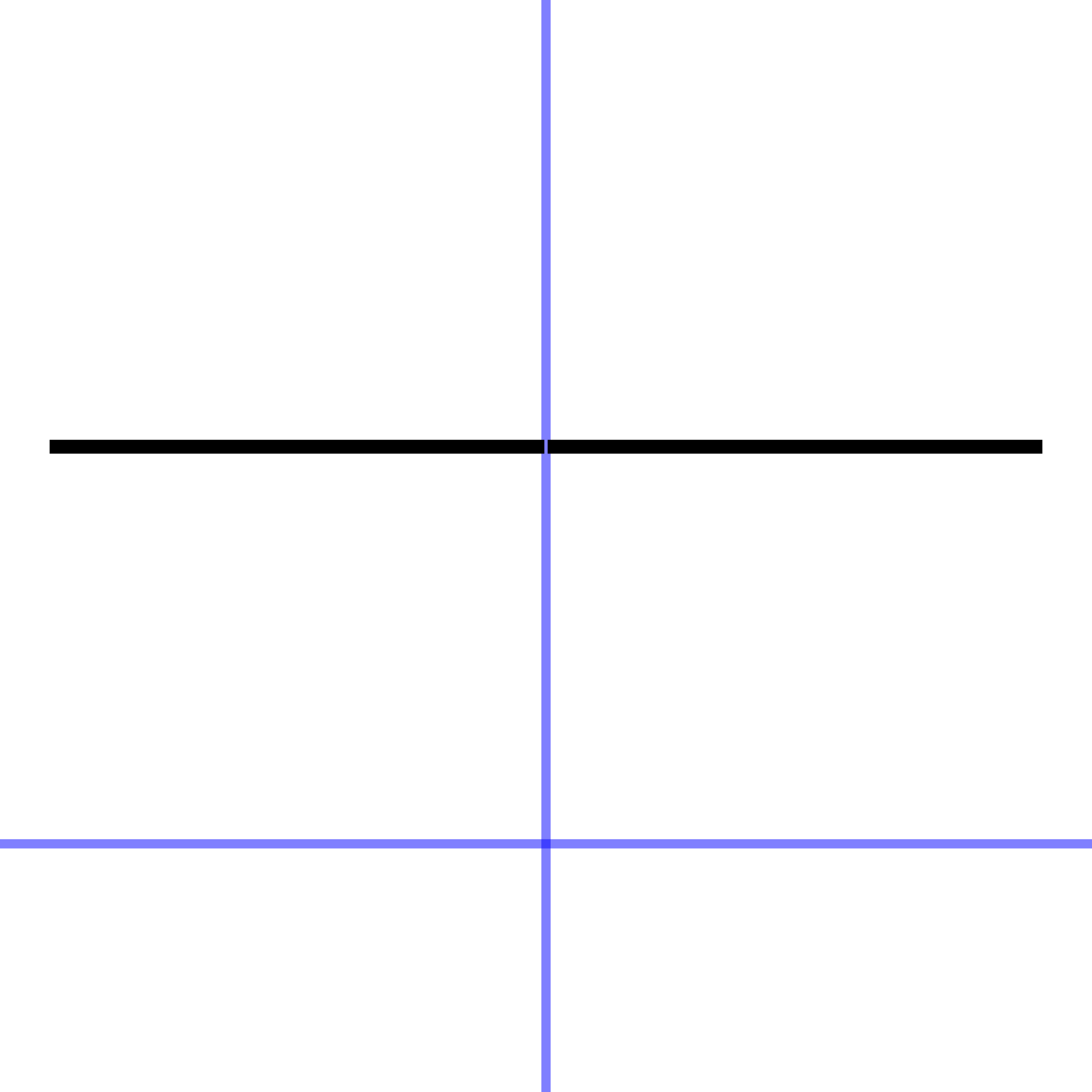

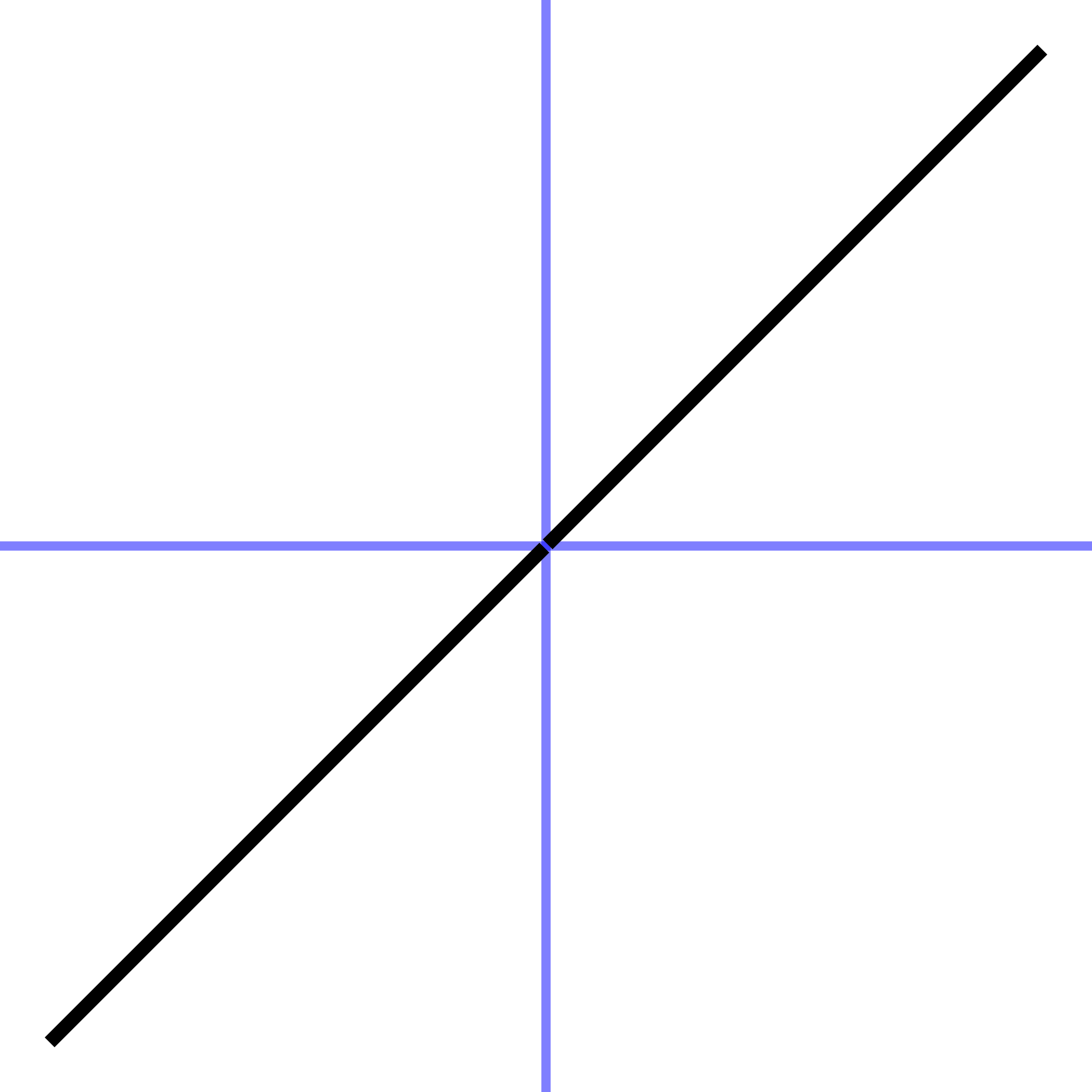

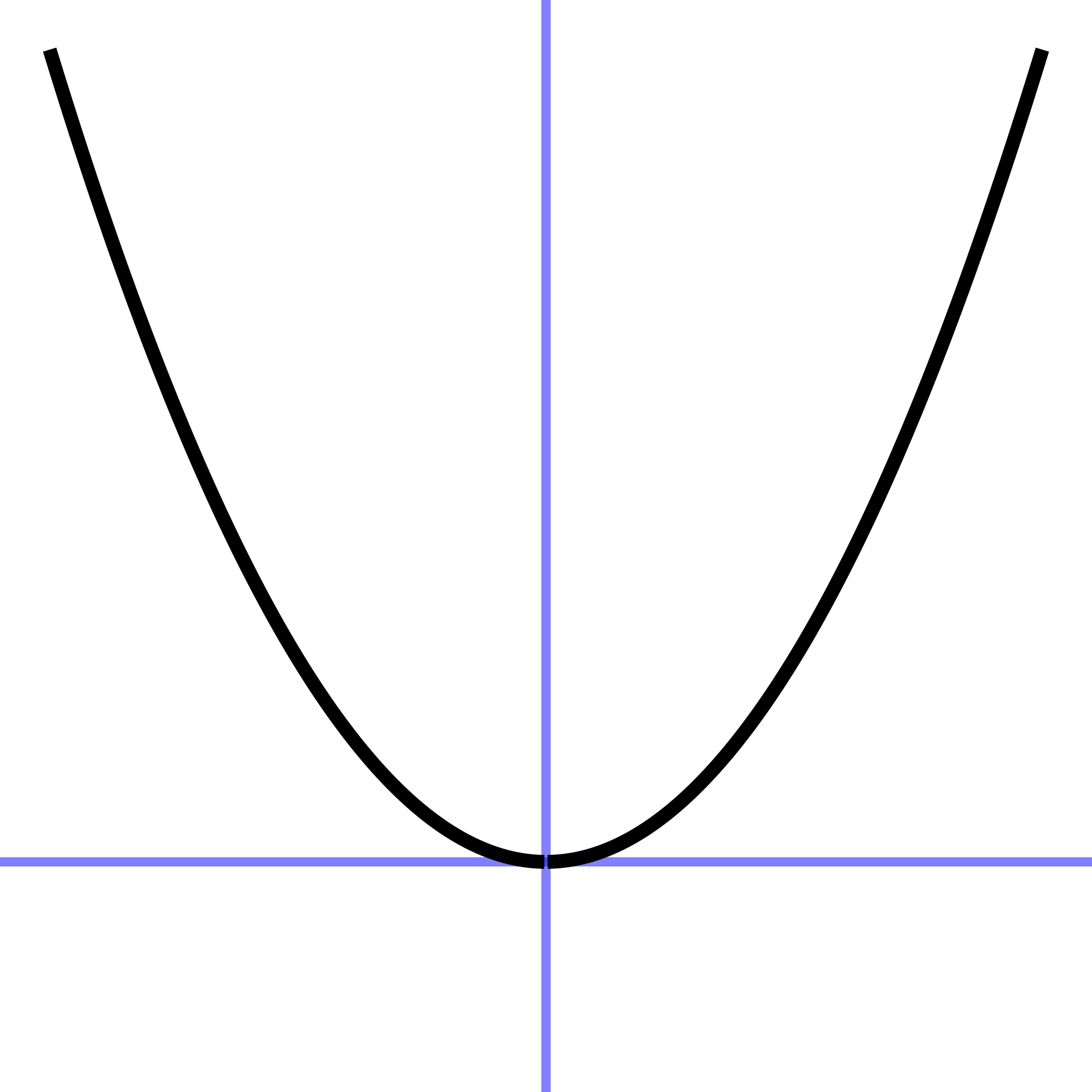

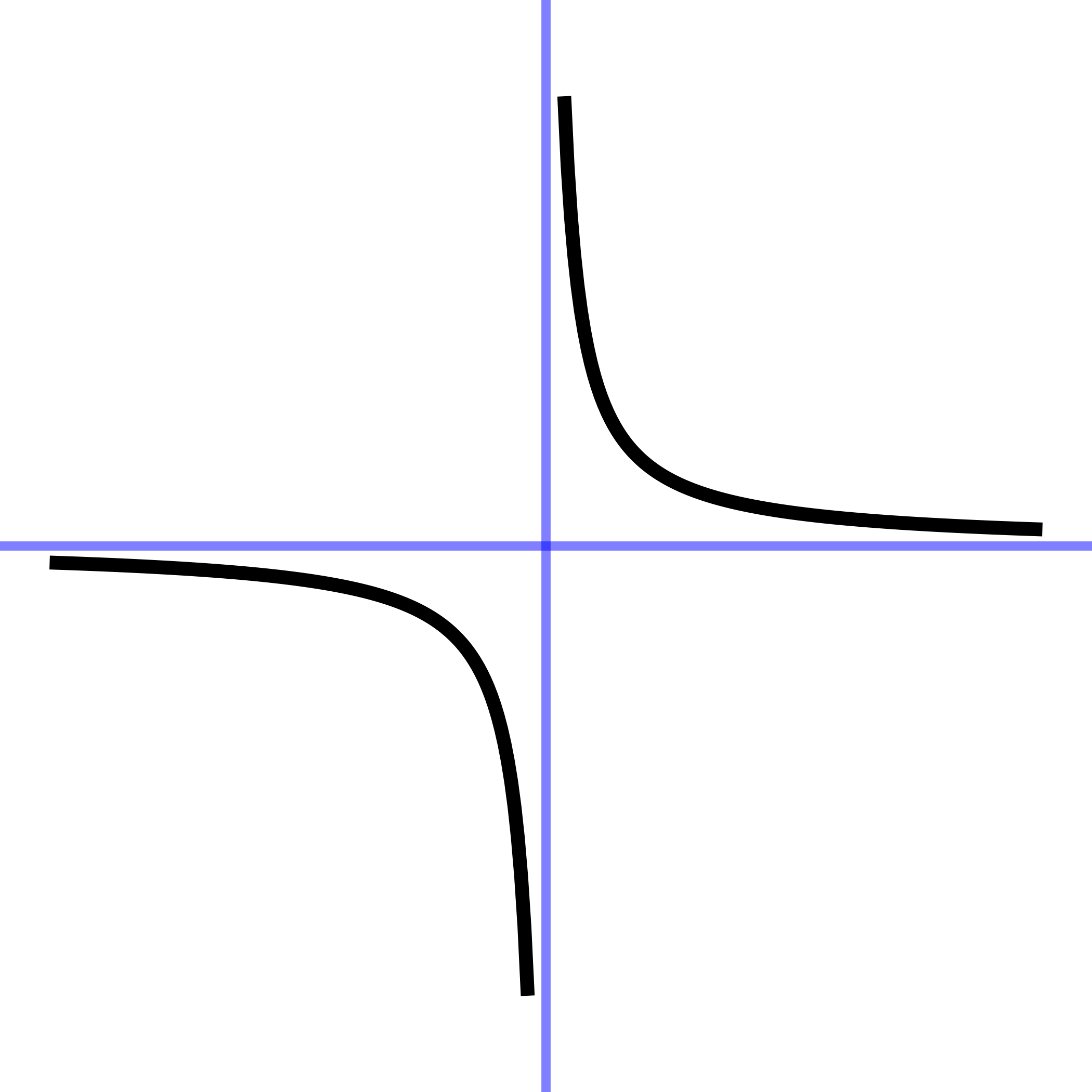

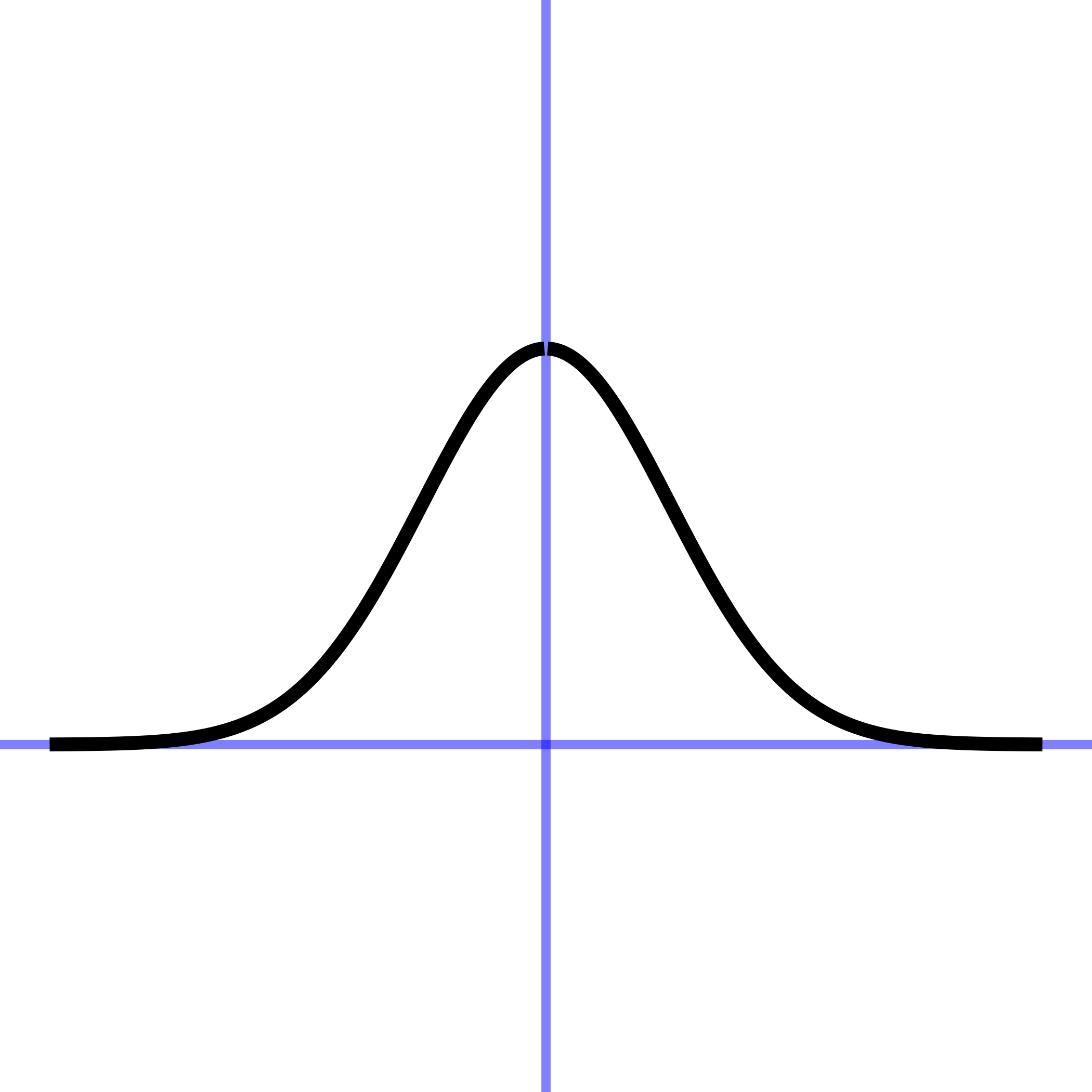

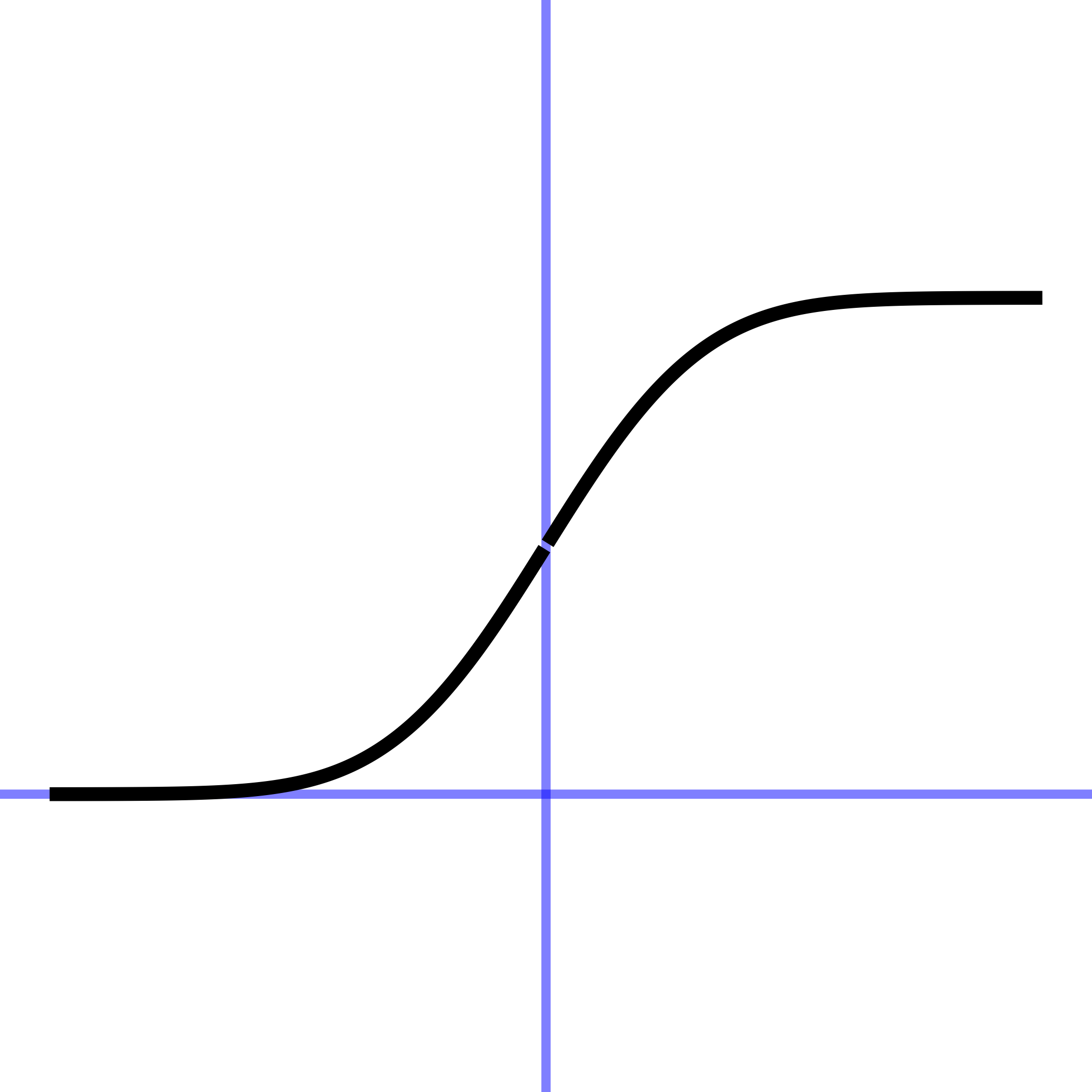

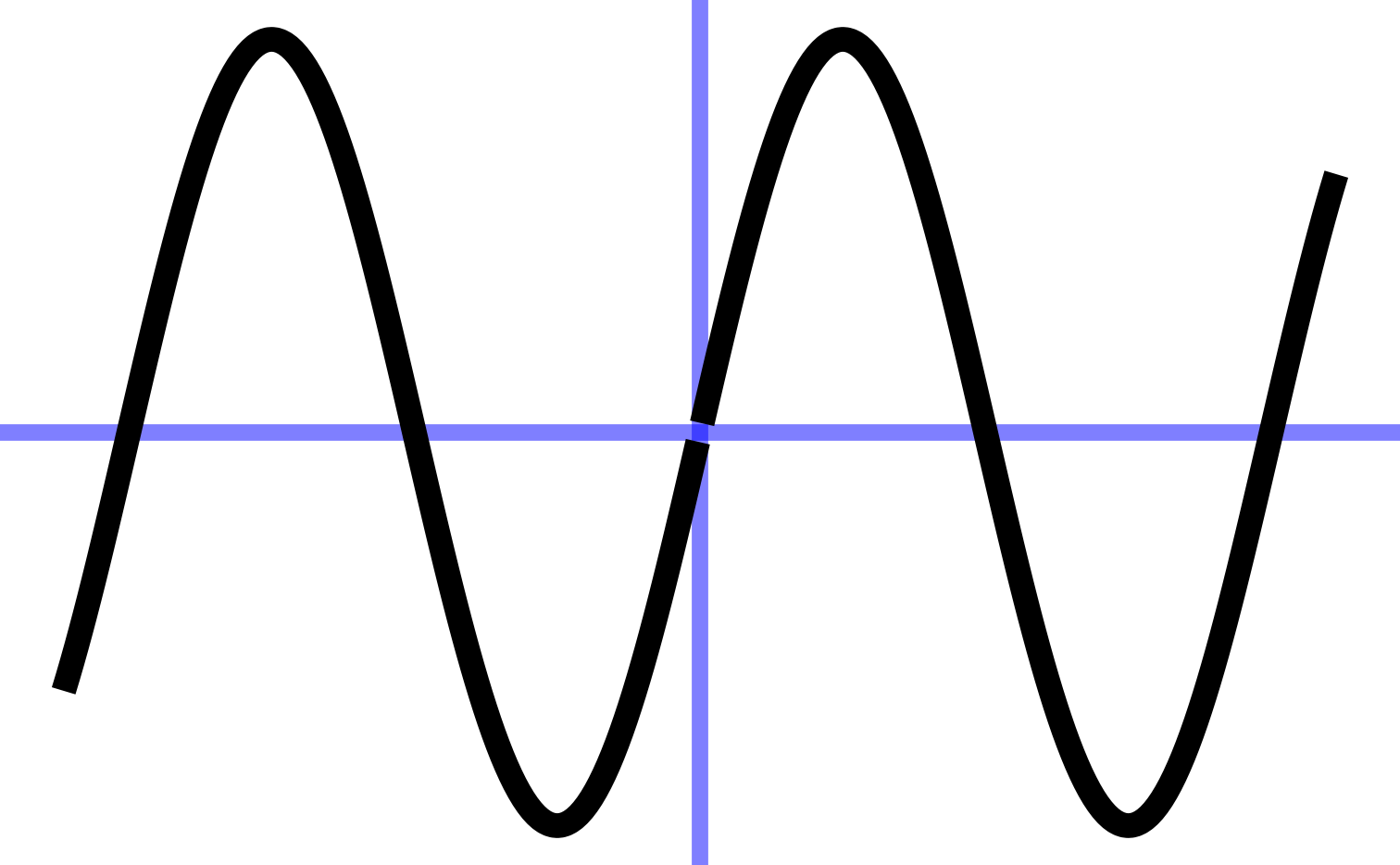

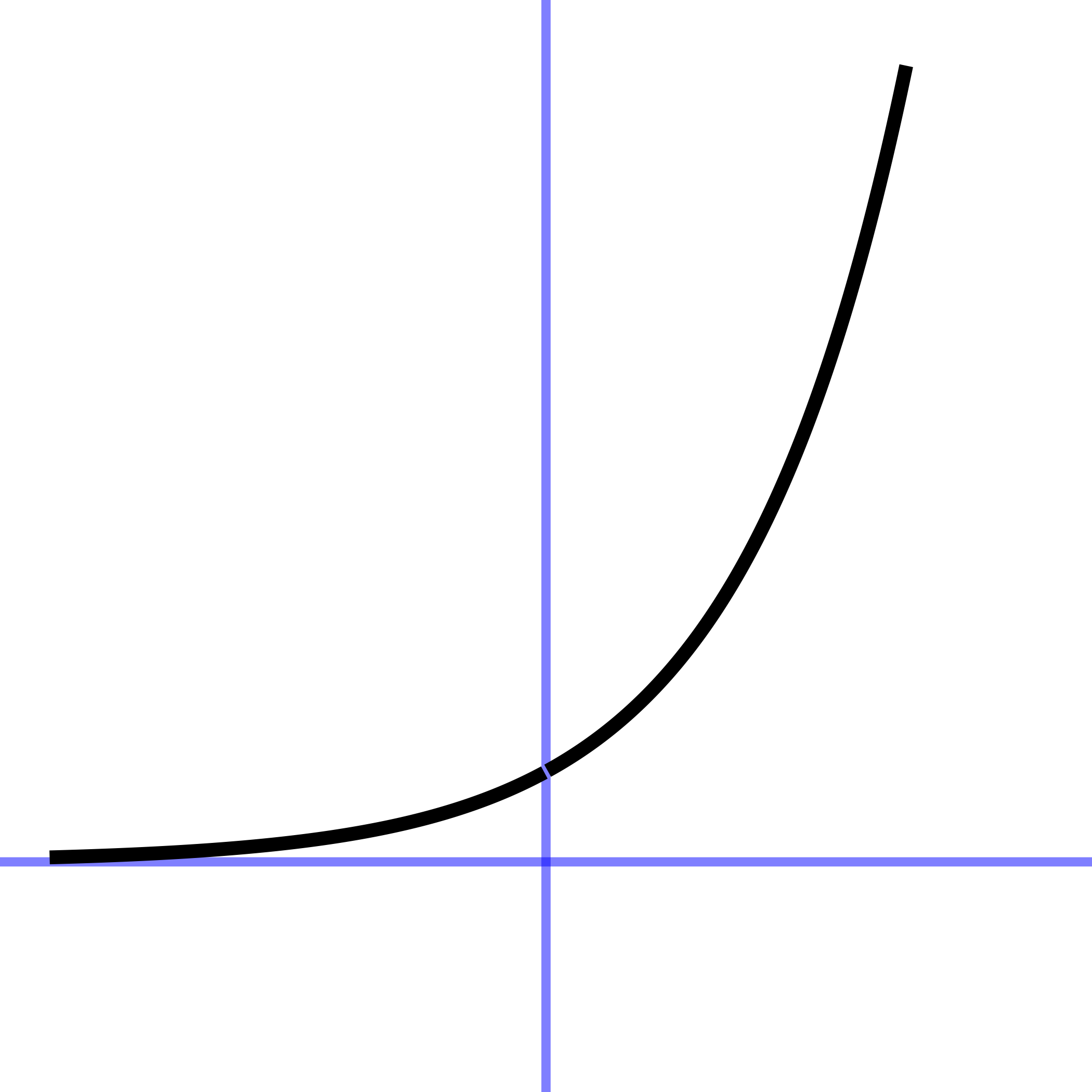

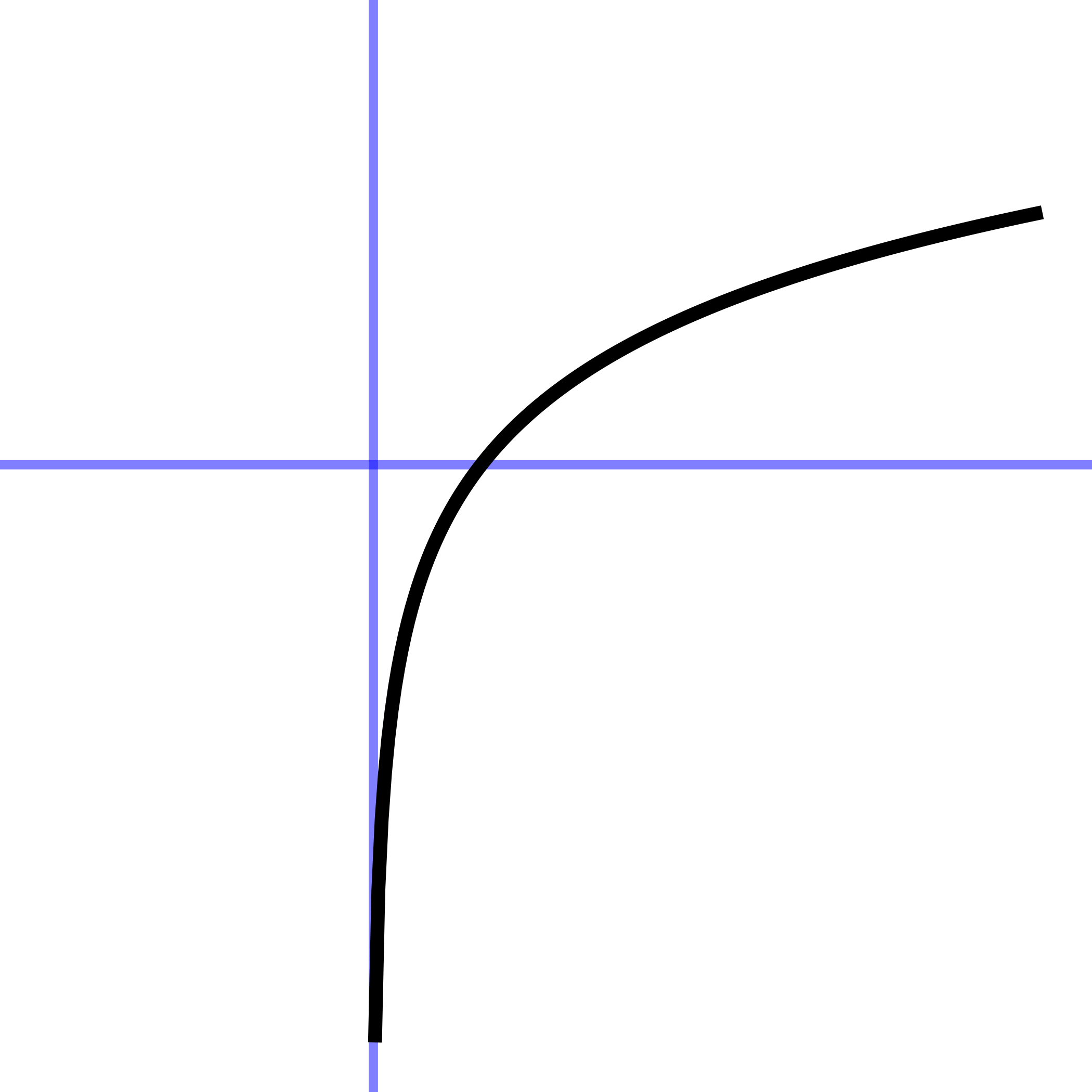

In addition to the names of the pattern-book functions, you should be able to draw their shapes easily. Figure fig-pattern-book-shapes provides a handy guide.

| const | identity | square | recip | gaussian | sigmoid | sinusoid | exp | log |
|---|---|---|---|---|---|---|---|---|

These pattern-book functions are widely applicable. But nobody would confuse the pictures in a pattern book with costumes that are ready for wear. Each pattern must be tailored to fit the actor and customized to fit the theme, setting, and action of the story. Chapter sec-parameters shows how this is done.

Each of the pattern-book functions takes a number as input and produces a number as output. Naturally, a proper graph of a pattern-book function should display specific numerical values for input and output. This section shows such quantitative graphs.

When used in modeling, however, we need to modify the functions so that their inputs and outputs are quantities. Chapters sec-parameters and sec-assembling present the main techniques for doing this. This allows us to use the shape of a pattern-book function while customizing the quantitative input-output relationship to serve the situation at hand. In practice, it suffices to memorize just a couple of input/output pairs for each function.

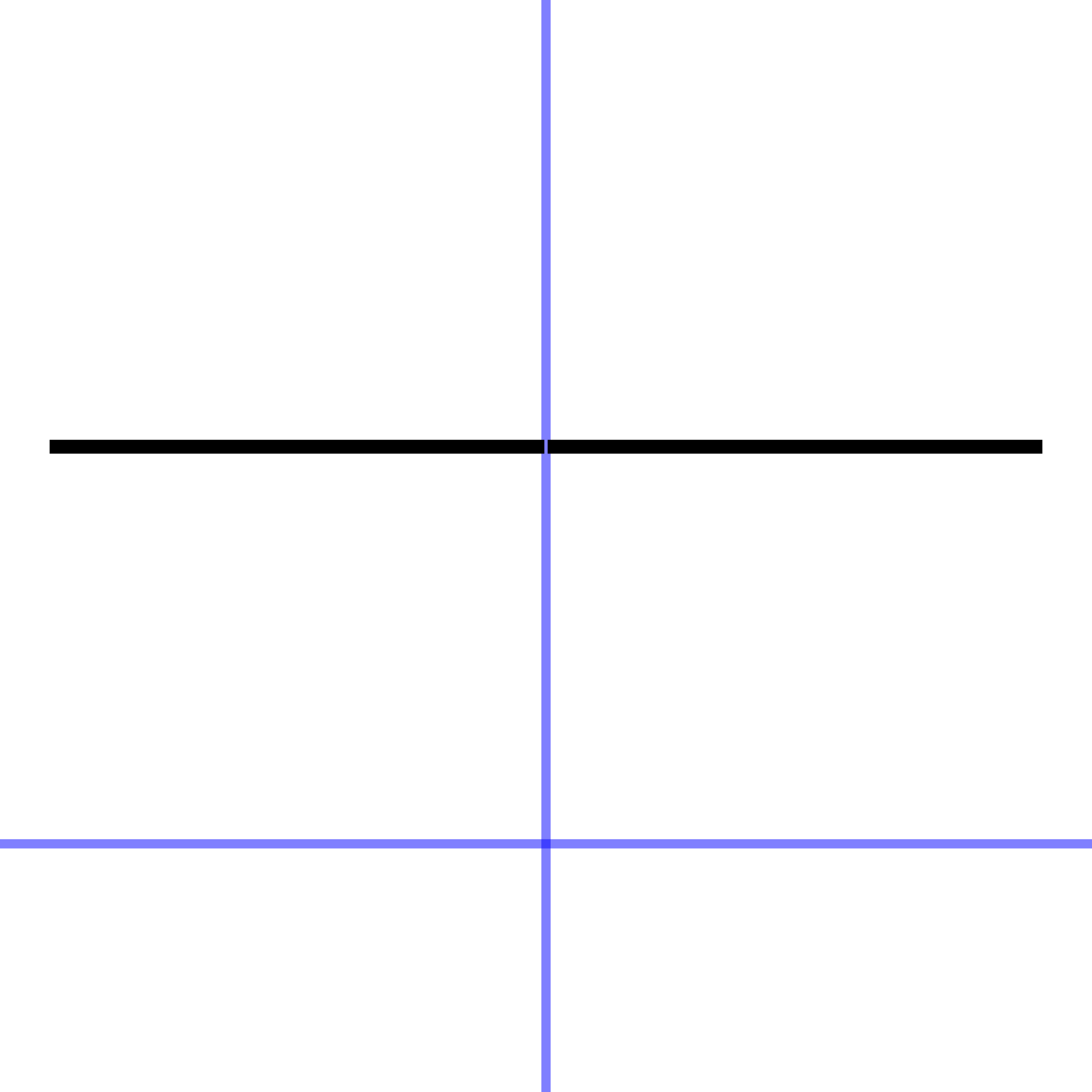

The constant function is so simple that you may be inclined to deny that it is a function at all.

The output of the constant function is always the number 1, regardless of the input. Its graph is therefore a horizontal line.

You may wonder why we take the trouble to define a function whose output is always the same. After all, in a formula it shows up simply as the number 1, not looking like a function at all. But when we start to combine functions (Chapter sec-assembling), the constant function will almost always have a role to play.

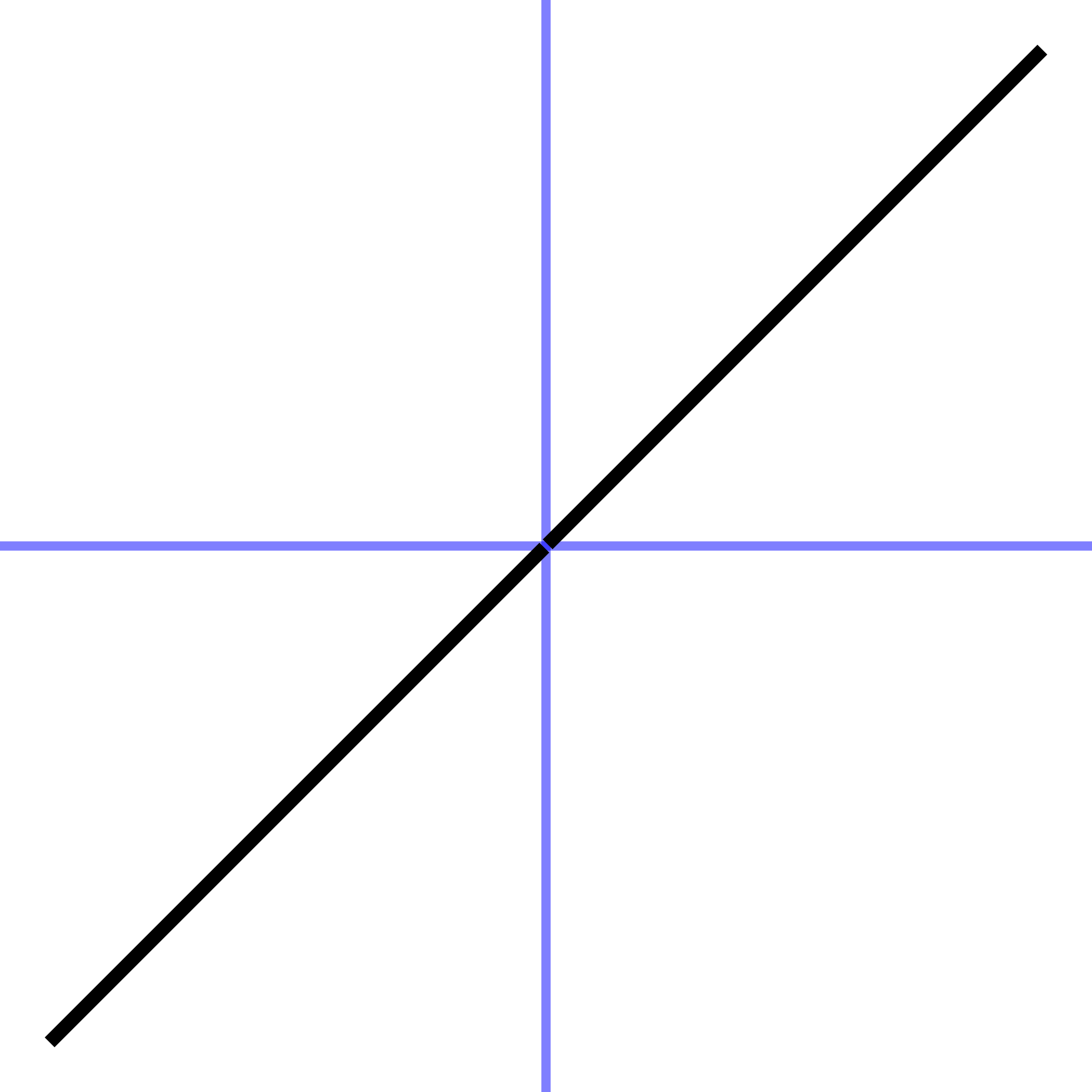

The identity function is also simple. Whatever the input is becomes the output; that is, the output is identical to the input. The graph of the identity function is a straight line going through \((0,0)\) with slope 1.

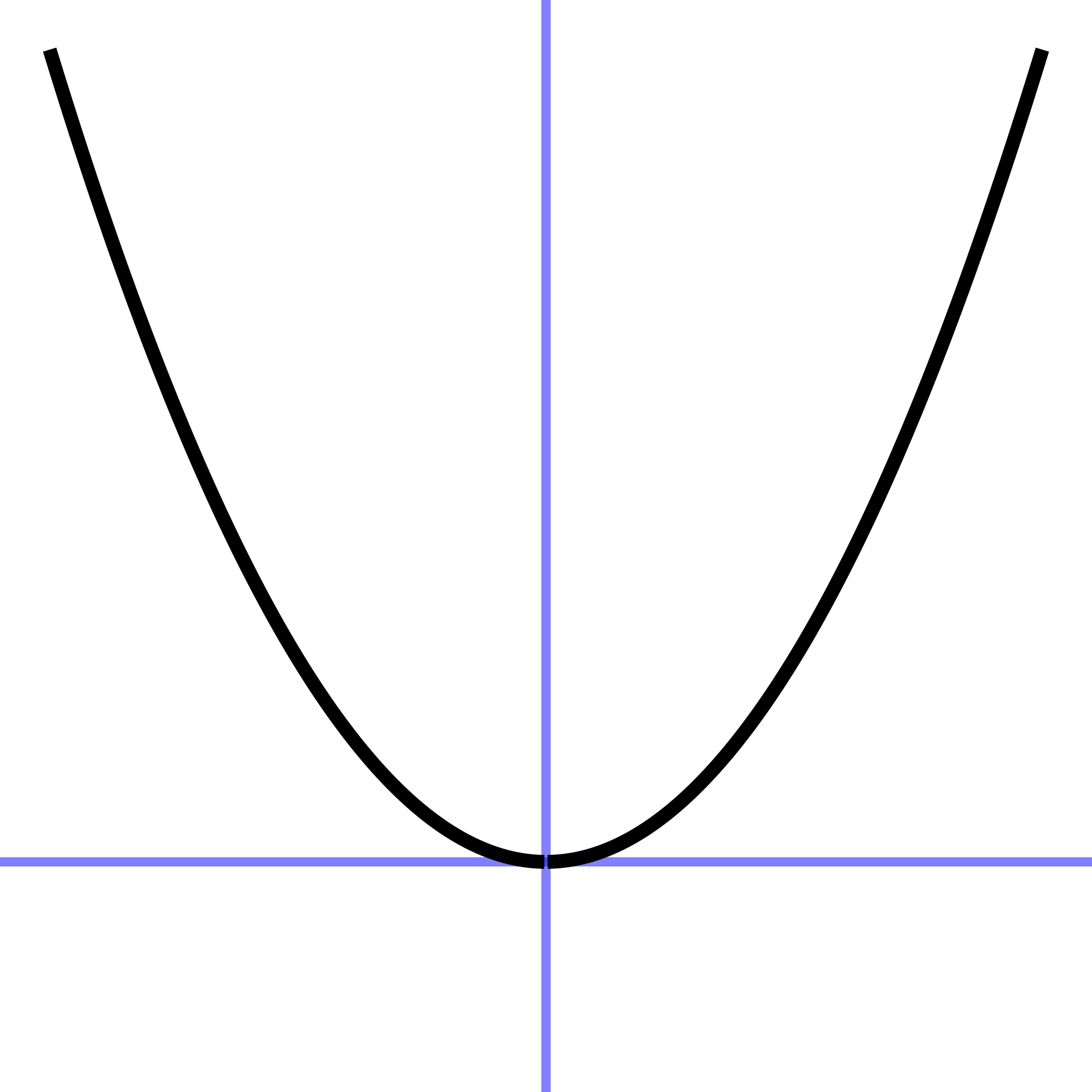

The remaining pattern-book functions all have curved shapes.

The square function has output zero when the input is zero. As the input gets farther from zero, the output grows, producing the familiar shape of a parabola. It is a member of a large family of functions called the “power-law” functions. This family is described more extensively in sec-power-law-family.

The reciprocal function has a large output when the input is near zero (either to the left or the right) and an output near zero when the input is large (either positive or negative). Like the square function, the reciprocal is a member of a large family of functions called the “power-law” functions.

The Gaussian function is shaped like a mountain or, in many descriptions, like the outline of a bell. As the input becomes larger—in either the positive or negative directions—the output gets closer and closer to zero but never touches zero exactly.

The Gaussian function shows up so often in modeling that it has another widely used name: the normal function. But “normal” has additional meanings in mathematics, so we will not use that name in this book.

The sigmoid function models smooth transitions. For large negative inputs, the output is (very nearly) zero. For large positive inputs, the output is (very nearly) one. The sigmoid is closely related to the Gaussian. As you progress in calculus, the relationship will become evident.
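The verbal descriptions of the Gaussian and sigmoid can be checked numerically. The following is a minimal sketch in base R; the sample inputs are arbitrary choices for illustration.

```r
# Sample inputs spanning large negative to large positive values.
inputs <- c(-5, -1, 0, 1, 5)

gaussian <- dnorm(inputs)  # bell shape: largest at 0, tiny in both tails
sigmoid  <- pnorm(inputs)  # smooth transition from near 0 to near 1

print(data.frame(inputs,
                 gaussian = signif(gaussian, 3),
                 sigmoid  = signif(sigmoid, 3)))
```

The Gaussian column is symmetric around the input 0 and stays positive, while the sigmoid column climbs from very nearly zero to very nearly one, passing through 0.5 at input 0.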

A sinusoid is a periodic function, meaning that the pattern repeats itself identically from left to right in the graph. It is also very smooth, both visually and in the mathematical sense to be introduced in sec-describing-functions.

If you studied trigonometry, you may be used to the sine of an angle in the context of a right triangle. That is the historical origin of the idea. For our purposes, think of the sinusoid just as a function that takes an input and returns an output. Starting in the early 1800s, oscillatory functions found a huge area of application in representing phenomena such as the diffusion of heat through solid materials and, eventually, radio communications.

In trigonometry, the sine has the cosine as a partner. But the two functions \(\sin()\) and \(\cos()\) are so closely connected that we will not often need to make the distinction, calling both of them simply “sinusoids.”
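One way to see how closely the two are connected: the cosine is the sine shifted along the input axis by \(\pi/2\), and both repeat every \(2\pi\). The sketch below checks this numerically in base R; the grid of inputs and the tolerance are arbitrary choices for illustration.

```r
# A grid of inputs covering two full cycles.
x <- seq(-2 * pi, 2 * pi, length.out = 9)

# The cosine is the sine shifted by pi/2 along the input axis.
shift_gap <- max(abs(cos(x) - sin(x + pi / 2)))

# Periodicity: shifting the input by 2*pi leaves the output unchanged.
period_gap <- max(abs(sin(x + 2 * pi) - sin(x)))

# Both gaps are zero up to floating-point rounding.
c(shift_gap = shift_gap, period_gap = period_gap) < 1e-12
```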

5.1 Exponential and logarithm functions

It’s a common English phrase to say that something is “growing exponentially.” For instance, at the outbreak of an epidemic there are few cases and slow growth in the number of cases. But as the number of cases increases, the growth gets faster.

The exponential function has important applications throughout science, technology, and the economy. For large negative inputs, the value is very close to zero in much the same way as for the Gaussian or sigmoid functions. But the output increases faster and faster as the input gets bigger. Note that the output of the exponential function is never negative for any input.

There is another way to think about exponential growth that makes it easier to comprehend. Again, imagine an epidemic in its early stages. Suppose there are 20 cases. Time goes by and eventually the number of cases reaches 40, double the initial value. Suppose it takes one week for this doubling. That is, the doubling time is one week.

Now a second week goes by. If the growth were linear, adding the same 20 cases each week, the case number after the second week would be 60. For exponential growth, the output doubles again during the second week. So, there will be 80 cases after two weeks.

At the end of the third week the output of the exponential will double again, to 160 cases. Fourth week: 320 cases. Fifth week: 640 cases. To summarize, Table tbl-doubling-epi shows the number of cases as a function of weeks elapsed.

| Time (weeks) | Number of cases |
|---|---|
| 0 (initial time) | 20 |
| 1 | 40 |
| 2 | 80 |
| 3 | 160 |
| 4 | 320 |
| 5 | 640 |
| 6 | 1280 |
| … | … |
| 10 | 20,480 |
| … | … |
| 15 | 655,360 |
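The doubling table can be reproduced with a one-line formula: 20 cases multiplied by 2 once per elapsed week. The sketch below uses base R; the variable names, and the linear comparison column, are illustrative additions rather than part of the text.

```r
initial_cases <- 20
weeks <- c(0:6, 10, 15)

# Exponential growth: one doubling per week.
exponential_cases <- initial_cases * 2^weeks

# For contrast: linear growth that adds 20 cases per week.
linear_cases <- initial_cases + 20 * weeks

print(data.frame(weeks, exponential_cases, linear_cases))
```

The two columns agree at weeks 0 and 1 and then separate quickly, which is the difference between doubling each week and adding a fixed amount each week.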

Note: You might speculate about where the epidemic will go in the long run. Most things that grow exponentially do so only for a short time. After that, other things come into play that limit or reverse the growth. So, over long periods of time the pattern can be better described as gaussian or sigmoidal.

I suspect that most people are comfortable with the idea of exponential growth. But many people, even highly educated professionals, react with fear to the word “logarithmic.” [This fear might arise because logarithms are typically taught in high school in a heavily algebraic way, removed from any meaningful contemporary context. Logarithms were invented around 1600 to facilitate by-hand mathematical operations like multiplication and exponentiation. Nowadays, we have computers to perform such calculations.] sec-magnitude argues that logarithms are an important tool for understanding quantities that can range from very, very small to very, very large. Table tbl-doubling-epi gives an example: the left column is, up to a change of units, a logarithm of the right column, since each additional week corresponds to one more doubling of the case count.

The logarithmic function is defined only for positive inputs. As the input increases from just above zero, the output grows constantly, but at a slower and slower rate. It never levels out.
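To make the link between the two columns of the doubling table concrete, the sketch below recovers the week numbers from the case counts with a base-2 logarithm. Dividing by the initial 20 cases first is the rescaling assumed here; the variable names are illustrative.

```r
# Case counts from the doubling table.
cases <- c(20, 40, 80, 160, 320, 640, 1280, 20480, 655360)

# Number of doublings since the start: the base-2 logarithm of the
# growth factor (current cases divided by the initial 20 cases).
weeks_recovered <- log2(cases / 20)
print(weeks_recovered)
```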

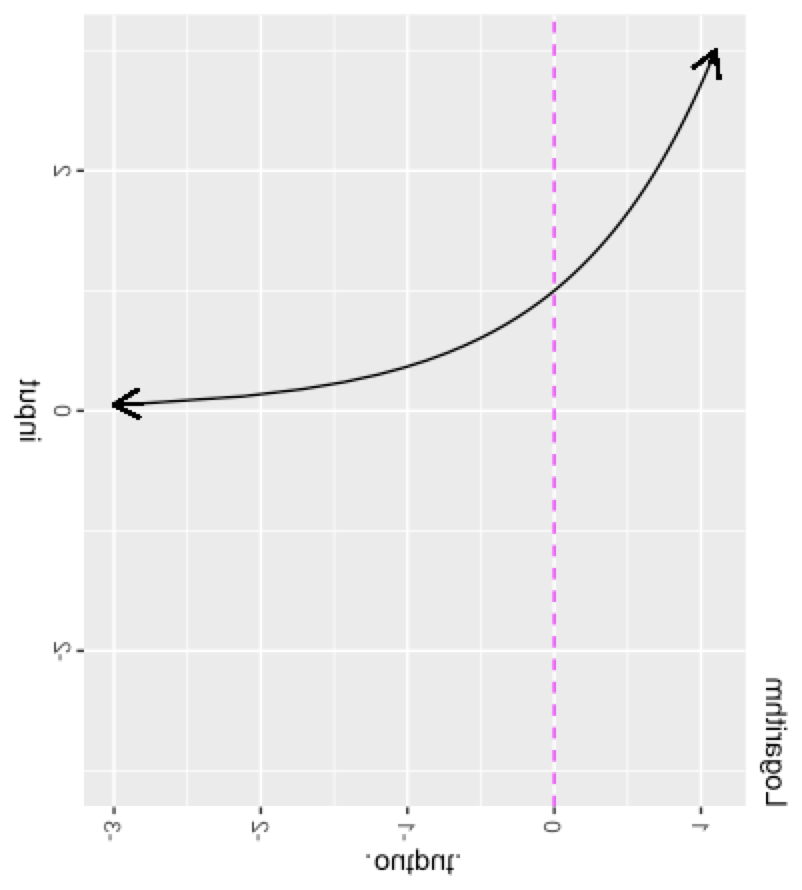

The exponential and logarithmic functions are intimate companions. You can see the relationship by taking the graph of the logarithm, and rotating it 90 degrees, then flipping left for right as in Figure fig-log-flipped. (Note in Figure fig-log-flipped that the graph is shown as if it were printed on a transparency which we are looking at from the back.)
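Rotating and flipping the graph amounts to swapping the roles of input and output, so each function undoes the other. A minimal numerical check in base R, with arbitrarily chosen sample values:

```r
# Positive inputs, since the logarithm is defined only for x > 0.
x <- c(0.1, 1, 2, 50)
round_trip_x <- exp(log(x))  # taking the log, then exponentiating

# Any real input works for the exponential.
y <- c(-3, 0, 1.5)
round_trip_y <- log(exp(y))  # exponentiating, then taking the log

# Each round trip returns the original values (up to rounding).
print(round_trip_x)
print(round_trip_y)
```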

The name “logarithm” is anything but descriptive. The name was coined by the inventor, John Napier (1550-1617), to emphasize the original purpose of his invention: to simplify the work of multiplication and exponentiation. The name comes from the Greek words logos, meaning “reasoning” or “reckoning,” and arithmos, meaning “number.” A catchy marketing term for the new invention, at least for those who speak Greek!

Although invented for the practical work of numerical calculation, the logarithm function has become central to mathematical theory as well as modern disciplines such as thermodynamics and information theory. The logarithm is key to the measurement of information and magnitude. As you know, there are units of information used particularly to describe the information storage capacity of computers: bits, bytes, megabytes, gigabytes, and so on. Very much in the way that there are different units for length (cm, meter, kilometer, inch, mile, …), there are different units for information and magnitude. For almost everything that is measured, we speak of the “units” of measurement. For logarithms, instead of “units,” by tradition another word is used: the base of the logarithm. The most common units outside of theoretical mathematics are base-2 (“bit”) and base-10 (“decade”). But the unit that is most convenient in mathematical notation is “base e,” where \(e = 2.71828182845905...\). This is genuinely a good choice for the units of the logarithm, but that is hardly obvious to anyone encountering it for the first time. To make the choice more palatable, it is marketed as the “base of the natural logarithm.” In this book, we will be using this natural logarithm as our official pattern-book logarithm.
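The different bases behave like different units for the same measurement: converting between them is a matter of multiplying by a fixed factor. The sketch below illustrates this with base R's `log()`, `log2()`, and `log10()`; the input 1024 is an arbitrary choice.

```r
x <- 1024

natural <- log(x)    # base e, the pattern-book logarithm
bits    <- log2(x)   # base 2: 1024 is 2 multiplied by itself 10 times
decades <- log10(x)  # base 10

# Converting units: divide the natural log by the natural log of the base.
bits_from_natural    <- natural / log(2)
decades_from_natural <- natural / log(10)

print(c(natural = natural, bits = bits, decades = decades))
print(c(bits_from_natural = bits_from_natural,
        decades_from_natural = decades_from_natural))
```

R's `log()` also accepts a `base` argument, so `log(x, base = 2)` is another way to write `log2(x)`.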

5.2 The power-law family (optional)

Four of the pattern-book functions—\(1\), \(1/x\), \(x\), \(x^2\)— belong to an infinite family called the power-law functions. Some other examples of power-law functions are \(x^3, x^4, \ldots\) as well as \(x^{1/2}\) (also written \(\sqrt{x}\)), \(x^{1.36}\), and so on. Some of these also have special (albeit less frequently used) names, but all of the power-law functions can be written as \(x^p\), where \(x\) is the input and \(p\) is a number.

You have been using power-law functions from early in your math and science education. Some examples:¹

| Setting | Function formula | Exponent |
|---|---|---|
| Circumference of a circle | \(C(r) = 2 \pi r\) | 1 |
| Area of a circle | \(A(r) = \pi r^2\) | 2 |
| Volume of a sphere | \(V(r) = \frac{4}{3} \pi r^3\) | 3 |
| Distance traveled by a falling object | \(d(t) = \frac{1}{2} g t^2\) | 2 |
| Gas pressure versus volume | \(P(V) = \frac{n R T}{V}\) | \(-1\) |
| … perhaps less familiar … | | |
| Distance traveled by a diffusing gas | \(X(t) = D \sqrt{t}\) | \(1/2\) |
| Animal lifespan (in the wild) versus body mass | \(L(M) = a M^{0.25}\) | 0.25 |
| Blood flow versus body mass | \(F(M) = b M^{0.75}\) | 0.75 |

One reason why power-law functions are so important in science has to do with the logic of physical quantities such as length, mass, time, area, volume, force, power, and so on. We introduced this topic in sec-quantity-function-space and will return to it in more detail in sec-dimensions-and-units.

Within the power-law family, it is helpful to know and be able to distinguish between several groups:

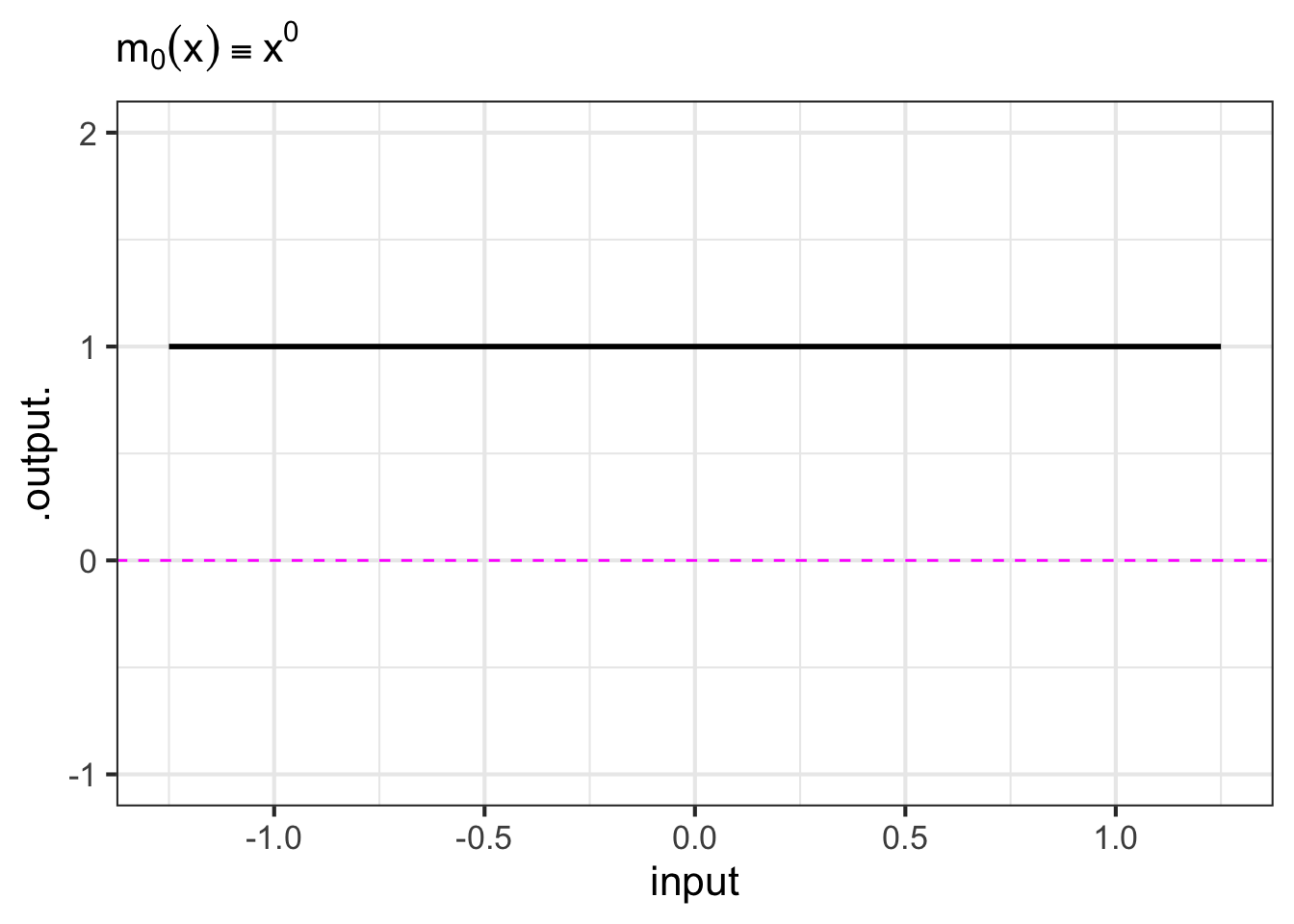

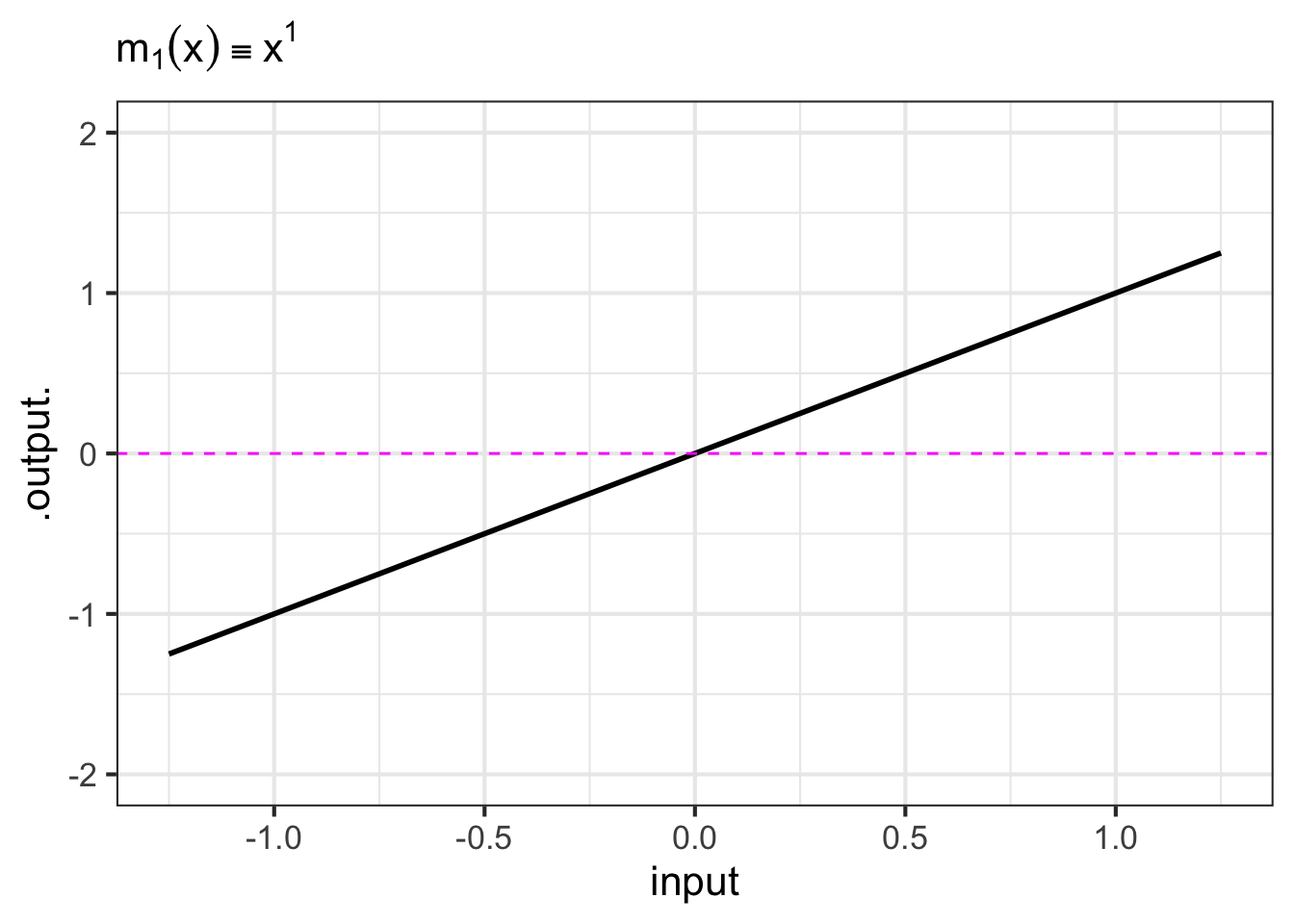

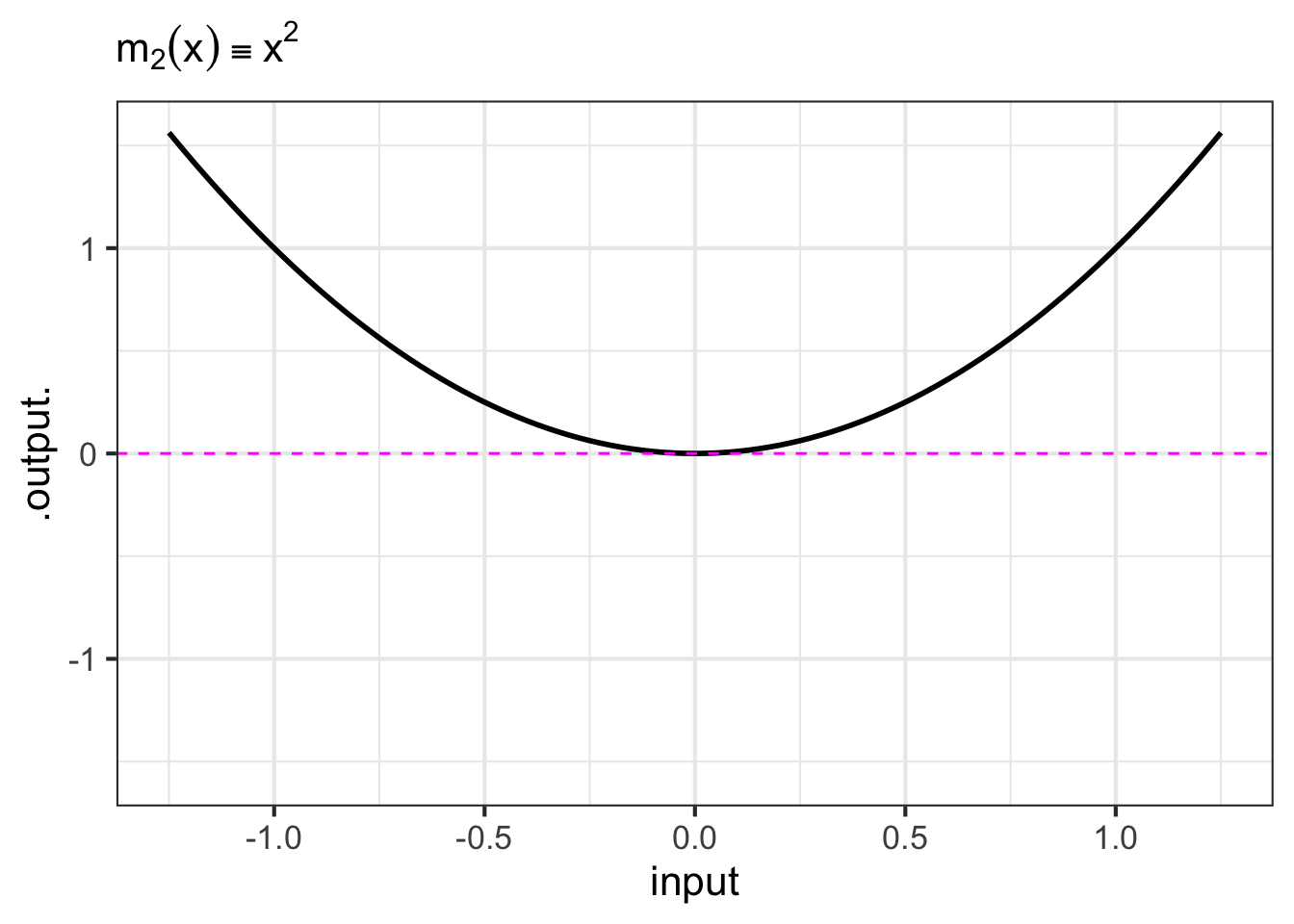

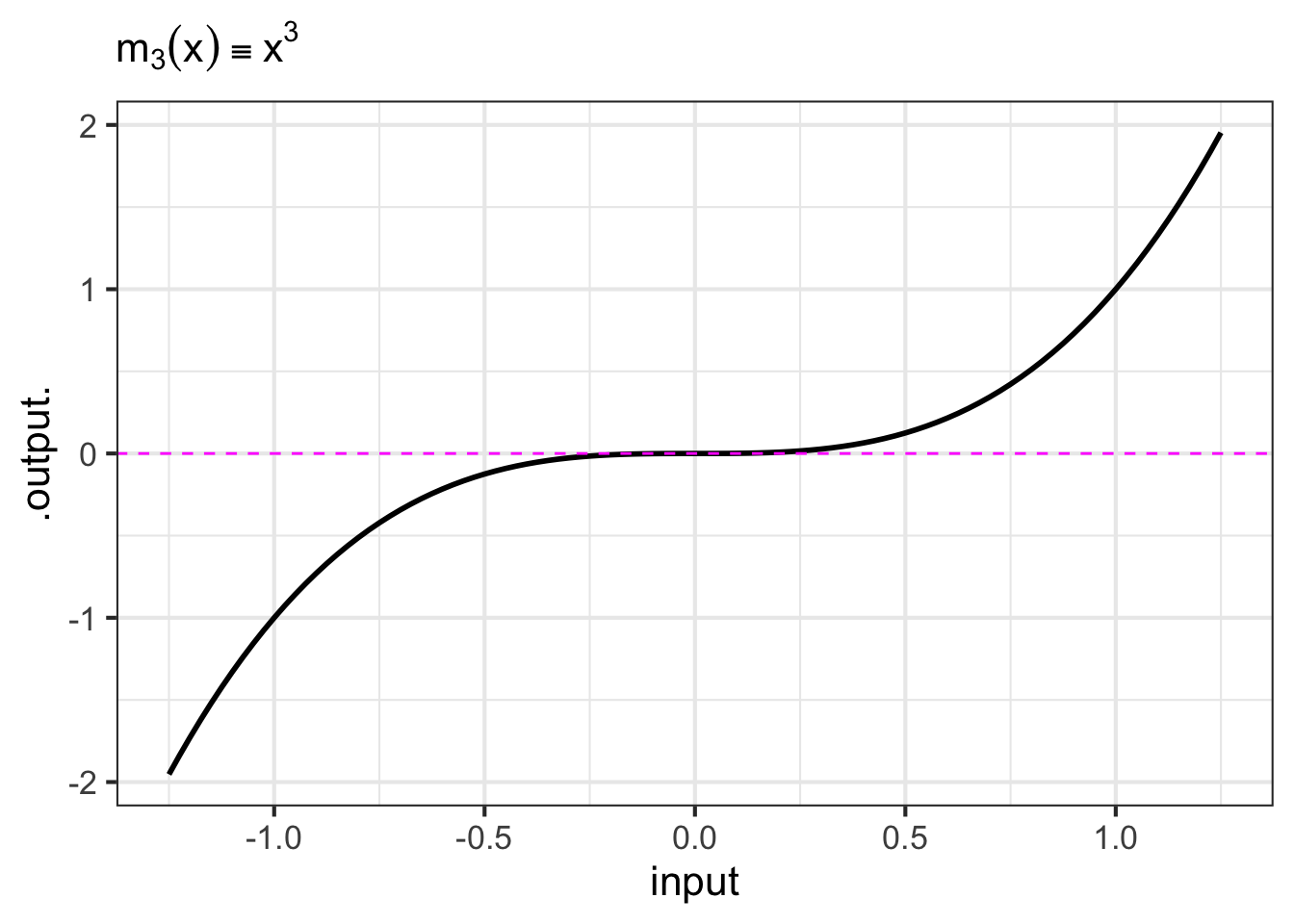

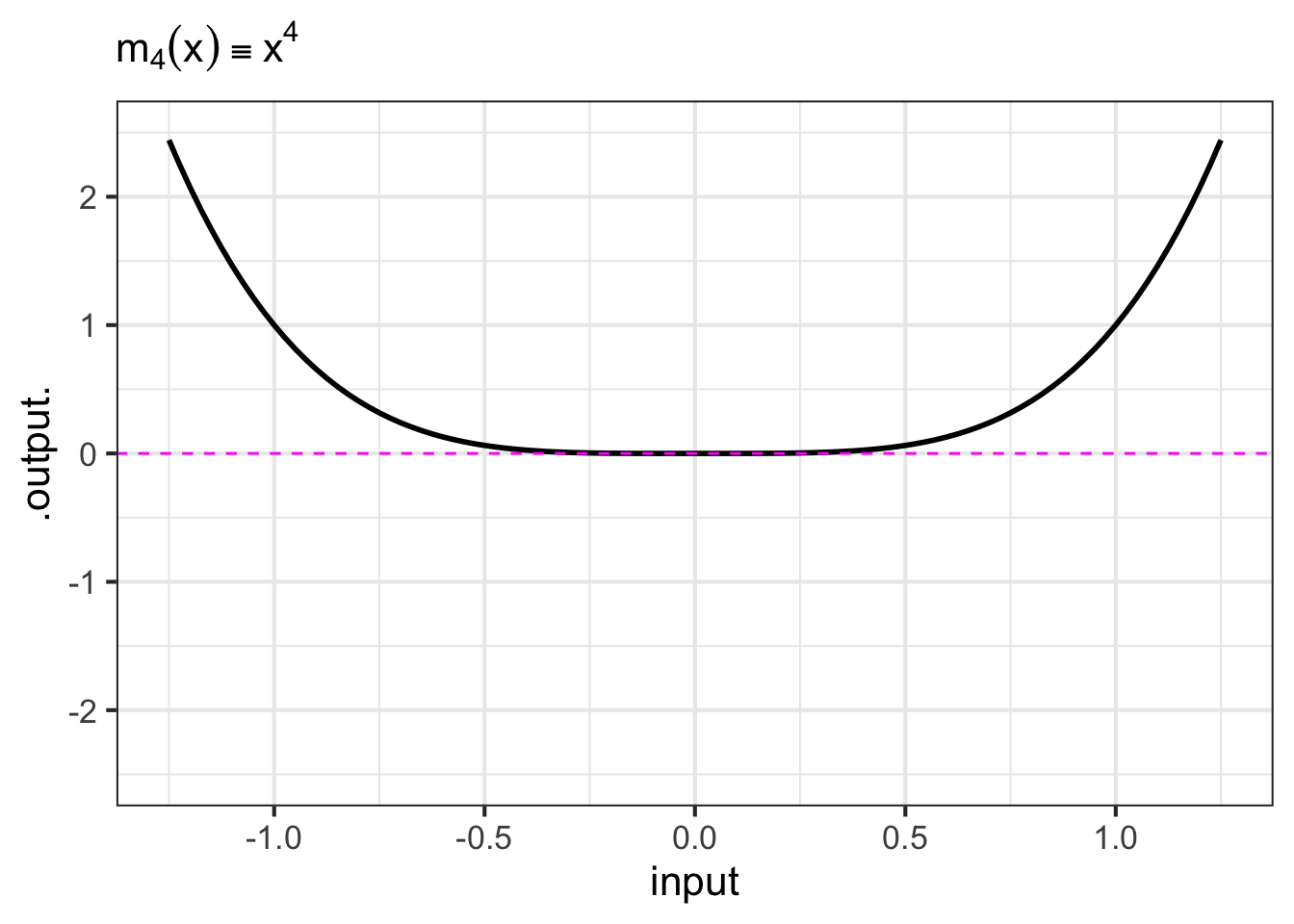

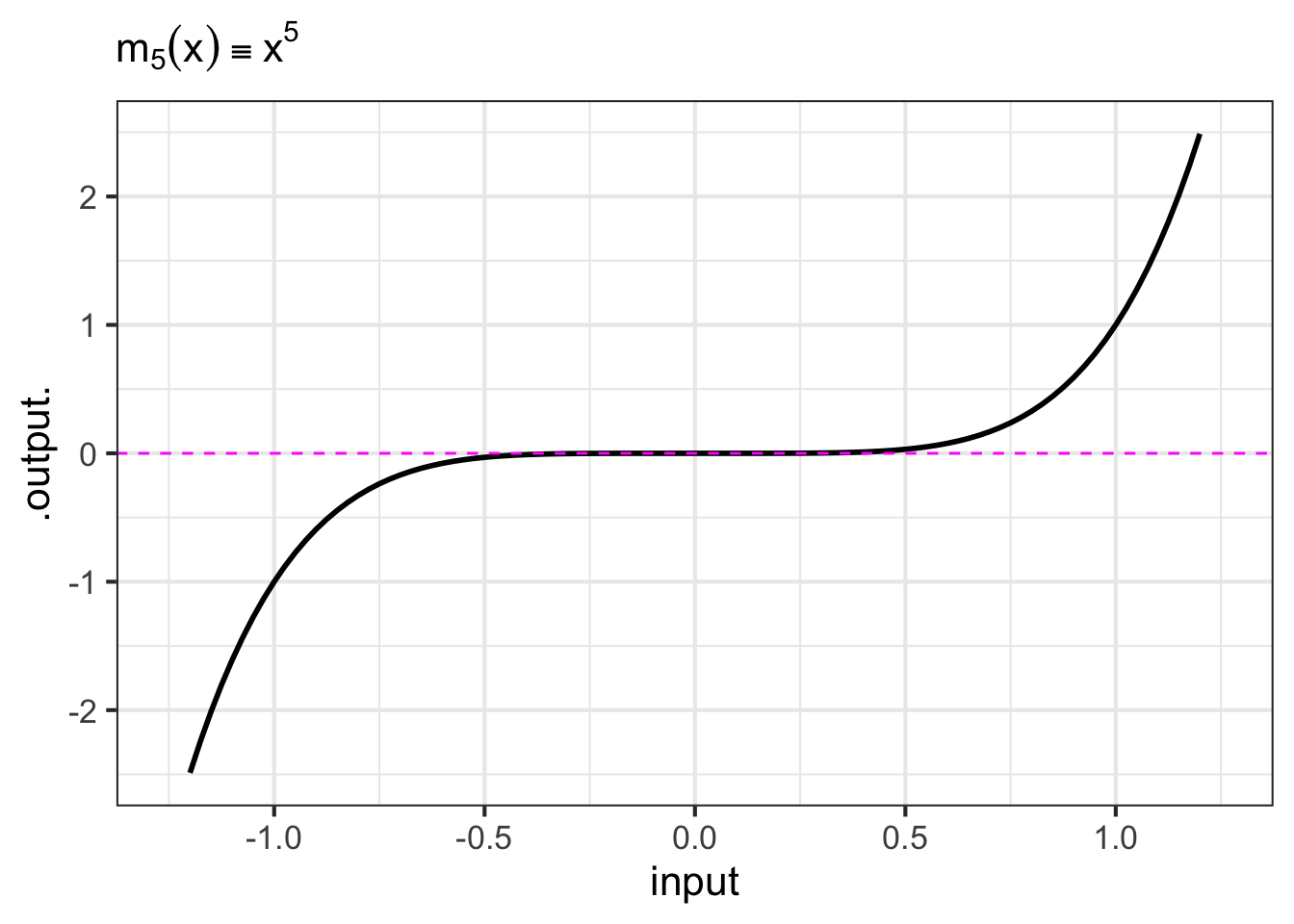

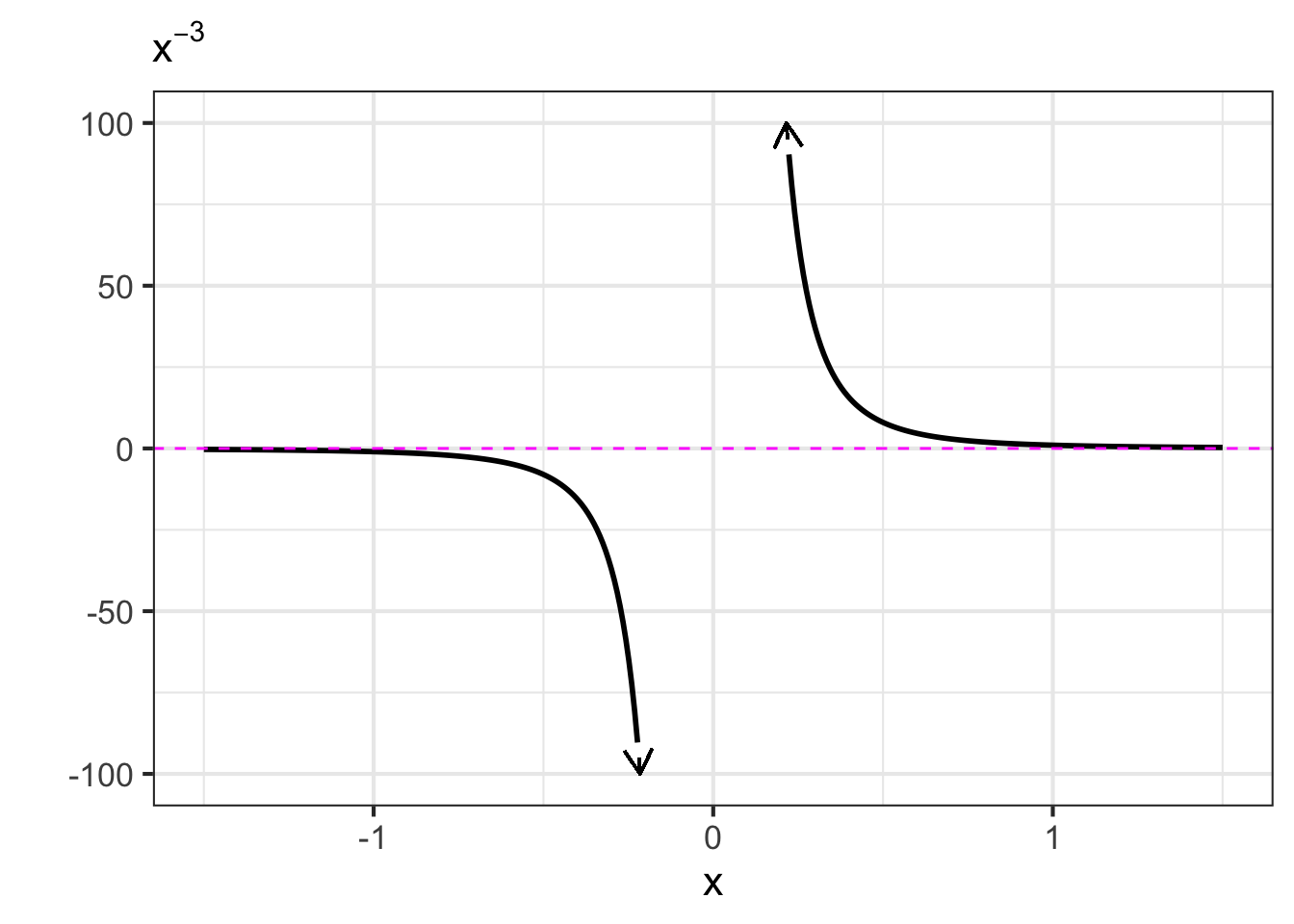

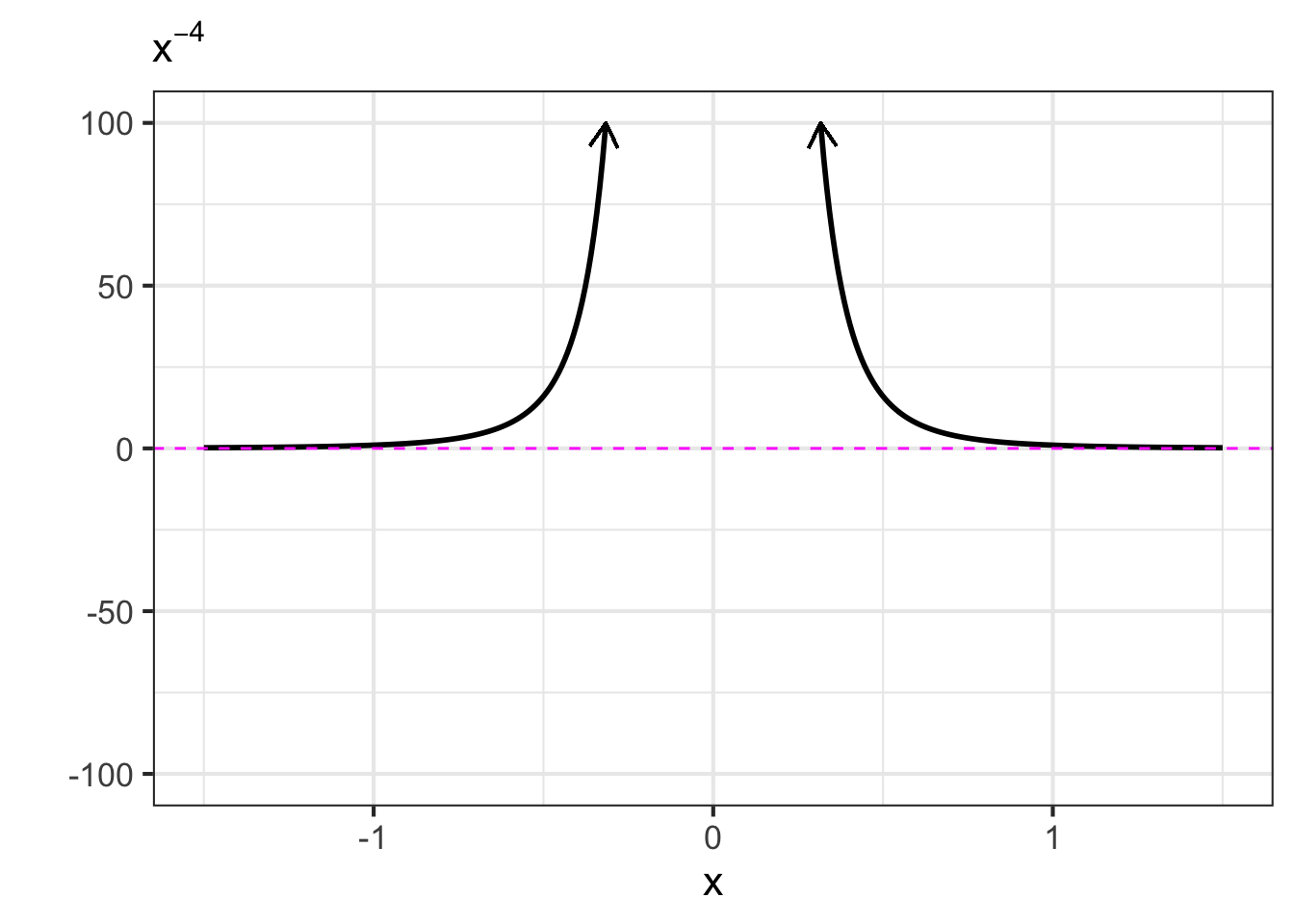

- The monomials. These are power-law functions such as \(m_0(x) \equiv x^0\), \(m_1(x) \equiv x^1\), \(m_2(x) \equiv x^2\), \(\ldots\), \(m_p(x) \equiv x^p\), \(\ldots\), where \(p\) is a whole number (i.e., a non-negative integer). Of course, \(m_0()\) is the same as the constant function, since \(x^0 = 1\). Likewise, \(m_1(x)\) is the same as the identity function since \(x^1 = x\). As for the rest, they have just two general shapes: both arms up for even values of \(p\) (as in \(x^2\), a parabola); one arm up and the other down for odd values of \(p\) (as in \(x^3\), a cubic). Indeed, you can see in Figure fig-monomial-graphs that \(x^4\) has a similar shape to \(x^2\) and that \(x^5\) is similar in shape to \(x^3\). For this reason, high-order monomials are rarely needed in practice.

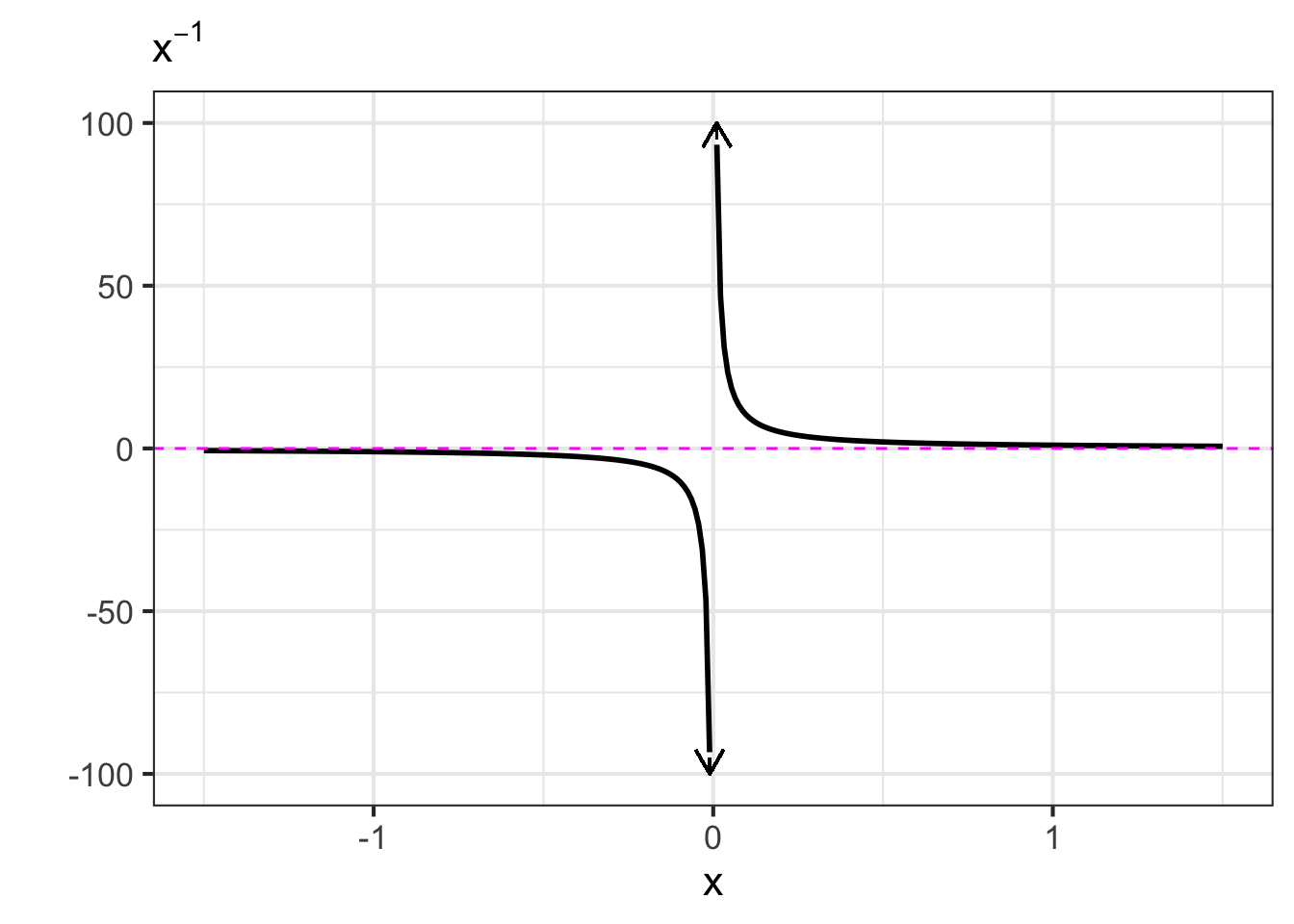

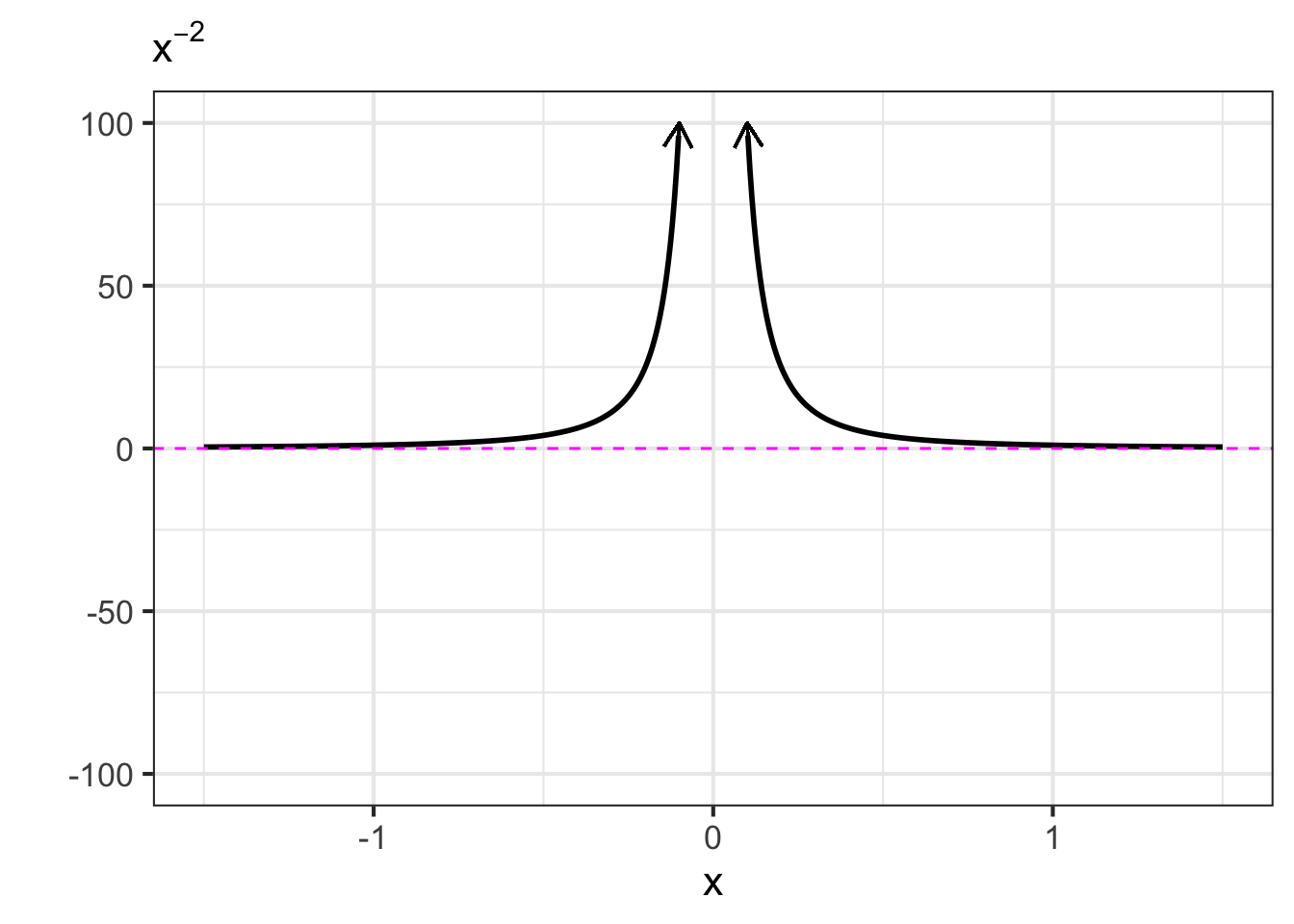

- The negative powers. These are power-law functions where \(p<0\), such as \(f(x) \equiv x^{-1}\), \(g(x) \equiv x^{-2}\), \(h(x) \equiv x^{-1.5}\). For negative powers, the size of the output varies inversely with the size of the input. In other words, when the input is large (far from zero) the output is small, and when the input is small (close to zero), the output is very large. This behavior happens because a negative exponent like \(x^{-2}\) can be rewritten as \(\frac{1}{x^2}\); the power of the input moves to the denominator, hence the term “inverse.” Strictly speaking, only \(x^{-1}\) is “inversely proportional” to \(x\) itself; \(x^{-2}\) is inversely proportional to \(x^2\).

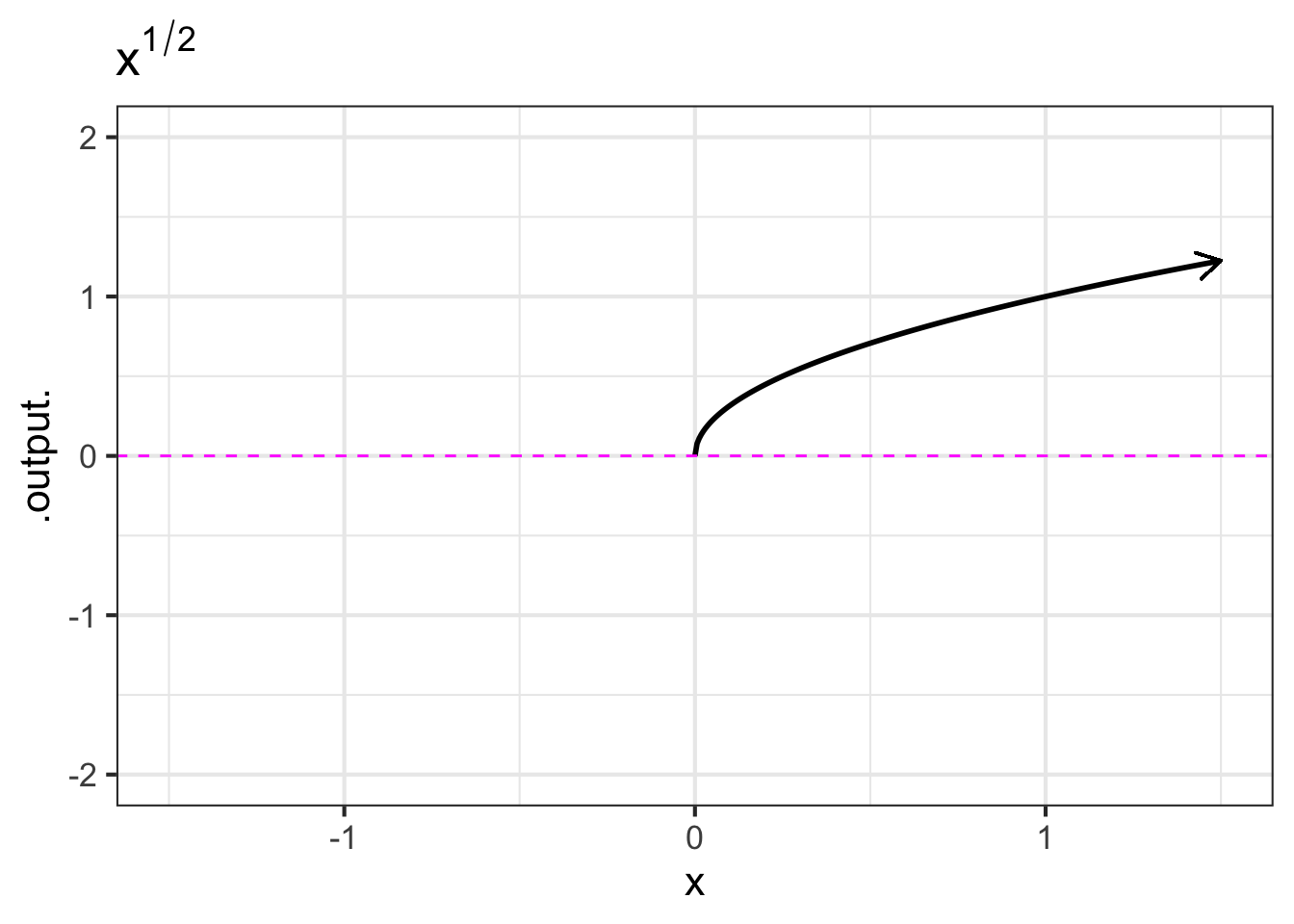

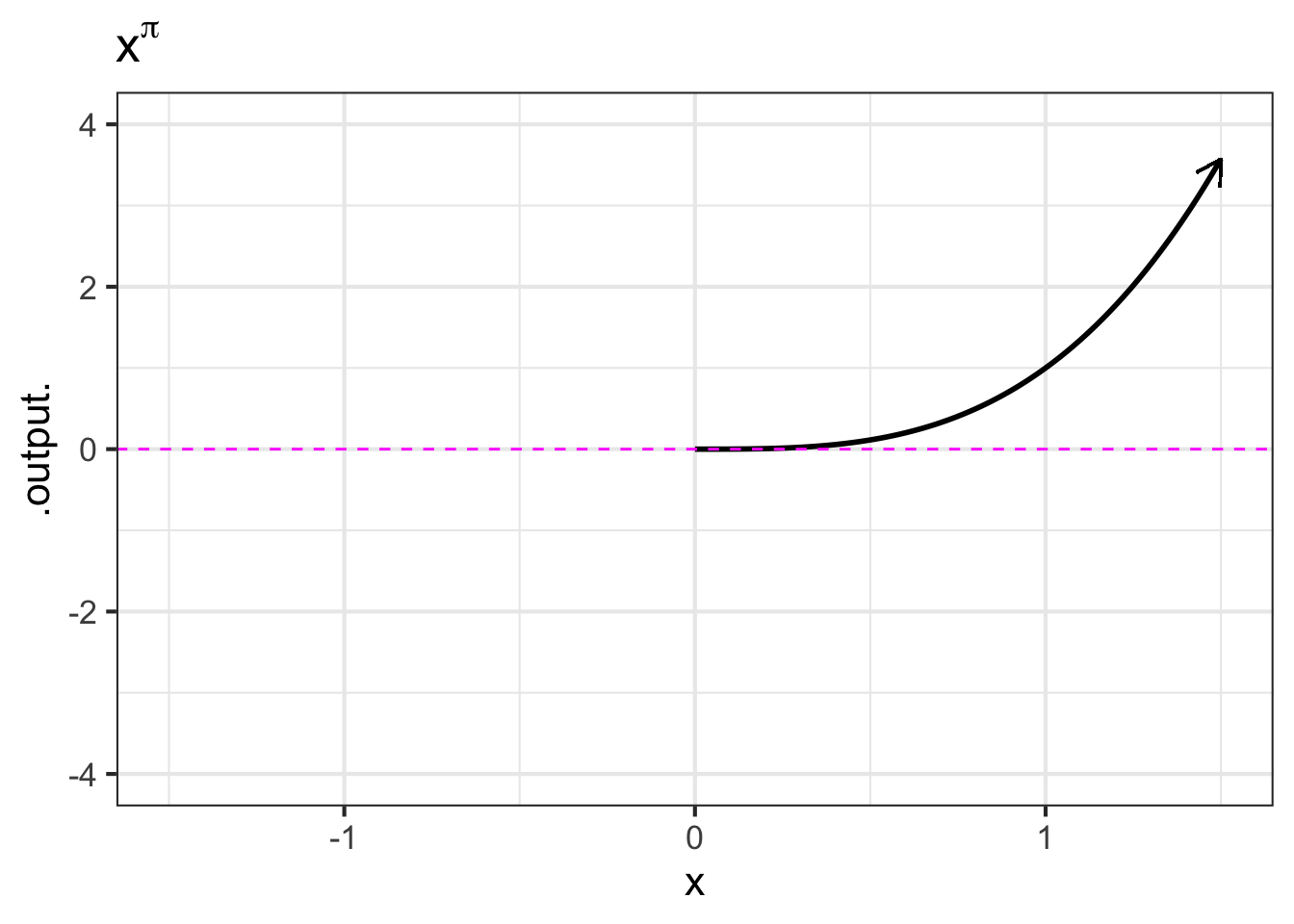

- The non-integer powers, e.g. \(f(x) = \sqrt{x}\), \(g(x) = x^\pi\), and so on. When \(p\) is either a fraction or an irrational number (like \(\pi\)), the real-valued power-law function \(x^p\) can only take non-negative numbers as input. In other words, the domain of \(x^p\) is \(0\) to \(\infty\) when \(p\) is not an integer. You have likely already encountered this domain restriction when using the power law with \(p=\frac{1}{2}\) since \(f(x)\equiv x^{1/2}=\sqrt{x}\), and the square root of a negative number is not a real number. You may have heard about the imaginary numbers that allow you to take the square root of a negative number, but for the moment, you only need to understand that when working with real-valued power-law functions with non-integer exponents, the input must be non-negative. (The story is a bit more complicated since, algebraically, rational exponents like \(1/3\) or \(1/5\) with an odd-valued denominator can be applied to negative numbers. Computer arithmetic, however, does not recognize these exceptions.)
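The domain restriction for non-integer powers is easy to observe in R. The sketch below is illustrative only: the inputs are arbitrary, and `real_cube_root()` is a hypothetical helper written for this example, not a function from the text or from base R.

```r
# Integer exponents accept negative inputs.
(-8)^2     # 64
(-8)^(-1)  # -0.125

# Non-integer exponents applied to negative inputs give NaN ("not a number").
neg_cube_root <- (-8)^(1/3)
neg_sqrt      <- (-4)^0.5
is.nan(c(neg_cube_root, neg_sqrt))

# Note the parentheses around -8. Without them, R applies the power
# first and the minus sign afterward: -8^(1/3) means -(8^(1/3)).
-8^(1/3)

# Hypothetical helper: a real-valued cube root that handles the
# odd-denominator exception by working with the sign and size separately.
real_cube_root <- function(x) sign(x) * abs(x)^(1/3)
real_cube_root(-8)
```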

When a function like \(\sqrt[3]{x}\) is written as \(x^{1/3}\), make sure to enclose the exponent in grouping parentheses when translating to computer notation: x^(1/3). Without the parentheses, x^1/3 is read as \((x^1)/3\), that is, one-third of \(x\). Similarly, later in the book you will encounter power-law functions where the exponent is written as a formula. Particularly common will be power-law functions written \(x^{n-1}\) or \(x^{n+1}\). In translating these to computer notation, make sure to put the formula within grouping parentheses, for instance x^(n-1) or x^(n+1).
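The effect of the grouping parentheses can be seen by evaluating both versions side by side. This is a small sketch in base R; the values of `x` and `n` are arbitrary.

```r
x <- 8
n <- 3

with_parens    <- x^(1/3)  # cube root of 8
without_parens <- x^1/3    # read as (x^1)/3, one-third of 8

exponent_grouped   <- x^(n - 1)  # 8 squared
exponent_ungrouped <- x^n - 1    # 8 cubed, then subtract 1

print(c(with_parens = with_parens, without_parens = without_parens))
print(c(exponent_grouped = exponent_grouped,
        exponent_ungrouped = exponent_ungrouped))
```

The mismatch in each pair arises because `^` is applied before division and subtraction unless parentheses say otherwise.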

5.3 Domains of pattern-book functions

Each of our basic modeling functions, with two exceptions, has a domain that is the entire number line \(-\infty < x < \infty\). No matter how big or small the value of the input is, the function has an output. Such functions are particularly nice since we never have to worry about the input going out of bounds.

The two exceptions are:

- the logarithm function, which is defined only for \(0 < x\).

- some of the power-law functions: \(x^p\).

    - When \(p\) is negative, the output of the function is undefined when \(x=0\). You can see why with a simple example: \(g(x) \equiv x^{-2}\). Most students had it drilled into them that “division by zero is illegal,” and \(g(0) = \frac{1}{0} \cdot \frac{1}{0}\), a double law breaker.

    - When \(p\) is not an integer, that is, \(p \neq \ldots, -2, -1, 0, 1, 2, \ldots\), the domain of the power-law function does not include negative inputs. To see why, consider the function \(h(x) \equiv x^{1/2}\): the output \(h(-4) = \sqrt{-4}\) is not a real number.
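It can help to see how a computer reports inputs at the edge of a domain. The sketch below shows what base R returns; note that R follows floating-point conventions and returns the special values `Inf`, `-Inf`, and `NaN` rather than stopping with an error, so "undefined" in the mathematical sense does not always look like a failure on screen.

```r
# Logarithm: defined only for positive inputs.
log_at_one  <- log(1)                     # 0
log_at_zero <- log(0)                     # -Inf, not a finite number
log_at_neg  <- suppressWarnings(log(-1))  # NaN (R also issues a warning)

# Negative powers: the input 0 is outside the domain.
recip_sq_at_zero <- 0^(-2)  # Inf under floating-point conventions
recip_at_zero    <- 1 / 0   # Inf as well

print(c(log_at_one = log_at_one, log_at_zero = log_at_zero,
        log_at_neg = log_at_neg))
print(c(recip_sq_at_zero = recip_sq_at_zero, recip_at_zero = recip_at_zero))
```

Treat an `Inf` or `NaN` in a result as a signal that an input has gone outside the function's domain.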

It is essential that you recognize that the exponential function is utterly different from the functions from the power-law family.

An exponential function, for instance, \(e^x\) or \(2^x\) or \(10^x\), has a constant quantity raised to a power set by the input to the function.

A power-law function works the reverse way: the input is raised to a constant power, as in \(x^2\) or \(x^{10}\).

A mnemonic phrase for exponential functions is:

Exponential functions have \(x\) in the exponent.

Of course, the exponential function can have inputs with names other than \(x\), for instance, \(f(y) \equiv 2^y\), but the name “x” makes for a nice alliteration in the mnemonic.
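Evaluating one function of each kind at the same inputs makes the difference vivid. The sketch below compares \(2^x\) with \(x^2\) in base R; the particular inputs are arbitrary.

```r
x <- c(1, 2, 4, 10, 20)

exponential <- 2^x  # constant base, input in the exponent
power_law   <- x^2  # input as the base, constant exponent

print(data.frame(x, exponential, power_law))
```

The two happen to agree at \(x = 2\) and \(x = 4\), but by \(x = 20\) the exponential output is 1,048,576 while the power-law output is only 400.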

¹ The animal lifespan relationship is true when comparing species. Individual-to-individual variation within a species does not follow this pattern.