40 Differential equations

40.1 Dynamical systems

In this Block, we take on an important application of derivatives: the representation of dynamical systems.

“Dynamical systems” (but not under that name) were developed initially in the 1600s to relate planetary motion to the force of gravity. Nowadays, they are used to describe all sorts of physical systems from oscillations in electrical circuits to the ecology of interacting species to the spread of contagious disease.

As examples of dynamical systems, consider a ball thrown through the air or a rocket being launched to deploy a satellite. At each instant of time, a ball has a position (a point in \(x,y,z\) space) and a velocity \(\partial_t x\), \(\partial_t y\), and \(\partial_t z\). These six quantities, and perhaps others like spin, constitute the instantaneous state of the ball. Rockets have additional components of state, for example the mass of the fuel remaining.

The “dynamical” in “dynamical systems” refers to the change in state. For the ball, the state changes under the influence of mechanisms such as gravity and air resistance. The mathematical representation of a dynamical system codifies how the state changes as a function of the instantaneous state. For example, if the instantaneous state is a single quantity called \(x\), the instantaneous change in state is the derivative of that quantity: \(\partial_t x\).

To say that \(x\) changes in time is to say that \(x\) is a function of time: \(x(t)\). When we write \(x\), we mean \(x()\) evaluated at an instant. When we write \(\partial_t x\), we mean “the derivative of \(x(t)\) with respect to time” evaluated at the same instant as for \(x\).

The dynamical system describing the motion of \(x\) is written in the form of a differential equation, like this:

\[\partial_t x = f(x)\ .\] Notice that the function \(f()\) is directly a function of \(x\), not \(t\). This is very different from the situation we studied in Block 3, where we might have written \(\partial_t y = \cos\left(\frac{2\pi}{P} t\right)\) and assigned you the challenge of finding the function \(y(t)\) by anti-differentiation. (The answer to the anti-differentiation problem, of course, is \(y(t) = \frac{P}{2\pi}\sin\left(\frac{2\pi}{P} t\right) + C\).)

It is essential that you train yourself to distinguish two very different kinds of statement:

- anti-differentiation problems like \(\partial_{\color{blue}{t}} y = g(\color{blue}{t})\), which has \(t\) as both the with-respect-to variable and as the argument to the function \(g()\).

and

- dynamical systems like \[\partial_{\color{blue}{t}} \color{magenta}{y} = g(\color{magenta}{y})\ .\]

This is one place where Leibniz’s notation for derivatives can be useful: \[\underbrace{\frac{d\color{magenta}{y}}{d\color{blue}{t}} = g(\color{blue}{t})}_{\text{as in antidifferentiation}}\ \ \ \text{versus}\ \ \ \underbrace{\frac{d\color{magenta}{y}}{d\color{blue}{t}} = g(\color{magenta}{y})}_{\text{dynamical system}}\]
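To make the distinction concrete, here is a minimal Python sketch that advances \(y\) in small time steps. It uses Euler's method, a numerical technique chosen here only for illustration. The period `P`, the decay dynamics in `g_of_y`, the step size `dt`, and the starting values are all assumptions made for the example, not taken from the text.

```python
import math

def euler(slope, y0, t0=0.0, dt=0.001, n_steps=500):
    """Advance y from (t0, y0) by n_steps Euler steps of size dt.

    slope(t, y) returns the instantaneous rate of change of y.
    """
    t, y = t0, y0
    for _ in range(n_steps):
        y = y + dt * slope(t, y)
        t = t + dt
    return y

P = 2.0  # hypothetical period for the anti-differentiation example

def g_of_t(t, y):
    # Anti-differentiation problem: the slope depends only on t; y is ignored.
    return math.cos(2 * math.pi / P * t)

def g_of_y(t, y):
    # Dynamical system: the slope depends only on the state y; t is ignored.
    # Hypothetical dynamics: exponential decay.
    return -0.5 * y

# Both runs end at t = 0.5 (500 steps of size 0.001).
y_anti = euler(g_of_t, y0=0.0)  # compare with (P / (2*pi)) * sin(2*pi*0.5 / P)
y_dyn = euler(g_of_y, y0=1.0)   # compare with exp(-0.5 * 0.5)
```

In the first run the stepping rule never looks at `y` to decide the slope; in the second it never looks at `t`. That is the whole difference between the two kinds of statement.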

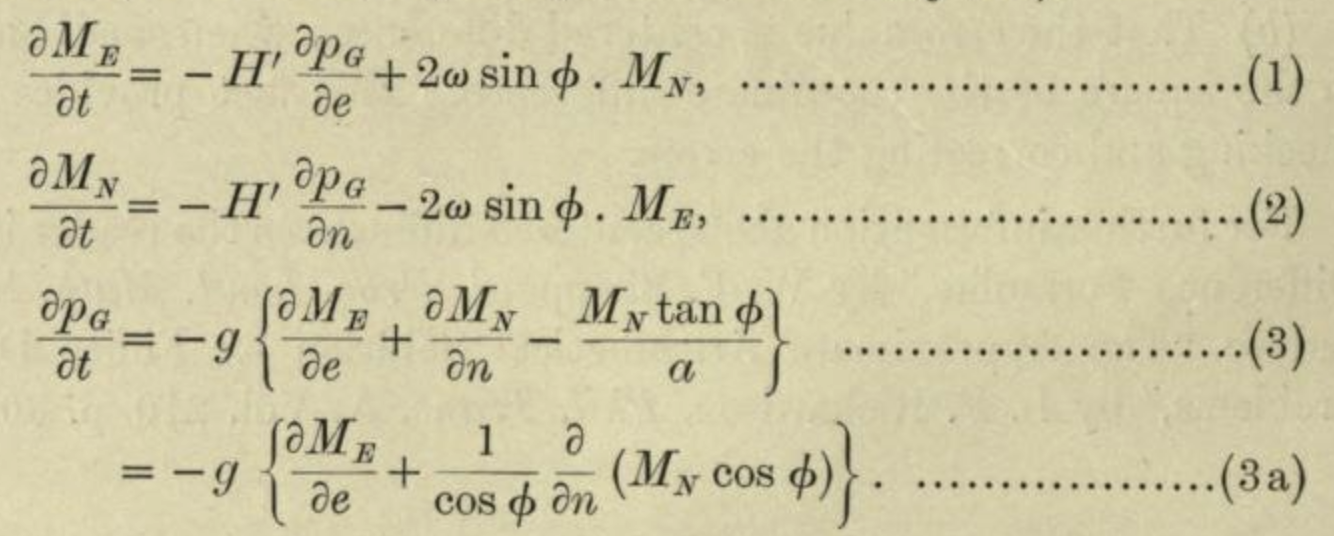

Dynamical systems with multiple state quantities are written mathematically as sets of differential equations, for instance: \[\partial_t y = g(y, z)\\ \partial_t z = h(y, z)\] We typically use the word system rather than “set,” so a dynamical system is represented by a system of differential equations.
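As a rough sketch of how a system of two differential equations can be followed numerically, the Python code below steps the pair \((y, z)\) forward together using Euler's method. The particular choices \(g(y, z) = z\) and \(h(y, z) = -y\), the step size, and the initial state are hypothetical, picked only because the exact answer is known.

```python
def euler_system(g, h, y0, z0, dt=0.001, n_steps=1000):
    """Advance the pair (y, z) by n_steps Euler steps of size dt."""
    y, z = y0, z0
    for _ in range(n_steps):
        # Both rates are evaluated at the current state before either is updated.
        dy, dz = g(y, z), h(y, z)
        y, z = y + dt * dy, z + dt * dz
    return y, z

def g(y, z):
    # Hypothetical dynamical function for y.
    return z

def h(y, z):
    # Hypothetical dynamical function for z.
    return -y

# Starting from (y, z) = (1, 0), the exact state at t = 1 is (cos 1, -sin 1).
y_end, z_end = euler_system(g, h, y0=1.0, z0=0.0)
```

Note that each equation needs both state quantities: neither \(y\) nor \(z\) can be advanced on its own.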

40.2 State

The mathematical language of differential equations and dynamical systems is able to describe a stunning range of systems, for example:

- physics

- swing of a pendulum

- bobbing of a mass hanging from a spring.

- a rocket shooting up from the launch pad

- commerce

- investment growth

- growth in equity in a house as a mortgage is paid up. (“Equity” is the amount of the value of the house that belongs to you.)

- biology

- growth of animal populations, including predator and prey.

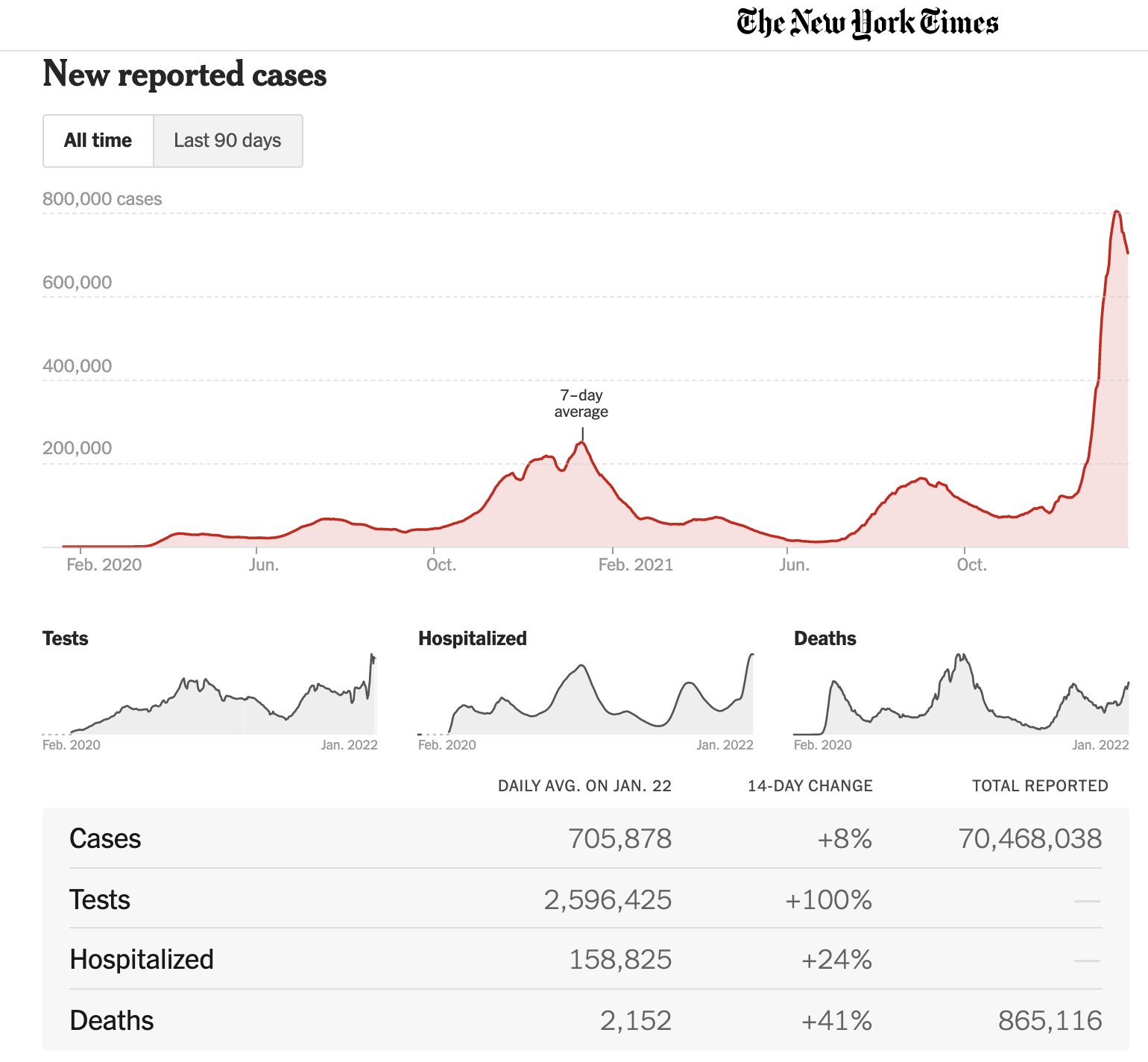

- spread of an infectious disease

- growth of an organism or a crop.

All these systems involve a state that describes the configuration of the system at a given instant in time. For the growth of a crop, the state would be, say, the amount of biomass per unit area. For the spread of infectious disease, the state would be the fraction of people who are infectious and the fraction who are susceptible to infection. “State” is used here in the sense of “the state of affairs,” or “his mental state,” or “the state of their finances.”

Since we are interested in how the state changes over time, sometimes we refer to it as the dynamical state.

One of the things you learn when you study a field such as physics or epidemiology or engineering is what constitutes a useful description of the dynamical state for different situations. In the crop and infectious disease examples above, the state mentioned is a strong simplification of reality: a model. Often, the modeling cycle leads the modeler to include more components in the state. For instance, some models of crop growth include the density of crop-eating insects. For infectious disease, a model might include the fraction of people who are incubating the disease but not yet contagious.

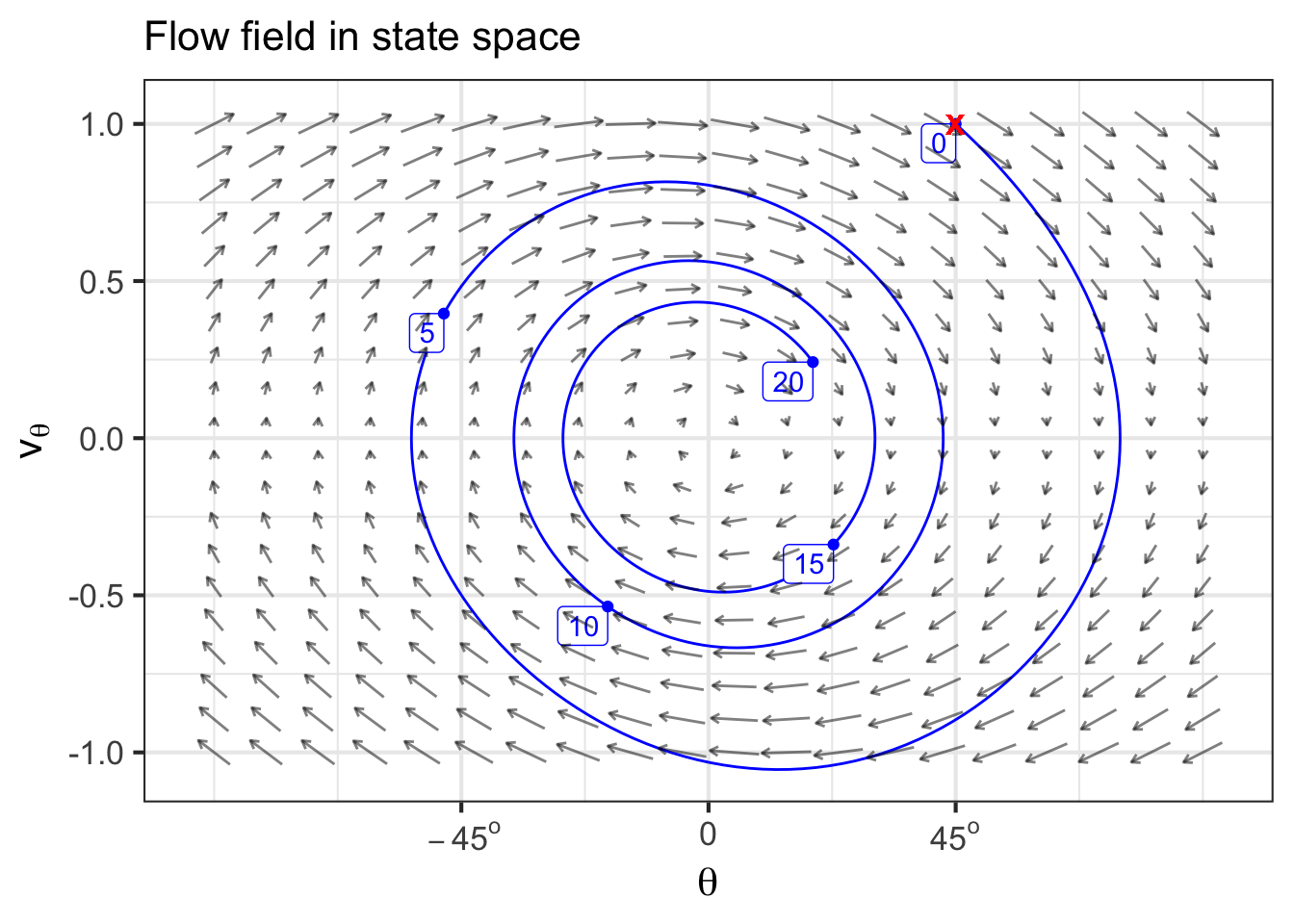

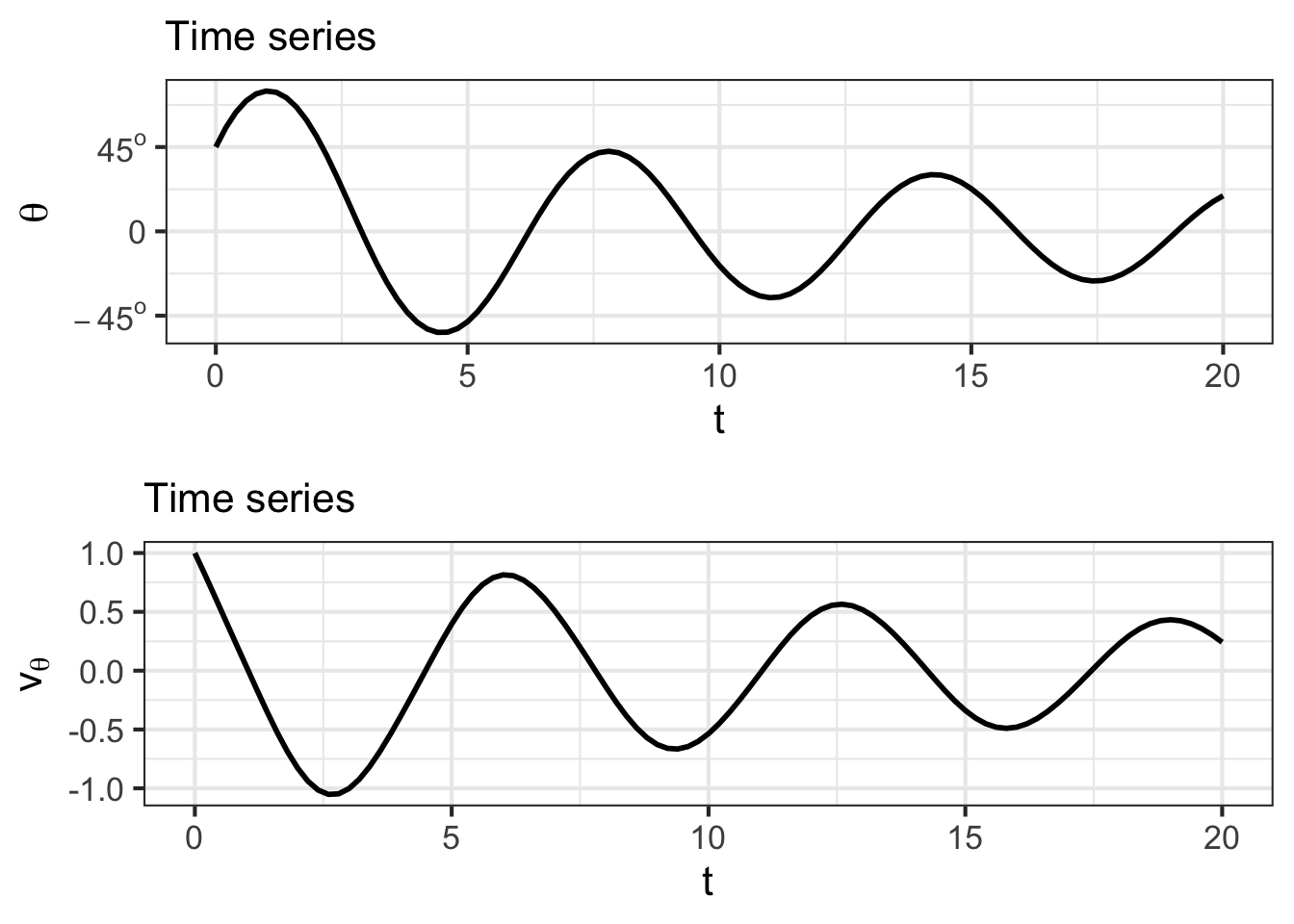

Consider the relatively simple physical system of a pendulum, swinging back and forth under the influence of gravity. In physics, you learn the essential dynamical elements of the pendulum system: the current angle the pendulum makes to the vertical, and the rate at which that angle changes. There are also fixed elements of the system, for instance the length of the pendulum’s rod and the local gravitational acceleration. Although such fixed characteristics may be important in describing the system, they are not elements of the dynamical state. Instead, they might appear as parameters in the functions on the right-hand side of the differential equations.

To be complete, the dynamical state of a system has to include all those changing aspects of the system that allow you to calculate from the state at this instant what the state will be at the next instant. For example, the angle of the pendulum at an instant tells you a lot about what the angle will be at the next instant, but not everything. You also need to know which way the pendulum is swinging and how fast.

Figuring out what constitutes the dynamical state requires knowledge of the mechanics of the system, e.g. the action of gravity, the constraint imposed by the pivot of the pendulum. You get that knowledge by studying the relevant field: electrical engineering, economics, epidemiology, etc. You also learn what aspects of the system are fixed or change slowly enough that they can be considered fixed. (Sometimes you find out that something your intuition tells you is important to the dynamics is, in fact, not. An example is the mass of the pendulum.)
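The pendulum discussion can be sketched in Python as follows. The code assumes the standard frictionless pendulum equations from physics, \(\partial_t \theta = \omega\) and \(\partial_t \omega = -\frac{g}{L}\sin\theta\), and takes a single Euler step. The function name, step size, and numerical values are hypothetical.

```python
import math

def pendulum_step(theta, omega, dt=0.01, length=1.0, gravity=9.8):
    """One Euler step for a frictionless pendulum.

    State: theta (angle from the vertical, in radians) and
           omega (rate of change of theta, in radians per second).
    Parameters, not state: length (m) and gravity (m/s^2).
    The mass of the bob does not appear anywhere.
    """
    d_theta = omega
    d_omega = -(gravity / length) * math.sin(theta)
    return theta + dt * d_theta, omega + dt * d_omega

theta_now = math.radians(20)

# Same angle, opposite directions of swing: the next states differ.
next_a = pendulum_step(theta_now, omega=+0.5)
next_b = pendulum_step(theta_now, omega=-0.5)
```

Knowing `theta_now` alone cannot distinguish `next_a` from `next_b`, which is why the rate of change of the angle has to be part of the state.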

40.3 State space

The state of a dynamical system tells you the configuration of the system at any instant in time. It is appropriate to think about the instantaneous state as a single point in a state space, a coordinate system with an axis for each component of state. As the system configuration changes with time—say, the pendulum loses velocity as it swings to the left—the instantaneous state moves along a path in the state space. Such a path is called a trajectory of the dynamical system.

In this book, we will work almost exclusively with systems that have a one- or two-dimensional state. Consequently, the state space will be either the number line or the coordinate plane. The methods you learn will be broadly applicable to systems with higher-dimensional state.

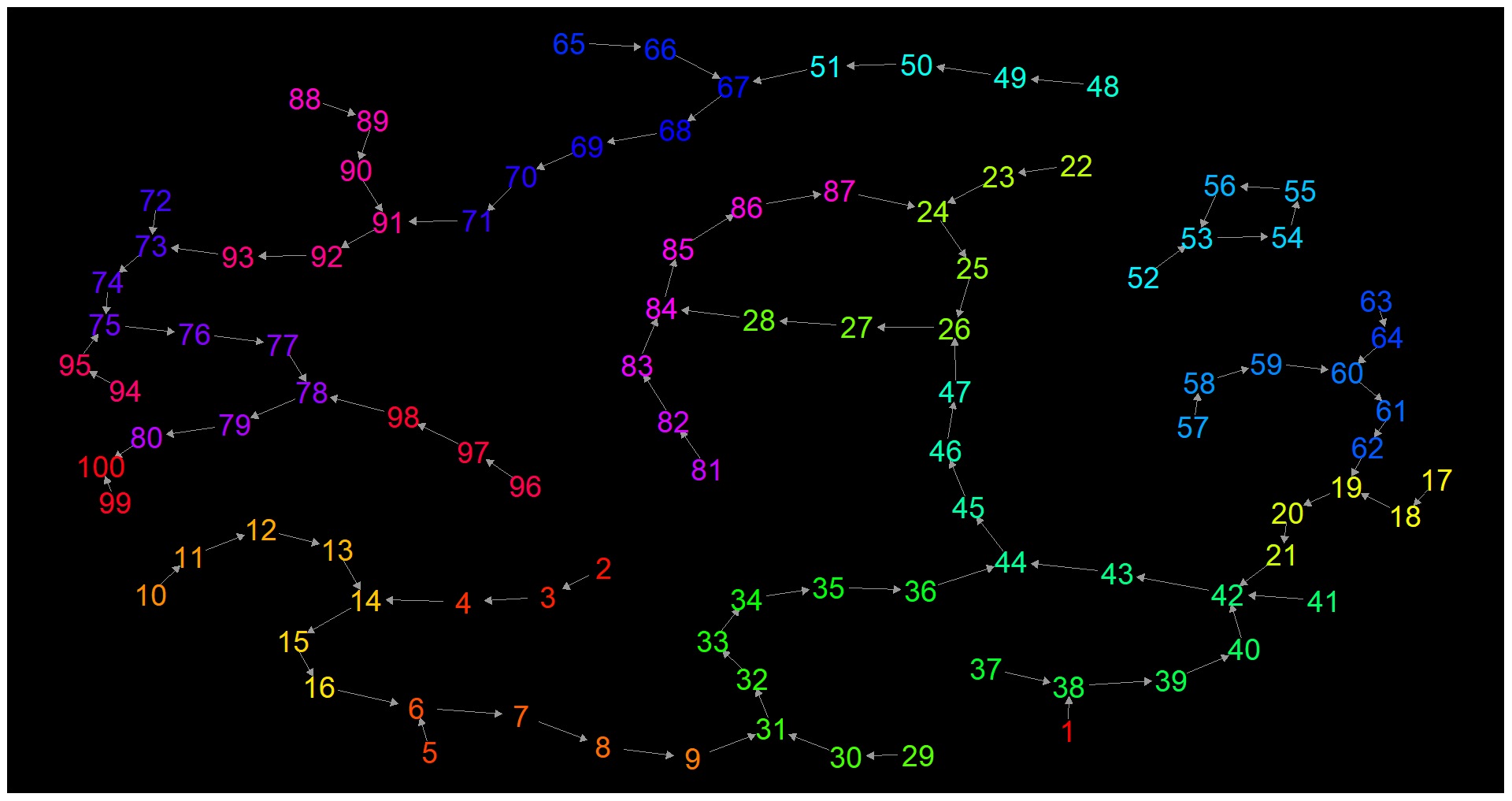

For the deterministic dynamical systems we will be working with, a basic principle is that a trajectory can never cross itself. This can be demonstrated by contradiction. Suppose a trajectory did cross itself. This would mean that the motion from the crossing point could go in either of two directions; the state might follow one branch of the cross or the other. Such a system would not be deterministic. Determinism implies that from each point in state space the flow goes in only one direction.

The dimension of the state space is the same as the number of components of the state: one axis of state space for every component of the state. The dimension has important implications for the type of motion that can exist.

- If the state space is one-dimensional, the state as a function of time must be monotonic. Otherwise, the trajectory would cross itself, which is not permitted.

- A state space that is two- or higher-dimensional can support motion that oscillates back and forth. Such a trajectory does not cross itself; instead, it goes round and round in a spiral or a closed loop.
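The two cases in the list above can be checked numerically. The following Python sketch is only an illustration under stated assumptions: the one-dimensional dynamics \(\partial_t x = x(1-x)\), the two-dimensional dynamics \(\partial_t y = z,\ \partial_t z = -y\), the Euler stepping, and all numerical values are hypothetical choices.

```python
def simulate(dynamics, state, dt=0.01, n_steps=2000):
    """Euler-step a state tuple and return the list of visited states."""
    path = [state]
    for _ in range(n_steps):
        rates = dynamics(state)
        state = tuple(s + dt * r for s, r in zip(state, rates))
        path.append(state)
    return path

def one_dim(state):
    # Hypothetical one-dimensional system: the rate depends on x alone.
    (x,) = state
    return (x * (1 - x),)

def two_dim(state):
    # Hypothetical two-dimensional system.
    y, z = state
    return (z, -y)

xs = [s[0] for s in simulate(one_dim, (0.1,))]
ys = [s[0] for s in simulate(two_dim, (1.0, 0.0))]

# One dimension: x never reverses direction.
is_monotonic = all(b >= a for a, b in zip(xs, xs[1:]))

# Two dimensions: y repeatedly changes sign as the state circles the origin.
sign_changes = sum(1 for a, b in zip(ys, ys[1:]) if a * b < 0)
```

In the one-dimensional run, `x` has no way to turn around: the rate at a given `x` is always the same, so revisiting a value would mean moving in the same direction as before.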

For many decades, it was assumed that all dynamical systems produce either monotonic behavior or spiral or loop behavior. In the 1960s, scientists working on a highly simplified model of the atmosphere discovered numerically that there is a third type of behavior, the irregular and practically unpredictable behavior called chaos. To display chaos, the state space of the system must have at least three dimensions.

The pendulum was started out by lifting it to an angle of \(45^\circ\) and giving it an initial upward velocity. The bob swings up for a bit before being reversed by gravity and swinging toward \(\theta = 0\) and beyond. Due to air resistance, the amplitude of swinging decreases over time.
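A trajectory like the one just described can be approximated with a short Python sketch. This is not the computation behind the original figure. It assumes the standard pendulum equation with an added damping term proportional to the angular velocity as a crude stand-in for air resistance; the damping coefficient, rod length, initial angular velocity, and Euler step size are all hypothetical.

```python
import math

def damped_pendulum_path(theta0, omega0, dt=0.001, t_end=20.0,
                         length=1.0, gravity=9.8, damping=0.3):
    """Return the list of (theta, omega) states visited by Euler stepping."""
    n_steps = int(round(t_end / dt))
    theta, omega = theta0, omega0
    path = [(theta, omega)]
    for _ in range(n_steps):
        d_theta = omega
        # Gravity pulls toward theta = 0; damping opposes the motion.
        d_omega = -(gravity / length) * math.sin(theta) - damping * omega
        theta, omega = theta + dt * d_theta, omega + dt * d_omega
        path.append((theta, omega))
    return path

# Start at 45 degrees with a positive (upward) angular velocity.
path = damped_pendulum_path(theta0=math.radians(45), omega0=1.0)
thetas = [theta for theta, _ in path]

first_peak = max(thetas)                                # highest angle reached
late_amplitude = max(abs(th) for th in thetas[-5000:])  # final 5 seconds
```

Plotting `omega` against `theta` from `path` would show the state spiraling inward toward the origin of the state space.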


The flow of a dynamical system tells how different points in state space are connected. Because movement of the state is continuous in time and the state space itself is continuous, the connections cannot be stated in the form “this point goes to that point.” Instead, as has been the case all along in calculus, we describe the movement in terms of a “velocity” vector. Each dynamical function specifies one component of the “velocity” vector; taken together, they tell the direction and speed of movement of the state at each instant in time.

Perhaps it would be better to use the term state velocity instead of “velocity.” In physics and most aspects of everyday life, “velocity” refers to the rate of change of physical position of an object. Similarly, the state velocity tells the rate of change of the position of the state. It is a useful visualization technique to think of the state as an object skating around the state space in a manner directed by the dynamical functions. But the state space almost always includes components other than physical position. For instance, in the rabbit/fox model, the state says nothing about where individual rabbits and foxes are located in their environment; it is all about the density of animals in a region.
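To illustrate a state velocity that has nothing to do with physical position, here is a small Python sketch of a predator-prey system. The equations are of the familiar Lotka-Volterra form and the coefficients are invented for the example; they are an assumption, not necessarily the rabbit/fox model referred to in the text.

```python
import math

def rabbit_fox_velocity(rabbits, foxes):
    """State velocity at the point (rabbits, foxes) in state space.

    Hypothetical dynamical functions (densities, not locations):
      d(rabbits)/dt =  1.00 * rabbits - 0.50 * rabbits * foxes
      d(foxes)/dt   = -0.75 * foxes   + 0.25 * rabbits * foxes
    """
    d_rabbits = 1.0 * rabbits - 0.5 * rabbits * foxes
    d_foxes = -0.75 * foxes + 0.25 * rabbits * foxes
    return d_rabbits, d_foxes

# One component of the state velocity per component of the state.
velocity = rabbit_fox_velocity(2.0, 1.0)

# Speed of the state through state space at that point.
speed = math.hypot(*velocity)

# At this particular point the state is not moving at all.
at_rest = rabbit_fox_velocity(3.0, 2.0)
```

Here the "position" is a pair of animal densities, and the state velocity says how fast each density is changing.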

In physics, the state space often consists of position in physical space as well as the physical velocity in physical space. For instance, the state might consist of the three \(x, y, z\) components of physical position as well as the three \(v_x, v_y, v_z\) components of physical velocity. Altogether, that is a six-dimensional state space. The state velocity also has six components. Three of those components will be the “velocity of the velocity,” that is, the direction and speed with which the physical velocity is changing.
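For a thrown ball, the six-component state velocity might be sketched in Python as below. The sketch assumes that gravity is the only influence (no air resistance or spin) and that \(z\) points upward; the function name and the value of `GRAVITY` are choices made for the example.

```python
GRAVITY = 9.8  # m/s^2, assumed constant and directed along -z

def ball_state_velocity(state):
    """Return the rate of change of each of the six state components.

    state = (x, y, z, vx, vy, vz)
    """
    x, y, z, vx, vy, vz = state
    # The first three components are the physical velocity itself.
    # The last three are the "velocity of the velocity" (the acceleration).
    return (vx, vy, vz, 0.0, 0.0, -GRAVITY)

state = (0.0, 0.0, 10.0, 1.0, 2.0, 3.0)
state_velocity = ball_state_velocity(state)
```

Under these assumptions the physical position does not affect the state velocity at all; only the three velocity components do.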