15 Magnitudes

Undoubtedly you are comfortable with the standard way of writing numbers, for instance 33 or 512 or 1051. Elementary school students master the comparison of such numbers. Which is greater: 512 or 33? Which is less, 1051 or 512? You can answer such questions at a glance; the comparison can be accomplished simply by counting the number of digits. 1051 has four digits, so it is larger than the three-digit number 512. There are two digits in 33, so it smaller than 512. When two numerals have the same number of digits—say, 337 and 512—you can’t answer the “greater than” question by simple counting. Instead, you proceed from left to right and compare the number in each place. So, for 512 and 337, you compare 5 to 3 and … since 5 is greater than 3, 512 is greater than 337. If the two leading digits are the same, go on to the next digit and so on for all the digits in turn. ![]() 1600

1600

Things were not always this simple. Our number system that uses place and Arabic numerals is a human invention. An example of an earlier number system is Roman numerals. Here, comparison is hard. For instance, which of these three numbers is bigger?

\[\text{MLI or CXII or XXXIII}\] The typographically shorter number is the largest, and vice versa. Even when two Roman numerals have the same length, it’s not trivial to compare them on a place-by-place basis. For instance, IC is about fifteen times bigger than VI, even though I is much smaller than V.

15.1 Counting digits

Digit counting provides an easy, fast way to perform many calculations, at least approximately. What is \(\sqrt{10000}\)? There are five digits, and the square root of a number will have “half the number of digits.” So, \(\sqrt{10000} = 100\). What is \(10 \times 34\)? Easy: 340. Just append the one zero from 10 to the end of 34. What is \(1000 \times 13\)? Just as easy: 13,000. We even punctuate written numbers with commas and a period in order to facilitate counting digits.

Imagine having a digit counting function called digit(). It takes a number as input and produces a number as output. We don’t have a formula for digit(), but for some inputs the output can be calculated just be counting. For example:

- digit(10) \(\equiv\) 1

- digit(100) \(\equiv\) 2

- digit(1000) \(\equiv\) 3

- … and so on …

- digit(1,000,000) \(\equiv\) 6

- … and on.

The digit() function easily can be applied to the product of two numbers. For instance:

- digit(1000 \(\times\) 100) = digit(1000) + digit(100) = 3 + 2 = 5.

Similarly, applying digit() to a ratio gives the difference of the digits of the numerator and denominator, like this:

- digit(1,000,000 \(\div\) 100) = digit(1,000,000) - digit(100) = 6 - 2 = 4

15.2 Using digit() to understand magnitude

We haven’t shown you the digit() function for anything but the handful of discrete inputs listed above. It was a heroic task to produce the continuous version of digit(). The method is sketched out in 15.7.

In practice, digit() is so useful that it could well have been one of our basic modeling functions:

\[\text{digit(x)} = 2.302585 \ln(x)\] or, in R, log10(). We elected \(\ln()\) rather than digit() for reasons that will be seen when we study differentiation.

15.3 Quantity and magnitude

The familiar quantity 60 miles-per-hour is written as a number (60 here) followed by units. The quantity is neither the number nor the units: it is the combination of the two. For instance, 100 is obviously not the same as 60. And miles-per-hour is not the same as kilometers-per-hour. Yet, 60 miles-per-hour is almost exactly the same quantity as 100 kilometers-per hour.21 ![]() 1610

1610

6, 60, 600, and 6000 miles-per-hour are quantities that differ in size by orders of magnitude. Such differences often point to a substantial change in context. A jog is 6 mph, a car on a highway goes 60 mph, a cruising commercial jet goes 600 mph, a rocket passes through 6000 mph on its way to orbital velocity.

In everyday speech, the difference between 60 and 6 is 54; just subtract. Modelers and scientists routinely mean something else: the difference between 60 and 6 is “one order of magnitude.” Similarly, 60 and 6000 are different by “two orders of magnitude,” and 6 and 6000 by three orders of magnitude.

In everyday English, we have phrases like “a completely different situation” or “different in kind” or “qualitatively different” (note the l) to indicate substantial differences. “Different orders of magnitude” expresses the same kind of idea but with specific reference to quantity.

The use of factors of 10 in counting orders of magnitude is arbitrary. A person walking and a person jogging are on the edge of being qualitatively different, although their speeds differ by a factor of only 2. Aircraft that cruise at 600 mph and 1200 mph are qualitatively different in design, although the speeds are only a factor of 2 apart. A professional basketball player (height 2 meters or more) is qualitatively different from a third grader (height about 1 meter).

Modelers develop an intuitive sense for when to think about difference in terms of a subtractive difference (e.g. 60 - 6 = 54) and when to look at orders of magnitude (e.g. 60-to-6 is one order of magnitude). This seems to be a skill based in experience and judgment, as opposed to a mechanical process.

One clue that thinking in terms of orders of magnitude is appropriate is when you are working with a set of objects whose range of sizes spans one or many factors of 2. Comparing baseball and basketball players? Probably no need for orders of magnitudes. Comparing infants, children, and adults in terms of height or weight? Orders of magnitude may be useful. Comparing bicycles? Mostly they fit within a range of 2 in terms of size, weight, and speed (but not expense!). Comparing cars, SUVs, and trucks? Differences by a factor of 2 are routine, so thinking in terms of order of magnitude is likely to be appropriate.

Another clue is whether “zero” means “nothing.” Daily temperatures in the winter are often near “zero” on the Fahrenheit or Celcius scales, but that in no way means there is a complete absence of heat. Those scales are arbitrary. Another way to think about this clue is whether negative values are meaningful. If so, thinking in terms of orders of magnitude is not likely to be useful.

You may have guessed that digits() is handy for computing differences in terms of orders of magnitude. Here’s how: ![]() 1620

1620

- Make sure that the quantities are expressed in the same units.

- Calculate the difference between the

digits()of the numerical part of the quantity.

What is the order-of-magnitude difference in velocity between a snail and a walking human. A snail slides at about 1 mm/sec, a human walks at about 5 km per hour. Putting human speed in the same units as snail speed:

\[5 \frac{km}{hr} = \left[\frac{1}{3600} \frac{hr}{sec}\right] 5 \frac{km}{hr} = \left[10^6 \frac{mm}{km}\right] \left[\frac{1}{3600} \frac{hr}{sec}\right] 5 \frac{km}{hr} = 1390 \frac{mm}{sec} \]

Calculating the difference in digits() between 1 and 1390:

## [1] 3.143015So, about 3 orders of magnitude difference in speed. To a snail, we walking humans must seem like rockets on their way to orbit!

Animals, including humans, go about the world in varying states of illumination, from the bright sunlight of high noon to the dim shadows of a half moon. To be able to see in such diverse conditions, the eye needs to respond to light intensity across many orders of magnitude.

The lux is the unit of illuminance in the Système international. This table22 shows the illumination in a range of familiar outdoor settings:

| Illuminance | Condition |

|---|---|

| 110,000 lux | Bright sunlight |

| 20,000 lux | Shade illuminated by entire clear blue sky, midday |

| 1,000 lux | Typical overcast day, midday |

| 400 lux | Sunrise or sunset on a clear day (ambient illumination) |

| 0.25 lux | A full Moon, clear night sky |

| 0.01 lux | A quarter Moon, clear night sky |

For a creature active both night and day, they eye needs to be sensitive over 7 orders of magnitude of illumination. To accomplish this, eyes use several mechanisms: contraction or dilation of the pupil accounts for about 1 order of magnitude, photopic (color, cones) versus scotopic (black-and-white, rods, nighttime) covers about 3 orders of magnitude, adaptation over minutes (1 order), squinting (1 order).

15.4 Composing \(\ln()\)

The logarithm is the inverse of the exponential function. In other words, \[\ln(e^x) = x\ \ \text{and}\ \ e^{\ln(x)} = x\]

Think about this in terms of the kinds of quantities that are the input and output to each function. ![]() 1630

1630

- Logarithm: The input is a quantity, the output is the magnitude of that quantity.

- Exponential: The input is a magnitude, the output is the quantity with that magnitude.

15.5 Magnitude graphics

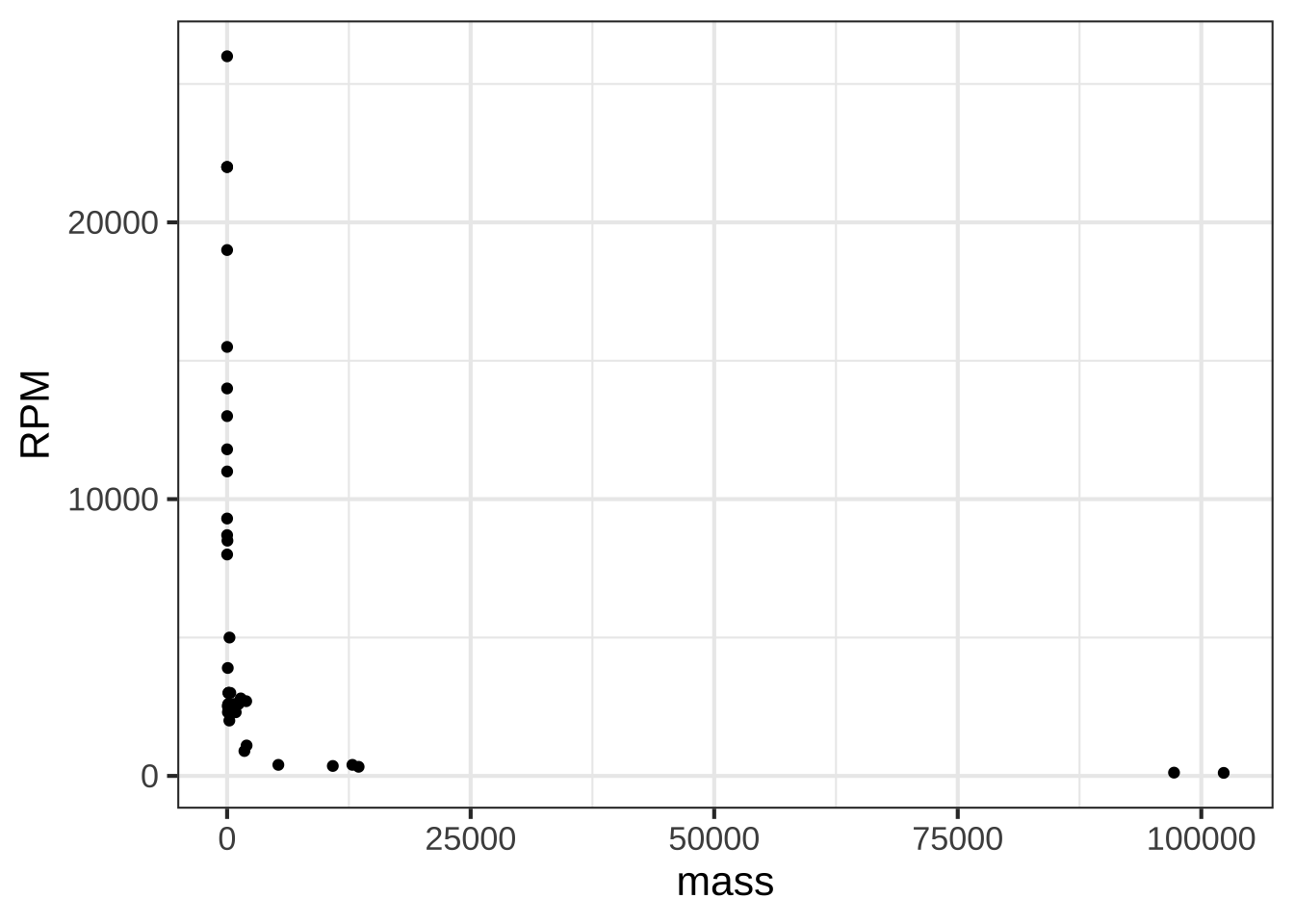

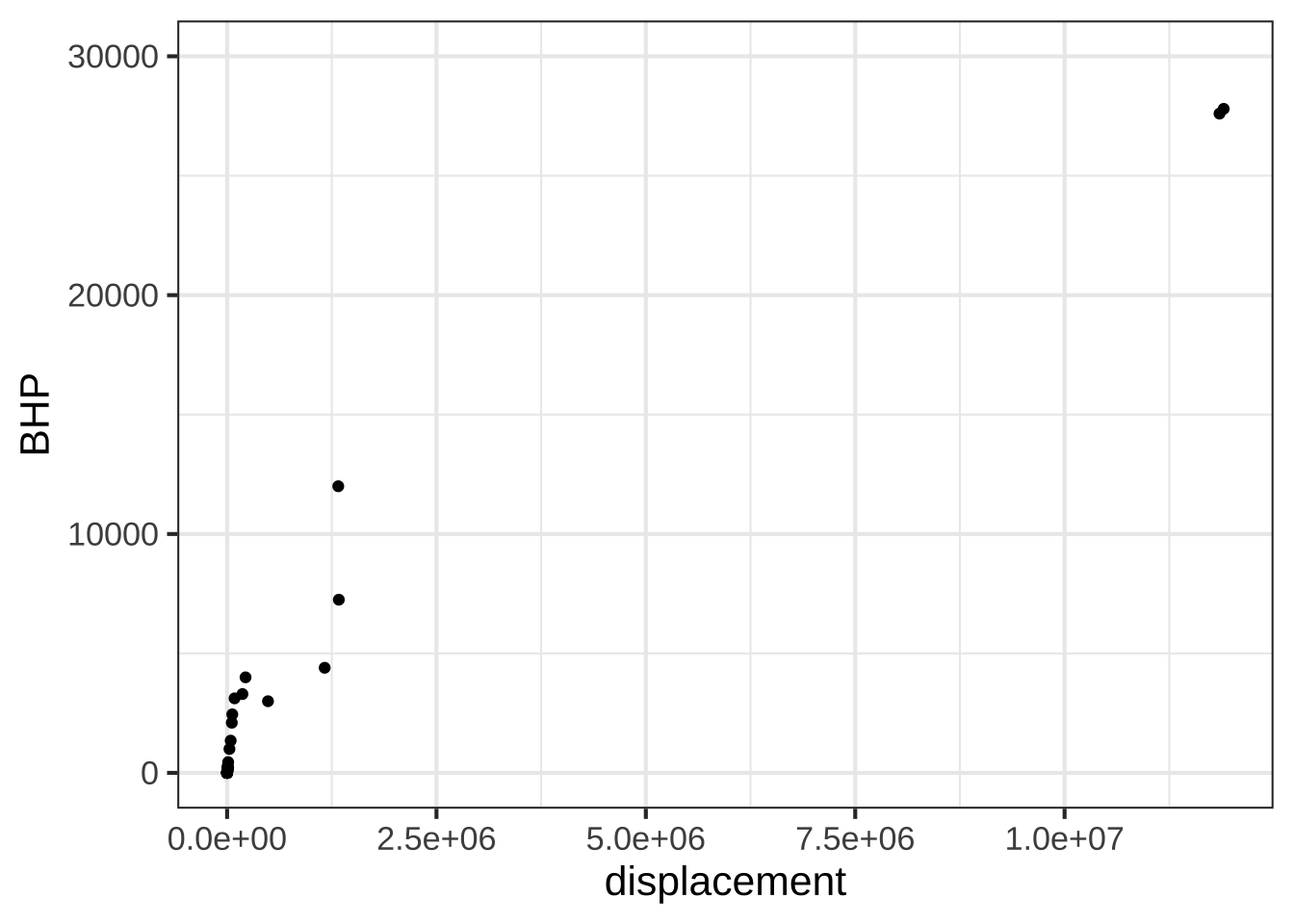

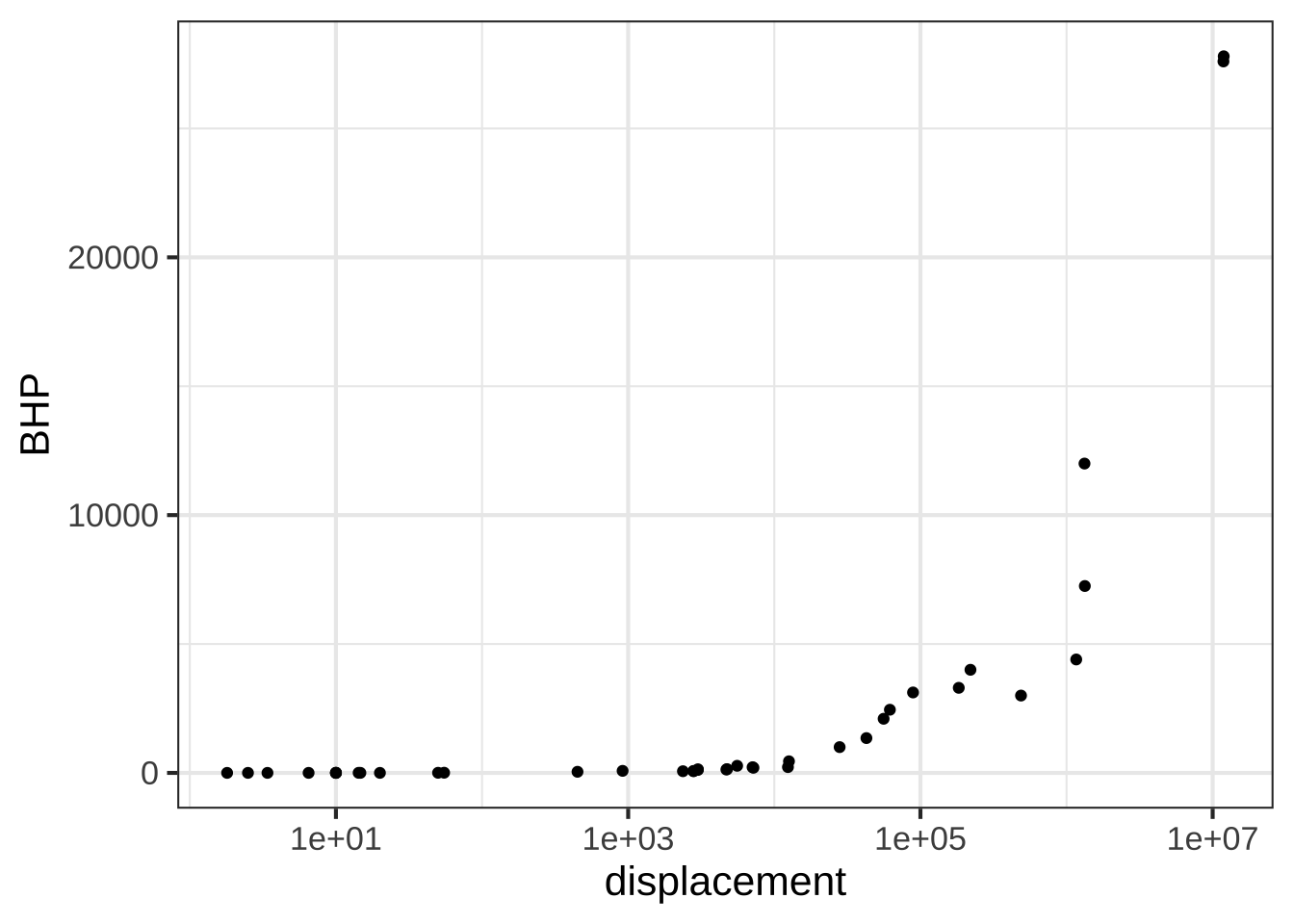

In order to display a variable from data that varies over multiple orders of magnitude, it helps to plot the logarithm rather than the variable itself. Let’s illustrate using the Engine data frame, which contains measurements of many different internal combustion engines of widely varying size. For instance, we can graph engine RPM (revolutions per second) versus enging mass, as in Figure 15.1.

gf_point(RPM ~ mass, data = Engines)

Figure 15.1: Engine RPM versus mass for 39 different enginges plotted on the standard linear axis.

In the graph, most of the engines have a mass that is … zero. At least that’s what it appears to be. The horizontal scale is dominated by the two huge 100,000 pound monster engines plotted at the right end of the graph.

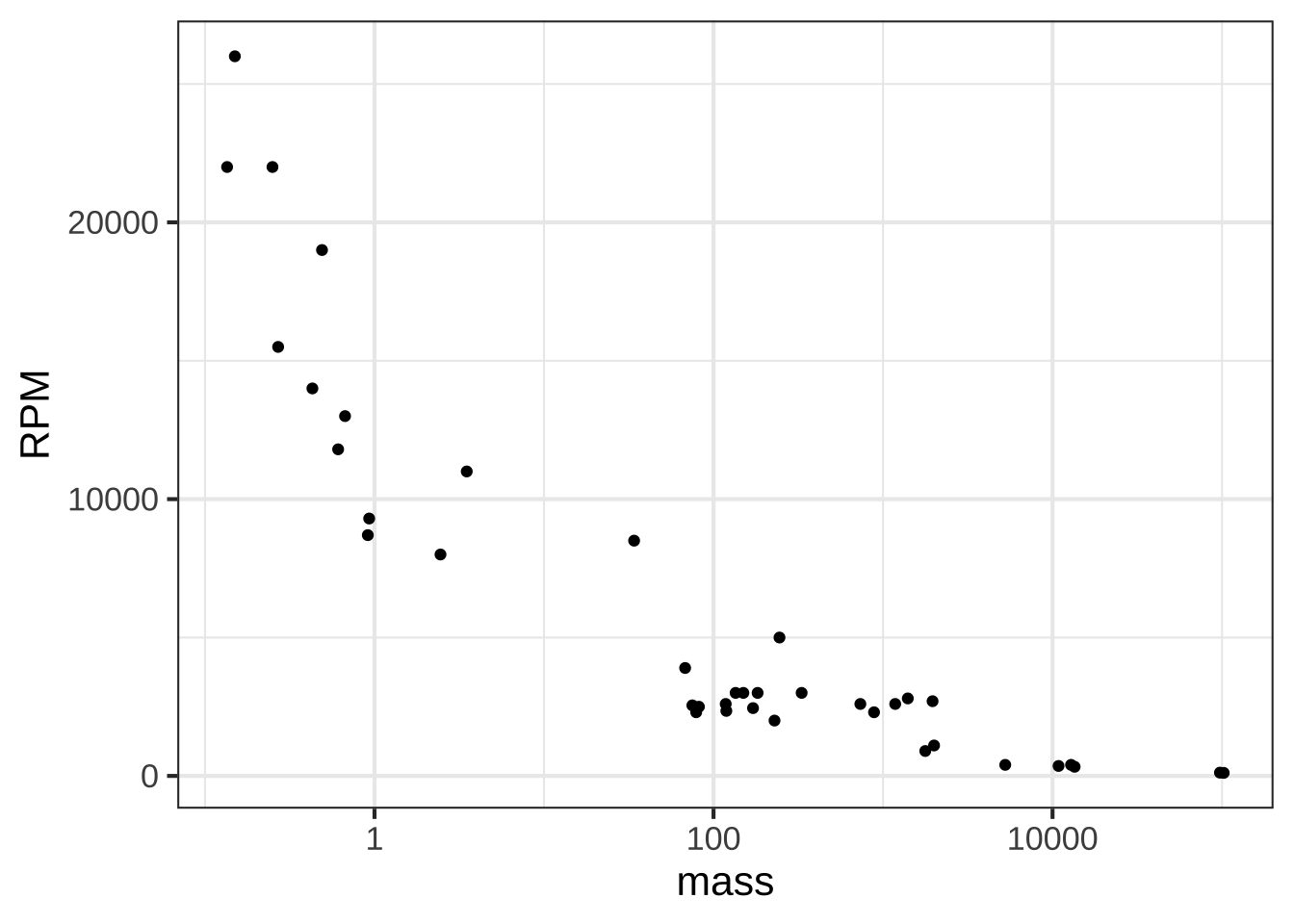

Plotting the logarithm of the engine mass spreads things out, as in Figure 15.2.

gf_point(RPM ~ mass, data = Engines) %>%

gf_refine(scale_x_log10())

Figure 15.2: Engine RPM versus mass on semi-log axes.

Note that the horizontal axis has been labelled with the actual mass (in pounds), with the labels evenly spaced in terms of their logarithm. This presentation, with the horizontal axis constructed this way, is called a semi-log plot.

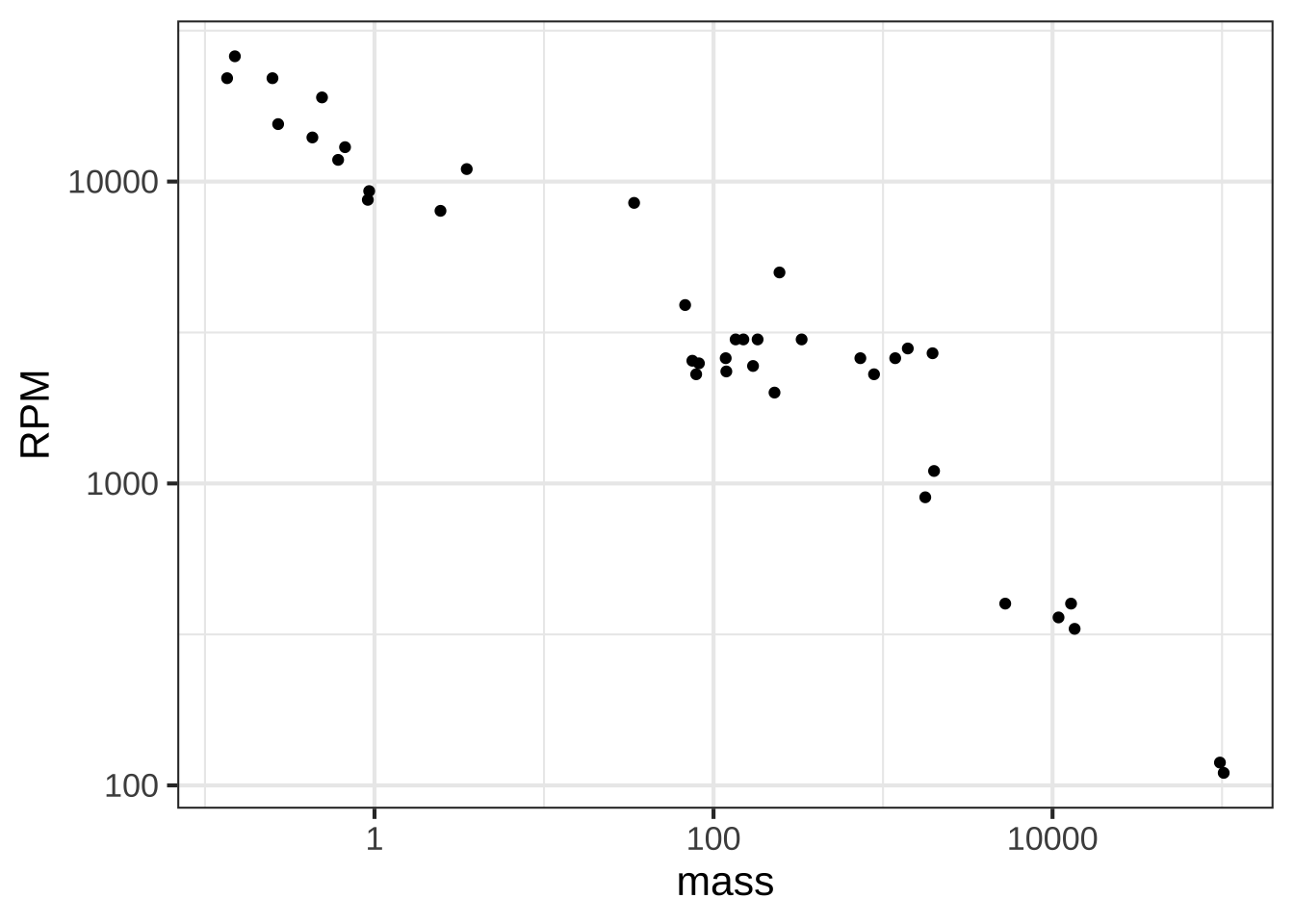

When both axes are labeled this way, we have a log-log plot, as shown in Figure 15.3.

gf_point(RPM ~ mass, data = Engines) %>%

gf_refine(

scale_x_log10(),

scale_y_log10()

)

Figure 15.3: Engine RPM versus mass on log-log axes.

Semi-log and log-log axes are widely used in science and economics, whenever data spanning several orders of magnitude need to be displayed. In the case of the engine RPM and mass, the log-log axis shows that there is a graphically simple relationship between the variables. Such axes are very useful for displaying data, but can be hard for the newcomer to read quantitatively. For example, calculating the slope of the evident straight-line relationship in Figure 15.3 is extremely difficult for a human reader and requires translating the labels into their logarithms.

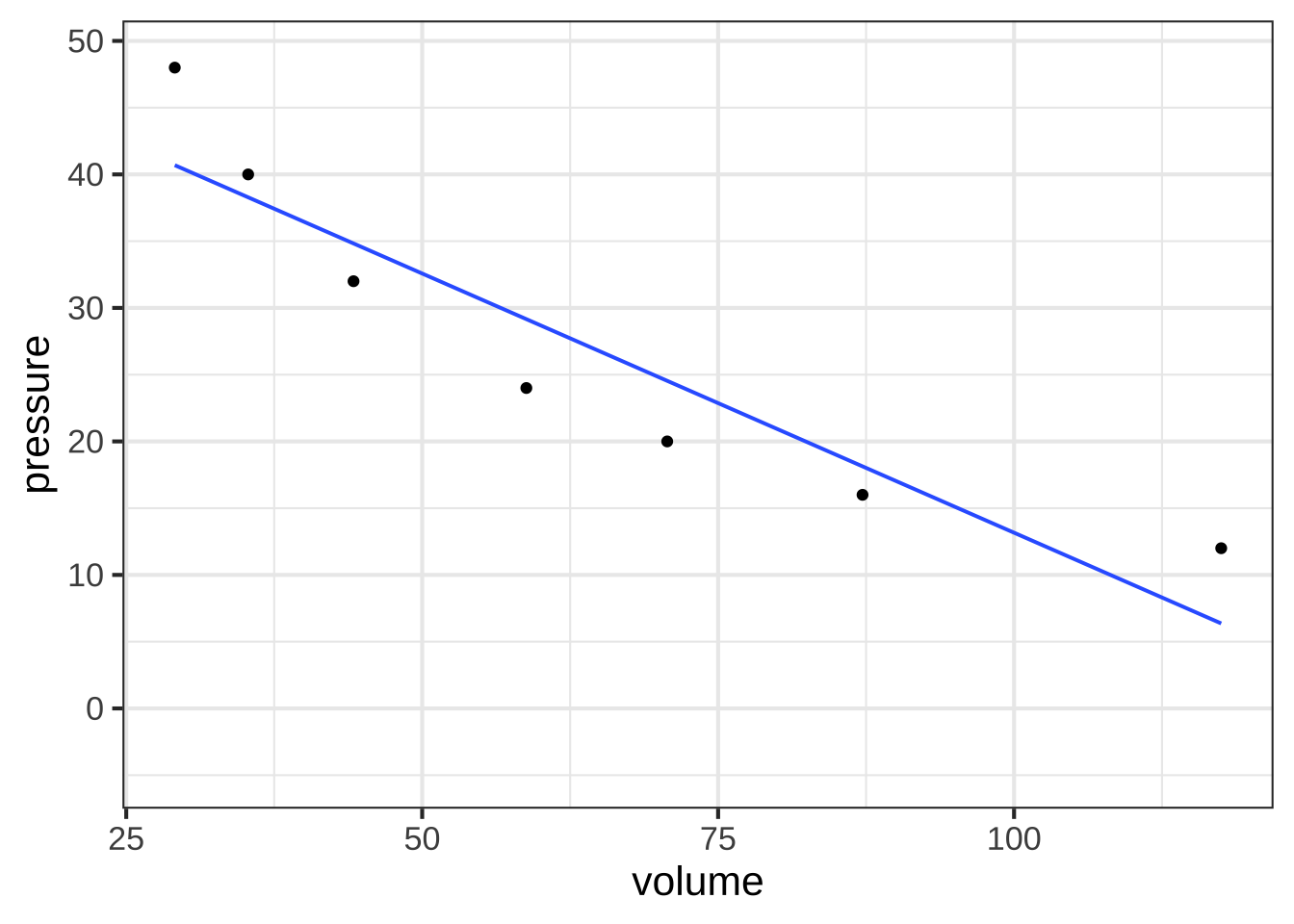

Robert Boyle (1627-1691) was a founder of modern chemistry and of the scientific method in general. As any chemistry student already knows, Boyle sought to understand the properties of gasses. His results are summarized in Boyle’s Law.

The data frame Boyle contains two variables from one of Boyle’s experiments as reported in his lab notebook: pressure in a bag of air and volume of the bag. The units of pressure are mmHg and the units of volume are cubic inches.23

Famously, Boyle’s Law states that, at constant temperature, the pressure of a constant mass of gas is inversely proportional to the volume occupied by the gas. Figure 15.4 shows a cartoon of the relationship.

](www/Boyles_Law_animated.gif)

Figure 15.4: A cartoon illustrating Boyle’s Law. Source: NASA Glenn Research Center

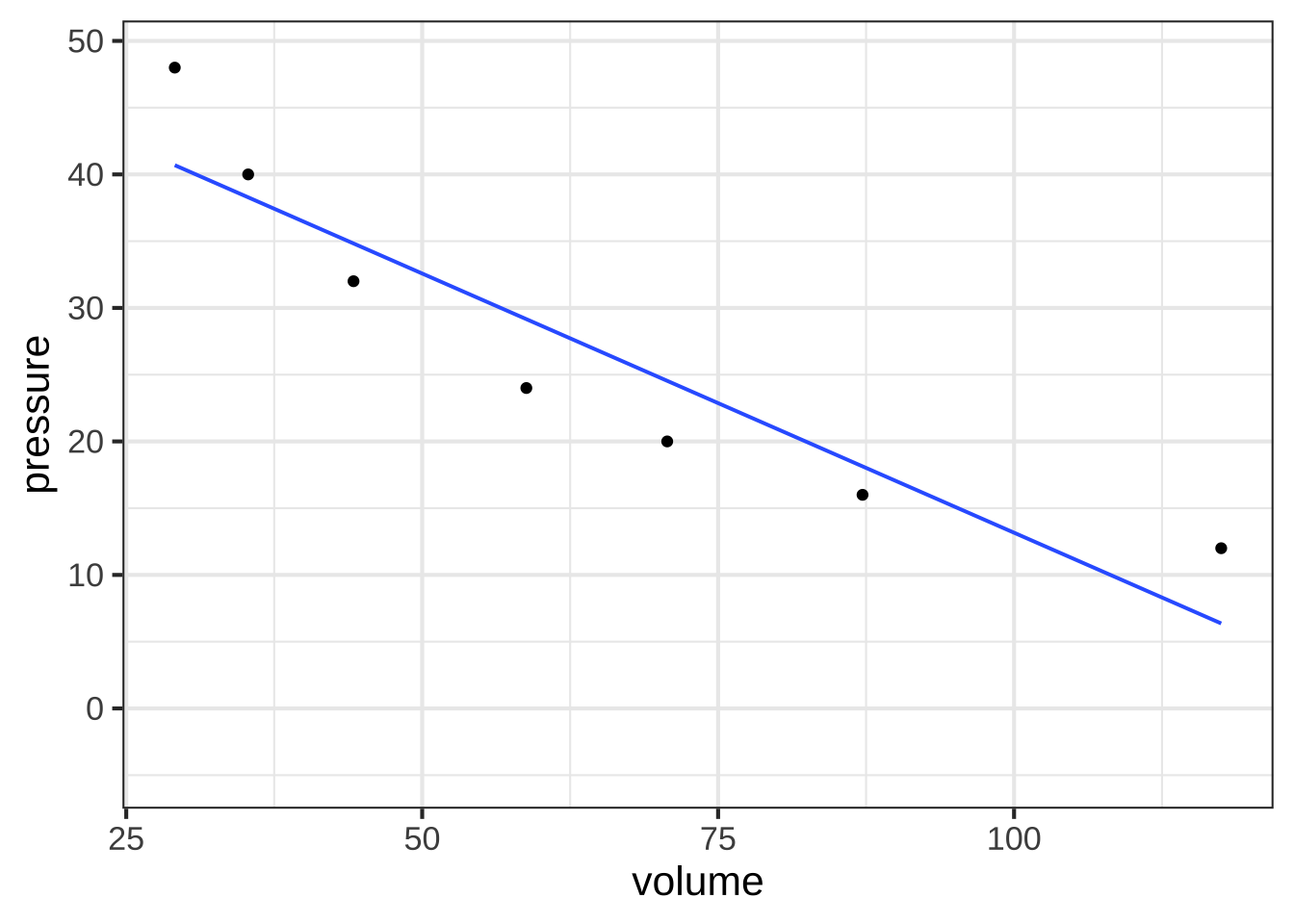

Figure 15.5 plots out Boyle’s actual experimental data. I

gf_point(pressure ~ volume, data = Boyle) %>%

gf_lm()

Figure 15.5: A plot of Boyle’s pressure vs volume data on linear axes. The straight line model is a poor representation of the pattern seen in the data.

You can see a clear relationship between pressure and volume, but it’s hardly a linear relationship.

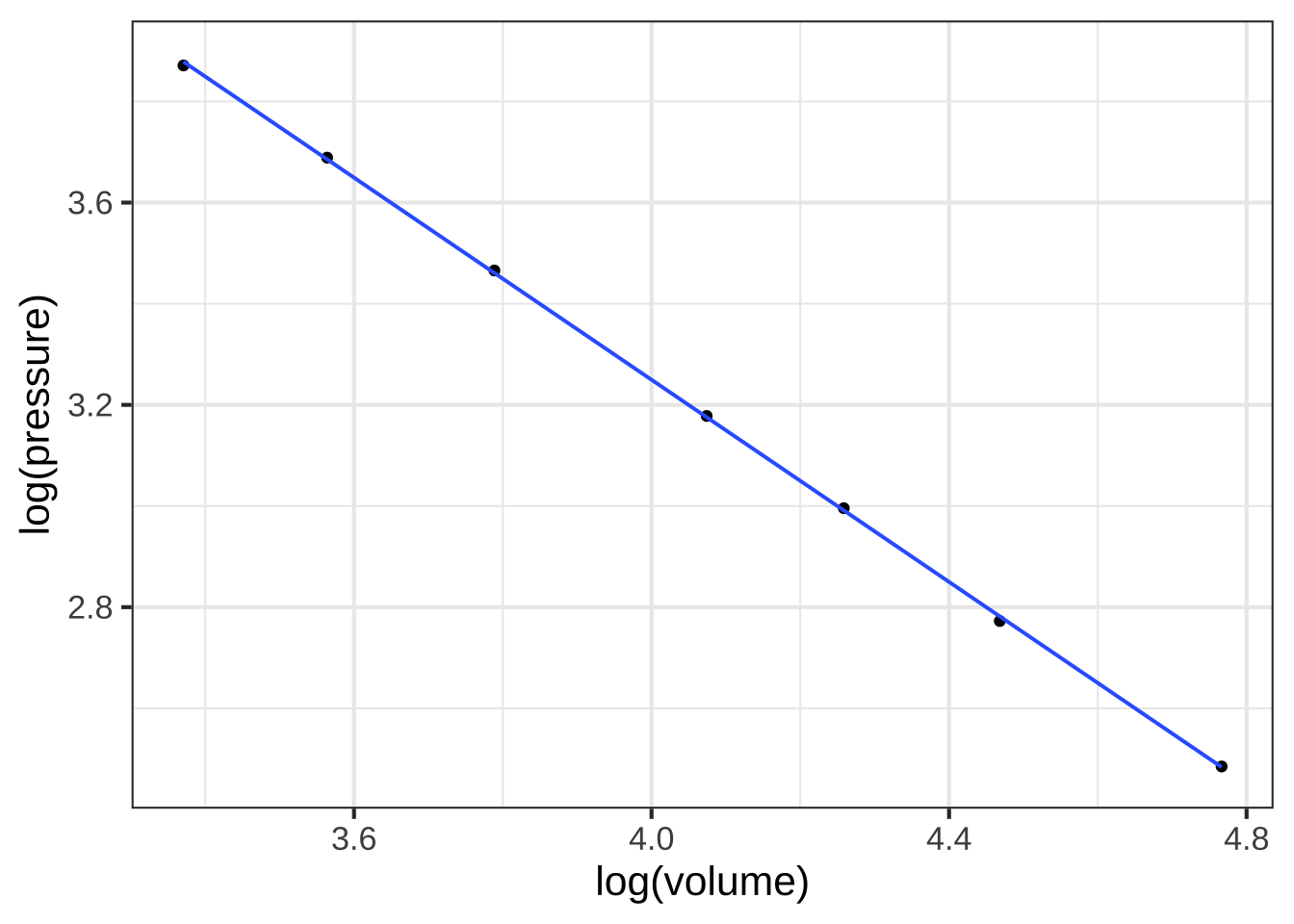

Plotting Boyle’s data on log-log axes reveals that, in terms of the logarithm of pressure and the logarithm of volume, the relationship is linear.

Figure 15.6: Plotting the logarithm of pressure against the logarithm of volume reveals a straight-line relationship.

Figure 15.6 shows that Boyle’s log-pressure and log-volume data are a straight-line function. In other words:

\[\ln(\text{Pressure}) = a + b \ln(\text{Volume})\]

You can find the slope \(b\) and intercept \(a\) from the graph. For now, we want to point out the consequences of the straight-line relationship between logarithms.

Exponentiating both sides gives \[e^{\ln(\text{Pressure})} = \text{Pressure} = e^{a + b \ln(\text{Volume})} = e^a\ \left[e^{ \ln(\text{Volume})}\right]^b = e^a\, \text{Volume}^b\] or, more simply (and writing the number \(e^a\) as \(A\))

\[\text{Pressure} = A\, \text{Volume}^b\] A power-law relationship!

15.6 Reading logarithmic scales

Plotting the logarithm of a quantity gives a visual display of the magnitude of the quantity and labels the axis as that magnitude. A useful graphical technique is to label the axis with the original quantity, letting the position on the axis show the magnitude. ![]() 1640

1640

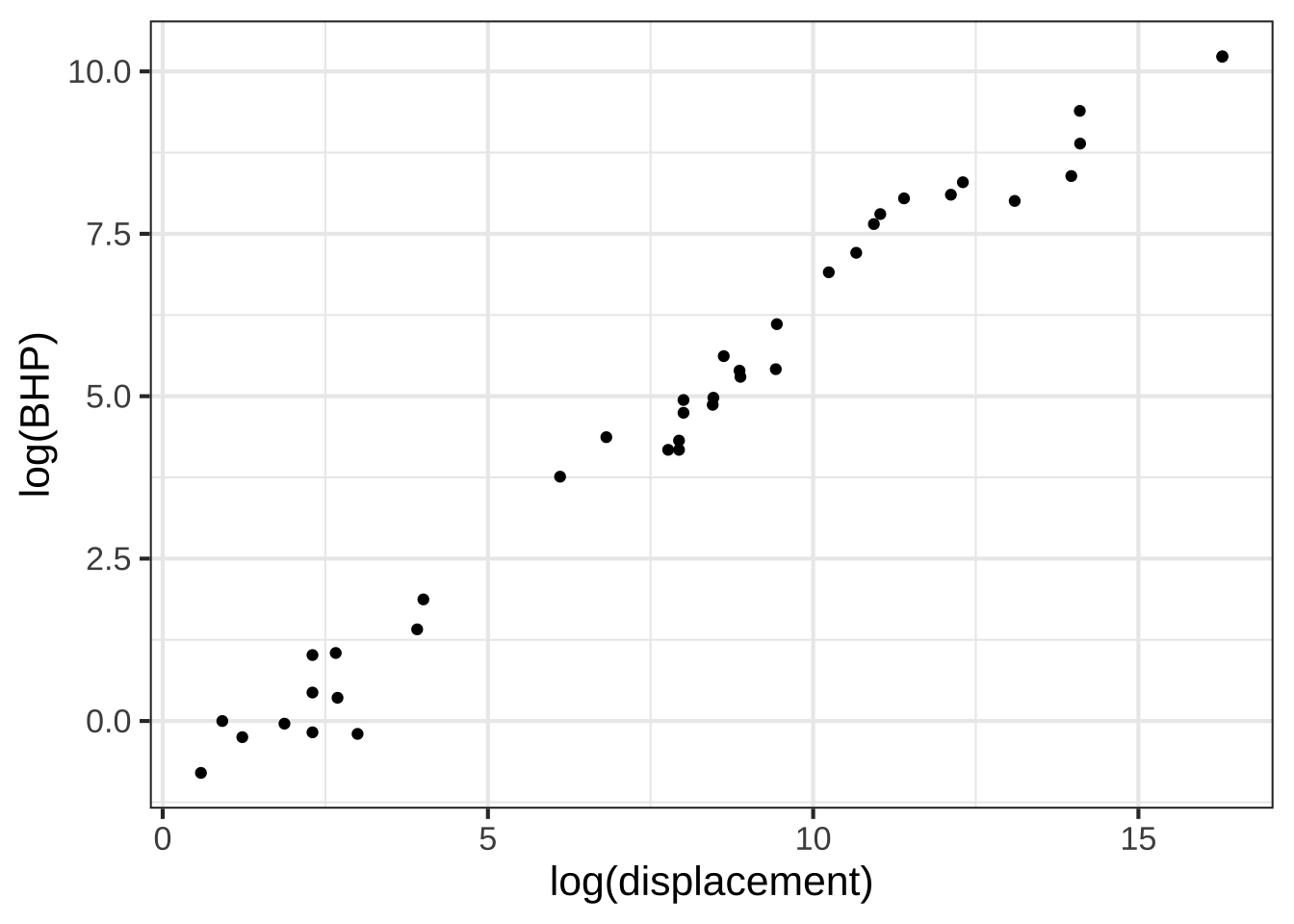

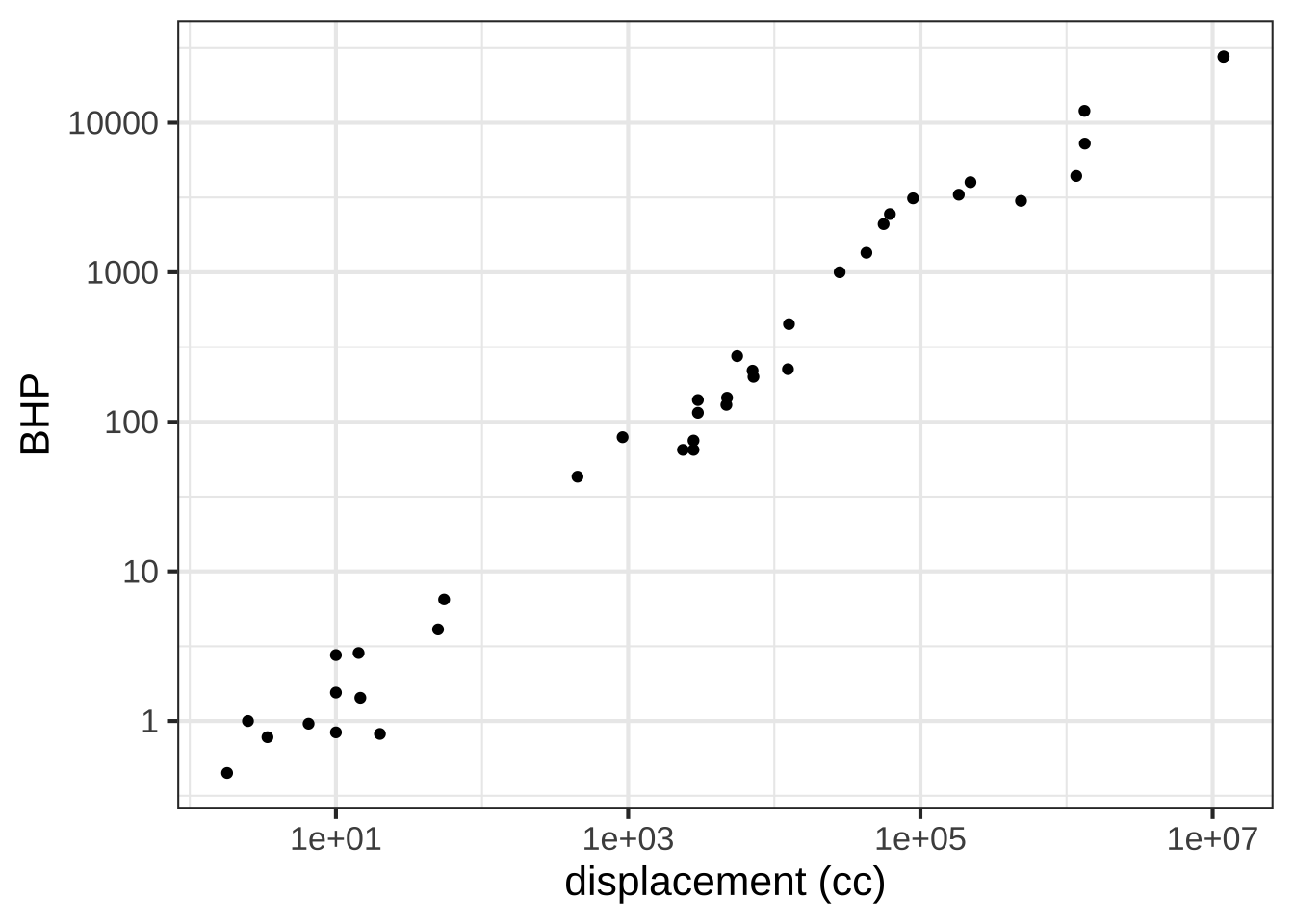

To illustrate, Figure 15.7(left) is a log-log graph of horsepower versus displacement for the internal combustion engines reported in the Engines data frame. The points are admirably evenly spaced, but it is hard to translate the scales to the physical quantity. The right panel in Figure 15.7 shows exactly the same data points, but now the scales are labeled using the original quantity.

gf_point(log(BHP) ~ log(displacement), data = Engines)

gf_point(BHP ~ displacement, data = Engines) %>%

gf_refine(scale_y_log10(), scale_x_log10())

Figure 15.7: Horsepower versus displacement from the Engines data.frame plotted with log-log scales.

The tick marks on the vertical axis in the left pane are labeled for 0, 2.5, 5.0, 7.5, and 10. That doesn’t refer to the horsepower itself, but to the logarithm of the horsepower. The right pane has tick labels that are in horsepower at positions marked 1, 10, 100, 1000, 10000.

Such even splits of a 0-100 scale are not appropriate for logarithmic scales. One reason is that 0 cannot be on a logarithmic scale in the first place since \(\log(0) = -\infty\).

Another reason is that 1, 3, and 10 are pretty close to an even split of a logarithmic scale running from 1 to 10. It’s something like this:

1 2 3 5 10 x

|----------------------------------------------------|

0 1/3 1/2 7/10 1 log(x)It’s nice to have the labels show round numbers. It’s also nice for them to be evenly spaced along the axis. The 1-2-3-5-10 convention is a good compromise; almost evenly separated in space yet showing simple round numbers.

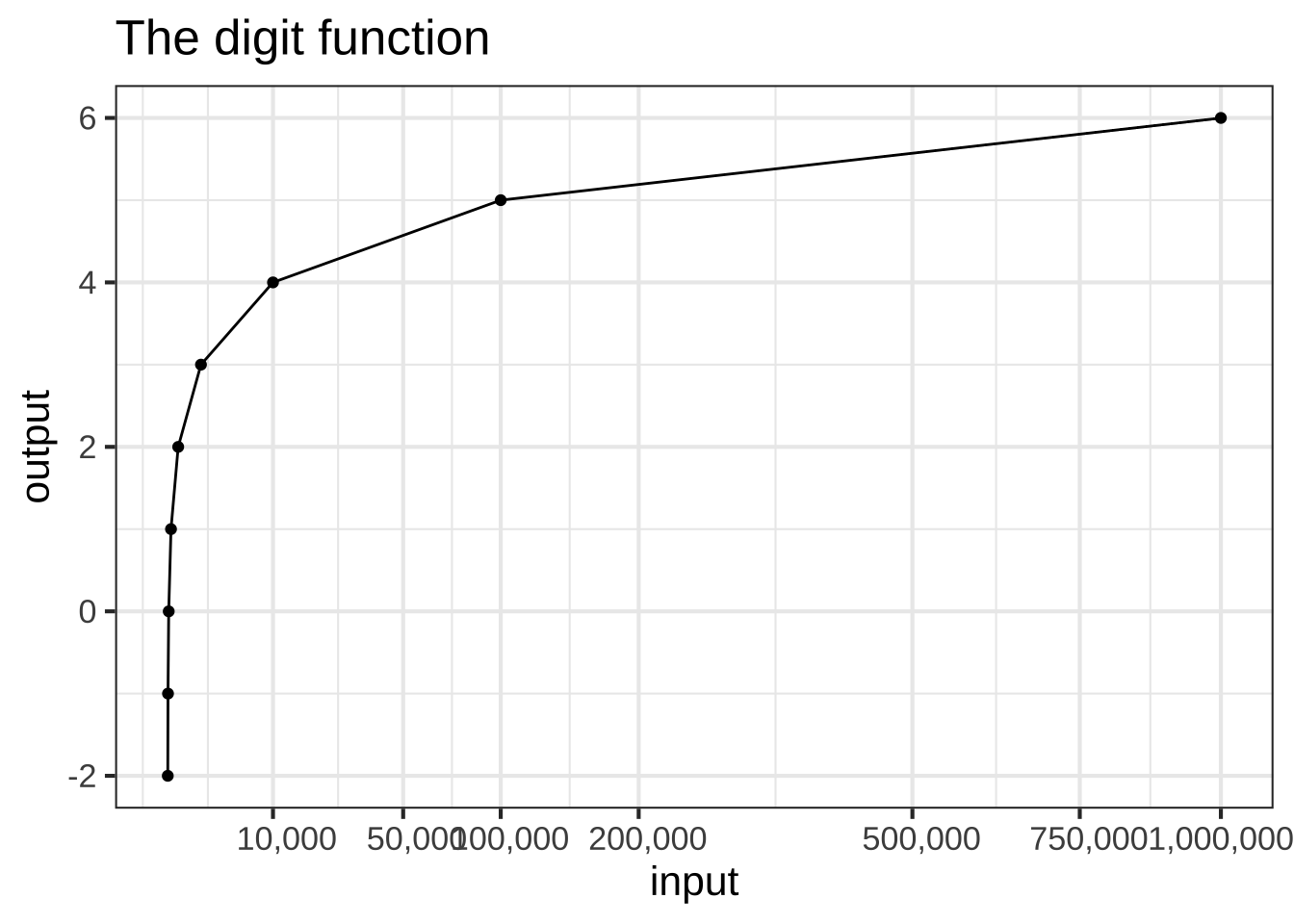

15.7 Fractional digits (optional)

So far, we have the digit() function in a tabular form: ![]() 1650

1650

| input | output |

|---|---|

| \(\vdots\) | \(\vdots\) |

| 0.01 | -2 |

| 0.1 | -1 |

| 1 | 0 |

| 10 | 1 |

| 100 | 2 |

| 1000 | 3 |

| 10,000 | 4 |

| 100,000 | 5 |

| 1,000,000 | 6 |

| \(\vdots\) | \(\vdots\) |

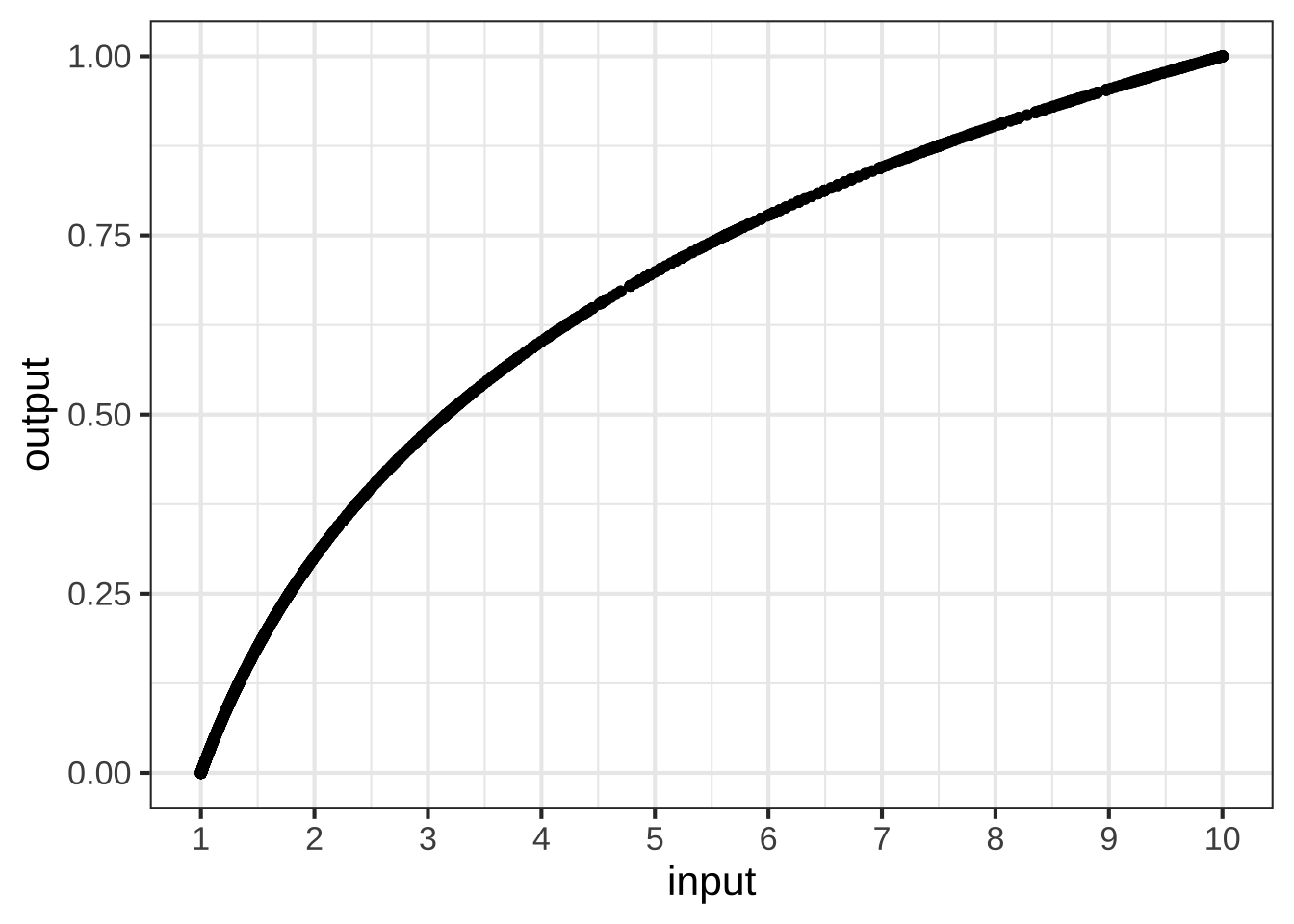

Here’s the point-plot presentation of the table:

Figure 15.8: Connecting the data points for the digit function to make a continuous function.

We’ve imagined digits() to be a continuous function so we’ve connected the gaps with a straight line. Now we have a function that has an output for any input between 0.01 and 1,000,000, for instance, 500,000.

The angles between consecutive line segments give the function plotted in Figure 15.8 an unnatural look. Still, it is a continuous function with an output for any input even if that input is not listed in the table.

Starting around 1600, two (now famous) mathematicians, John Napier (1550-1617) and Henry Briggs (1561-1630) had an idea for filling in gaps in the table. They saw the pattern that for any of the numbers \(a\) and \(b\) in the input column of the table \[ \text{digit}(a \times b) = \text{digit}(a) + \text{digit}(b)\] This is true even when \(a=b\). For instance, digit(10)=1 and digit(10\(\times\) 10) = 2.

Consider the question what is digit(316.2278)? That seems a odd question unless you realize that \(316.2278 \times 316.2278 = 100,000\). Since digit(100000) = 5, it must be that digit(316.2278) = 5/2.

Another question: what is digit(17.7828)? This seems crazy, until you notice that \(17.7828^2 = 316.2278\). So digit(17.78279) = 5/4.

For a couple of thousand years mathematicians have known how to compute the square root of any number to a high precision. By taking square roots and dividing by two, it’s easy to fill in more rows in the digit()-function table. You get even more rows by noticing other simple patterns like \[\text{digit}(a/10) = \text{digit}(a) -1 \ \ \text{and} \ \ \ \text{digit}(10 a) = \text{digit}(a) + 1\]

Here are some additional rows in the table

| input | output | Why? |

|---|---|---|

| 316.2278 | 2.5 | From \(\sqrt{\strut100,000}\) |

| 17.17828 | 1.25 | From \(\sqrt{\strut 316.2278}\) |

| 4.21696 | 0.625 | From \(\sqrt{\strut 17.17828}\) |

| 31.62278 | 1.5 | From 316.2278/10 |

| 3.162279 | 0.5 | From 31.62278/10 |

You can play this game for weeks. We asked the computer to play the game for about half a second and expanded the original digit() table to 7975 rows.

Figure 15.9 plots the expanded digits() function table.

Figure 15.9: The digit function with more entries

Now we have a smooth function that plays by the digit rules of multiplication.

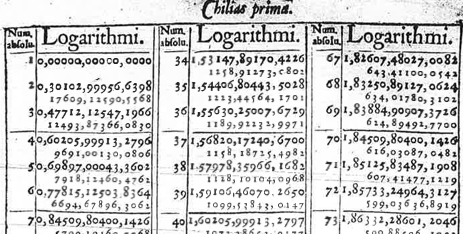

Henry Briggs and his assistants did a similar calculation by hand. Their work was published in 1617 as a table.

Figure 15.10: Part of the first page of Henry Briggs table of logarithms

The table was called the Chilias prima, Latin for “First group of one thousand.” True to its name, the table gives the output of digits() for the inputs 1, 2, 3, …, 998, 999, 1000. For instance, as you can see from the top row of the right-most column, digits(67) = 1.82607480270082.

In everyday speech, 67 has two digits. The authors of Chilias prima sensibly didn’t use the name “digit()” for the function. They chose something more abstract: “logarithm().” Nowadays, this function is named \(\log_{10}()\). In R, the function is called log10(). ![]() 1660

1660

log10(67)## [1] 1.826075Our main use for \(\log_{10}()\) (in R: log10()) will be to count digits in order to quickly compare the magnitude of numbers. The difference digits(\(x\)) - digits(\(y\)) tells how many factors of 10 separate the magnitude of the \(x\) and \(y\).

Another important logarithmic/digit-counting function is \(\log_2()\), written log2() in R. This counts how many *binary digits are in a number. For us, \(\log_2(x)\) tells how many times we need to double, starting at 1, in order to reach \(x\). For instance, \(\log_2(67) = 6.06609\), which indicates that \(67 = 2\times 2 \times 2 \times 2 \times 2 \times 2 \times 2^{0.06609}\)

\(\log_2(x)\) and \(\log_{10}(x)\) are proportional to one another. One way to think of this is that they both count “digits” but report the results in different units, much as you might report a temperature in either Celsius or Fahrenheit. For \(\log_2(x)\) the units of output are in bits. For \(\log_{10}(x)\) the output is in decades.

A third version of the logarithm function is called the natural logarithm and is denoted \(\ln()\) in math notation and simply log() in R. We’ll need additional calculus concepts before we can understand what justifies calling \(\ln()\) “natural.”

15.8 Exercises

Exercise 15.2:  ILXEG

ILXEG

Open a SANDBOX and make the following log-log plot of horsepower (BHP) versus displacement (in cc, cubic-centimeters) of the internal combustion engines listed in the Engines data frame.

gf_point(BHP ~ displacement, data = Engines) %>%

gf_refine(scale_x_log10(), scale_y_log10()) %>%

gf_labs(x = "displacement (cc)")

In the plot, you’ll see that the vertical axis has labels at 1, 10, 100, 1000, 10000. These numbers are hardly spaced evenly when plotted on a linear scale, but on the log scale they are evenly spaced. Since there is a factor of ten between consecutive labels, the interval between the labels is called a decade. On the horizontal axis, the labels are at 10, 1000, 100,000, and 10,000,000. Each of those intervals spans a factor of one hundred. For instance, from 1000 is one-hundred times 10, 100,000 is one-hundred times 1000, and so on. An interval of size 100 is said to span two decades, not 20 years but a factor of 100.

Based on the log-log plot, answer these questions.

How many engines have a displacement of 1 liter or less? ( ) none ( ) 7 (x ) 14 ( ) 25 [[Right. It's the $$10^3$$ tick that marks 1 liter, since 1 liter is 1000 cc.]]

Using the log-log plot, how many decades of BHP are spanned by the data? ( ) 4 (x ) 5 ( ) 100 [[Correct.]]

Exercise 15.4:  j3xe

j3xe

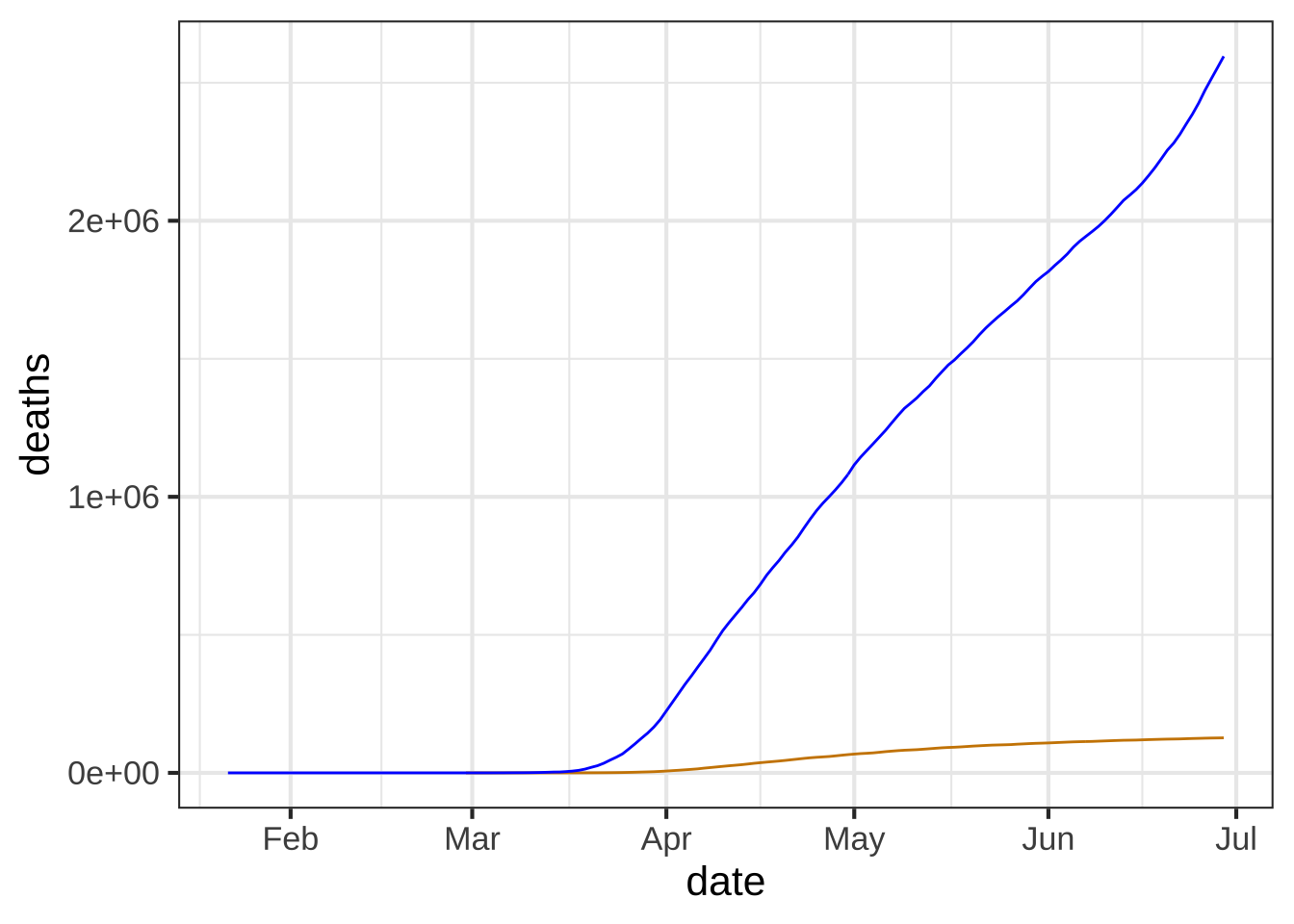

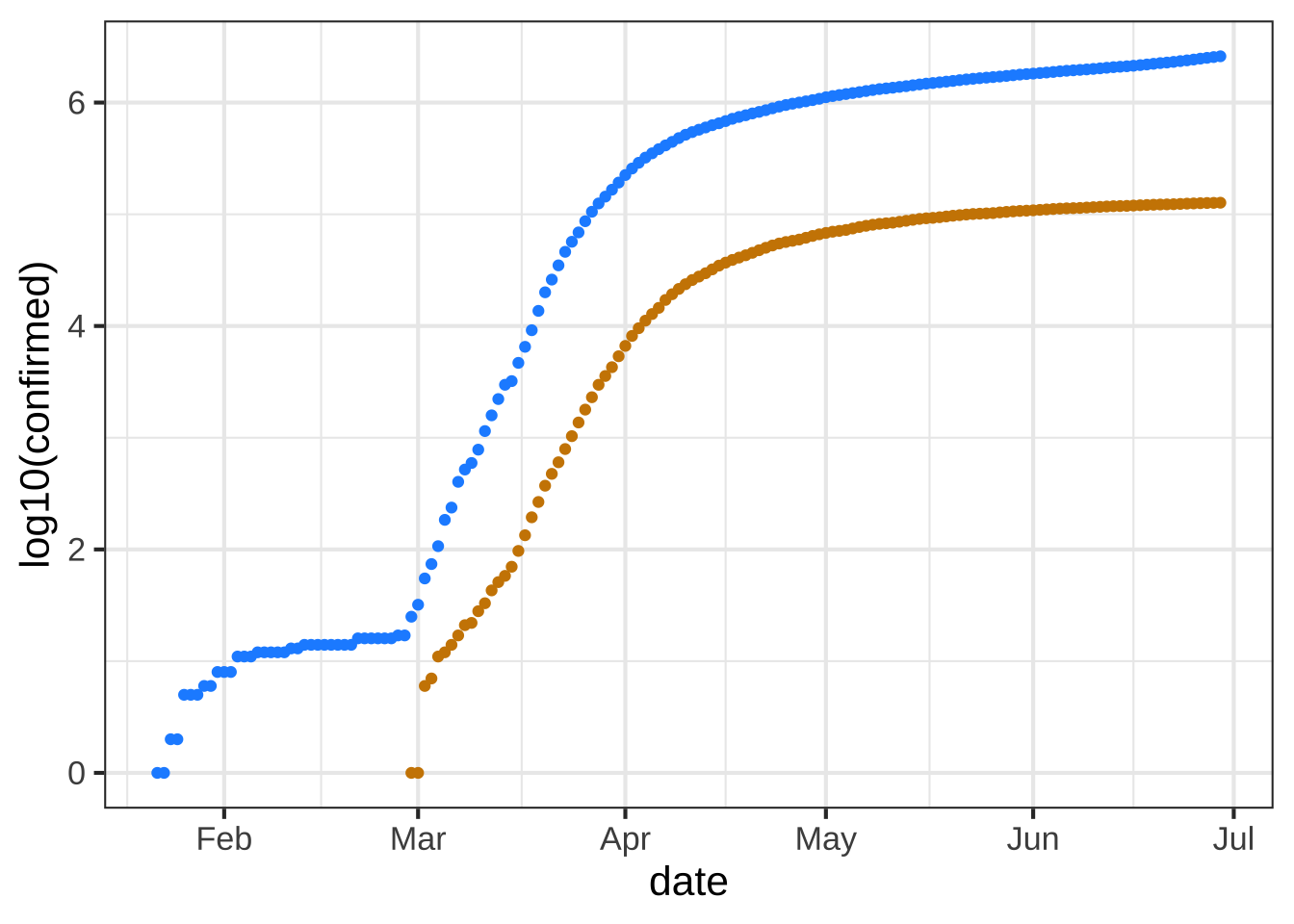

You have likely heard the phrase “exponential growth” used to describe the COVID-19 pandemic. Let’s explore this idea using actual data.

The COVID-19 Data Hub is a collaborative effort of universities, government agencies, and non-governmental organizaions (NGOs) to provide up-to-date information about the pandemic. We’re going to use the data about the US at the whole-country level. (There’s also data at state and county levels. Documentation is available via the link above.)

Perhaps the simplest display is to show the number of cumulative cases (the confirmed variable) and deaths as a function of time. We’ll focus on the data up to June 30, 2020.

The plot shows confirmed cases in blue and deaths in tan.

gf_line(deaths ~ date,

data = Covid_US %>% filter(date < as.Date("2020-06-30")),

color = "orange3") %>%

gf_line(confirmed ~ date, color = "blue") :::

:::As of mid June, 2020 about how many confirmed cases were there? (Note that the labeled tick marks refer to the beginning of the month, so the point labeled `Feb` is February 1.) ( ) about 50,000 ( ) about 200,000 ( ) about 500,000 ( ) about 1,000,000 (x ) about 2,000,000 ( ) about 5,000,000 [[Right!]]

Here’s the same graphic as above, but taking the logarithm (base 10) of the number of cases (that is, confirmed) and of the number of deaths. Since we’re taking the logarithm of only the y-variable, this is called a “semi-log” plot.

gf_point(log10(confirmed) ~ date,

data = Covid_US %>% filter(date < as.Date("2020-06-30")),

color = "dodgerblue") %>%

gf_point(log10(deaths) ~ date, color = "orange3")

Up through the beginning of March in the US, it is thought that most US cases were in people travelling into the US from hot spots such as China and Italy and the UK, as opposed to contagion between people within the US. (Such contagion is called “community spread.”) So let’s look at the data representing community spread, from the start of March onward.

Exponential growth appears as a straight-line on a semi-log plot. Obviously, the overall pattern of the curves is not a straight line. The explanation for this is that the exponential growth rate changes over time, perhaps due to public health measures (like business closures, mask mandates, etc.)

The first (official) US death from Covid-19 was recorded was recorded on Feb. 29, 2020. Five more deaths occurred two days later, bringing the cumulative number to 6.

The tan data points for Feb 29/March 1 show up at zero on the vertical scale for the semi-log plot. The tan data point for March 2 is at around 2 on the vertical scale. Is this consistent with the facts stated above?

( ) No. The data contradict the facts.

(x ) Yes. The vertical scale is in log (base 10) units, so 0 corresponds to 1 death, since $$\log_{10} 1 = 0$$.

( ) No. The vertical scale doesn't mean anything.

[[Nice!]]

One of the purposes of making a semi-log plot is to enable you to compare very large numbers with very small numbers on the same graph. For instance, in the semi-log plot, you can easily see when the first death occurred, a fact that is invisible in the plot of the raw counts (the first plot in this exercise).

Another feature of semi-log plots is that they preserve proportionality. Look at the linear plot of raw counts and note that the curve for the number of deaths is much shallower than the curve for the number of (confirmed) cases. Yet on the semi-log plot, the two curves are practically parallel.

On a semi-log plot, the arithmetic difference between the two curves tells you what the proportion is between those curves. The parallel curves mean that the proportion is practically constant. Calculate what the proportion between deaths and cases was in the month of May. Here’s a mathematical hint: \(\log_{10} \frac{a}{b} == \log_{10} a - \log_{10} b\). We are interested in \(\frac{a}{b}\).

What is the proportion of deaths to cases during the month of May?

( ) about 1%

( ) about 2%

(x ) about 5%

( ) about 25%

( ) about 75%

[[On the semi-log plot, the deaths curve is about 1.2 log10 units lower than the cases curve. $$10^{-1.2} = 0.063 = 6.3\%$$) separates the two curves.]]

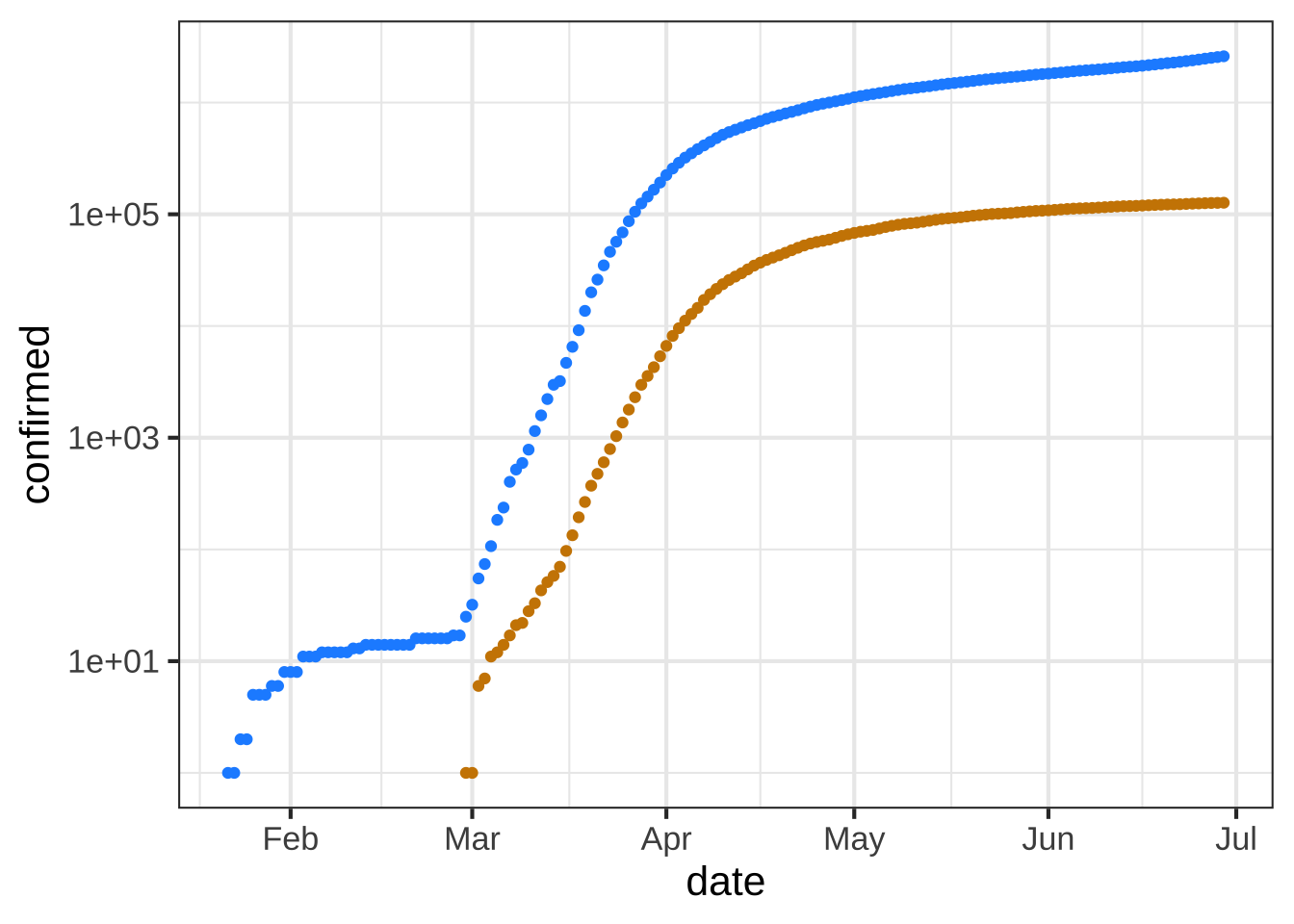

In many applications, people use semi-log plots to see whether a pattern is exponential or to compare very small and very large numbers. Often, people find it easier if the vertical scale is written in the original units rather than the log units. To accomplish both, the vertical scale can be ruled with raw units spaced logarithmically, like this:

gf_point(confirmed ~ date,

data = Covid_US %>% filter(date < as.Date("2020-06-30")),

color = "dodgerblue") %>%

gf_point(deaths ~ date, color = "orange3") %>%

gf_refine(scale_y_log10())

The labels on the vertical axis show the raw numbers, while the position shows the logarithm of those numbers.

The next question has to do with the meaning of the interval between grid lines on the vertical axis. Note that on the horizontal axis, the spacing between adjacent grid lines is half a month.

What is the numerical spacing (in terms of raw counts) between adjacent grid lines on the vertical axis? (Note: Two numbers are different by a "factor of 10" when one number is 10 times the other." Similarly, "a factor of 100" means that one number is 100 times the other. ( ) 10 cases ( ) 100 cases (x ) A factor of 10. ( ) A factor of 100. [[Right. Every time you move up by one grid line, the raw number increases ten-fold, so 10, 100, 1000, 10,000, and so on. The phrase `a factor of 10` means to multiply by 10, not to add 10.]]

Exercise 15.5:  RWESX

RWESX

Open a sandbox to carry out some calculations with Boyle’s data. To see how the data frame is organized, use the head(Boyle) and names(Boyle) commands.

The scaffolding here contains a command for plotting out Boyle’s data. It also includes a command, gf_lm() that will add a graph of the best straight-line model to the plotted points. Recall that the # symbol turns what follows on the line into a comment, which is ignored by R. By removing the # selectively you can turn on the display of log axes.

gf_point(pressure ~ volume, data = Boyle) %>%

gf_refine(

# scale_x_log10(),

# scale_y_log10()

) %>%

gf_lm()

In a sandbox, plot pressure versus volume using linear, semi-log, and log-log axes. Based on the plot, and the straight-line function drawn, which of these is a good model of the relationship between pressure and volume? ( ) linear ( ) exponential (x ) power-law [[Excellent!]]

Exercise 15.6:  gmZiWh

gmZiWh

Recall Robert Boyle’s data on pressure and volume of a fixed mass of gas held at constant pressure. In Section 15.5 of the text you saw a graphical analysis that enabled you to identify Boyle’s Law with a power-law relationship between pressure and volume: \[P(V) = a V^n\] On log-log axes, a power-law relationship shows up as a straight-line graphically.

Taking logarithms translates the relationship to a straight-line function:\[\text{lnP(lnV)} = \ln(a) + n\, \ln(V)\] To find the parameter \(n\), you can fit the model to the data. This R command will do the job:

fitModel(log(pressure) ~ log(a) + n*log(volume), data = Boyle) %>%

coefficients()Open a SANDBOX and run the model-fitting command. Then, interpret the parameters.

What is the slope produced by `fitModel()` when fitting a power law model? ( ) Roughly -1 (x ) Almost exactly -1 ( ) About -1.5 ( ) Slope $$> 0$$ [[Nice!]]

According to the appropriate model that you found in (A) and (B), interpret the function you found relating pressure and volume.

As the volume becomes very large, what happens to the pressure? (x ) Pressure becomes very small. ( ) Pressure stays constant ( ) Pressure also becomes large. ( ) None of the above [[Excellent!]]

Return to your use of fitModel() to find the slope of the straight-line fit to the appropriately log-transformed model. When you carried out the log transformation, you used the so-called “natural logarithm” with expressions like log(pressure). Alternatively, you could have used the log base-10 or the log base-2, with expressions like log(pressure, base = 10) or log(volume, base = 2). Whichever you use, you should use the same base for all the logarithmic transformations when finding the straight-line parameters.

**(D)** Does the **slope** of the straight line found by `fitModel()` depend on which base is used? (x ) No ( ) Yes ( ) There's no way to tell. [[Excellent!]]

**(E)** Does the **intercept** of the straight line found by `fitModel()` depend on which base is used?

(x ) Yes

( ) No

[[Good. But this will come out in the wash when you calculate the parameter $$C$$ in $$C x^b$$, since $$C$$ will be either $$2^\text{intercept}$$ or $$10^\text{intercept}$$ or $$e^\text{intercept}$$ depending on the base log you use.]]

Exercise 15.7:  EWLCI

EWLCI

Here is a plot of the power output (BHP) versus displacement (in cc) of 39 internal combustion engines.

gf_point(BHP ~ displacement, data = Engines) %>%

gf_lims(y = c(0, 30000))

Your study partner claims that the smallest engine in the data has a displacement of 2000 cc (that is, 2.0 liters) and 100 horsepower. Based only on the graph, is this claim plausible? (x ) Yes, because 2000 cc and 100 hp would look like (0, 0) on the scale of this graph. ( ) Yes, because that size engine is typical for a small car. ( ) No, the smallest engine is close to 0 cc. ( ) No, my study partner is always wrong. [[Good.]]

Semi-log scales

The next command will make a graph of the same engine data as before, but with a log scale on the horizontal axis. The vertical axis is still linear.

gf_point(BHP ~ displacement, data = Engines) %>%

gf_refine(scale_x_log10())

Using just the graph, answer this question: The engines range over how many decades of displacement? (Remember, a decade is a factor of 10.) (x ) 7 decades ( ) Can't tell ( ) $$10^7$$ decades ( ) About 3.5 decades [[Nice!]]

Exercise 15.8:  TLEXE

TLEXE

Consider the axis scales shown above. Which kind of scale is the horizontal axis? (x ) linear ( ) logarithmic ( ) semi-logarithmic ( ) log-log [[You can see this because a given length along the axis corresponds to the same arithmetic difference regardless of where you are on the axis. the distance between 0 and 50 is exactly the same as the difference between 50 and 100, or the distance between 150 and 200.]]

Which kind of scale is the vertical axis? ( ) linear (x ) logarithmic ( ) semi-logarithmic ( ) log-log [[Nice!]]

Given your answers to the previous two questions, what kind of plot would be made in the frame being displayed at the top of this question? (x ) semi-log ( ) log-log ( ) linear-linear [[Nice!]]

Exercise 15.9:  SELIX

SELIX

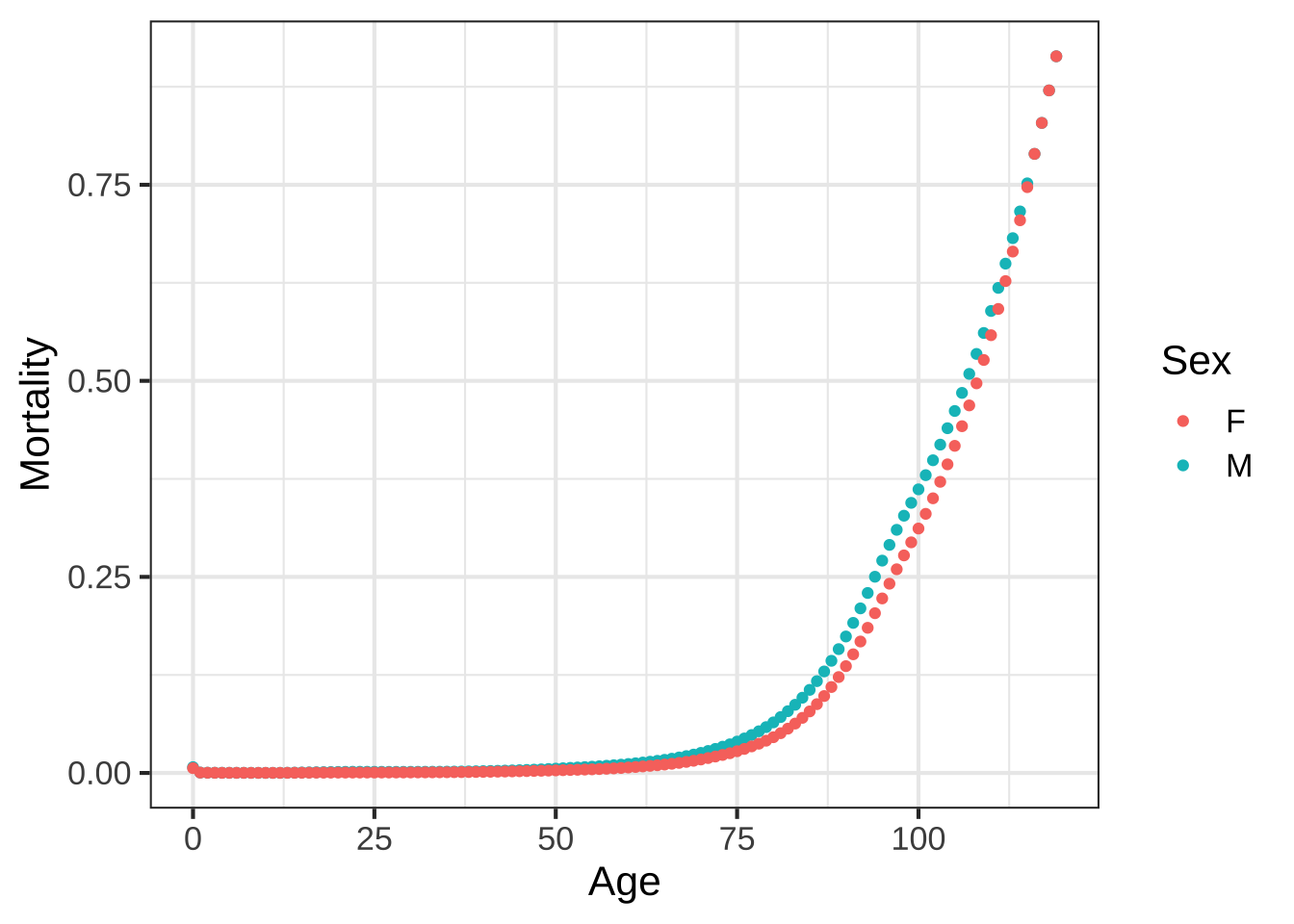

The data frame SSA_2007 comes from the US Social Security Administration and contains mortality in the US as a function of age and sex. (“Mortality” refers to the probability of dying in the next year.)

Open a sandbox and copy in the following scaffolding to see the organization of the data.

data(SSA_2007)

SSA_2007Once you understand the data organization, delete the old scaffolding and insert this:

data(SSA_2007)

gf_point(Mortality ~ Age, color = ~ Sex, data = SSA_2007) There is a slight mistake in the way the command is written,

so an error message will be generated. To figure out what’s wrong, read the error message, check the variable names, and so on until you successfully make the plot.

There is a slight mistake in the way the command is written,

so an error message will be generated. To figure out what’s wrong, read the error message, check the variable names, and so on until you successfully make the plot.

What was the mistake in the plotting command in the above code box? (x ) Variable names didn't match the ones in the data. ( ) The *tilde* in the argument `color = ~ sex` ( ) The data frame name is spelled wrong. ( ) There is no function `gf_point()`. [[Excellent!]]

Essay question tmp-4: What’s the obvious (simple) message of the above plot?

Now you are going to use semi-log and log-log scales to look at the mortality data again. To do this, you will use the gf_refine() function.

gf_point( __and_so_on__) %>%

gf_refine(

scale_y_log10(),

scale_x_log10()

)Fill in the __and_so_on__ details correctly and run the command in your sandbox.

As written, both vertical and horizontal axes will be on log scales. This may not be what you want in the end.

Arrange the plotting command to make a semi-log plot of mortality versus age. Interpret the plot to answer the following questions. Note that labels such as those along the vertical axis are often called “decade labels.”

The level of mortality in year 0 of life is how much greater than in year 1 and after? ( ) About twice as large. ( ) About five times as large (x ) About 10 times as large ( ) About 100 times as large [[Nice!]]

Near age 20, the mortality of males is how much compared to females? ( ) Less than twice as large. (x ) A bit more than three times as large ( ) About 8 times as large ( ) About 12 times as large [[Correct.]]

Between the ages of about 40 and 80, how does mortality change with age? ( ) It stays about the same. ( ) It increases as a straight line. (x ) It increases exponentially. ( ) It increases, then decreases, then increases again. [[Correct.]]

Remake the plot of mortality vs age once again, but this time put it on log-log axes. The sign of a power-law relationship is that it shows up as a straight line on log-log axes.

Between the ages of about 40 and 80 is the increase in mortality better modeled by an exponential or a power-law process? ( ) Power-law (x ) Exponential ( ) No reason to prefer one or the other. [[Right. The graph is much closer to a straight line on semi-log scales than on log-log scales.]]

Exercise 15.10:  PeQJCA

PeQJCA

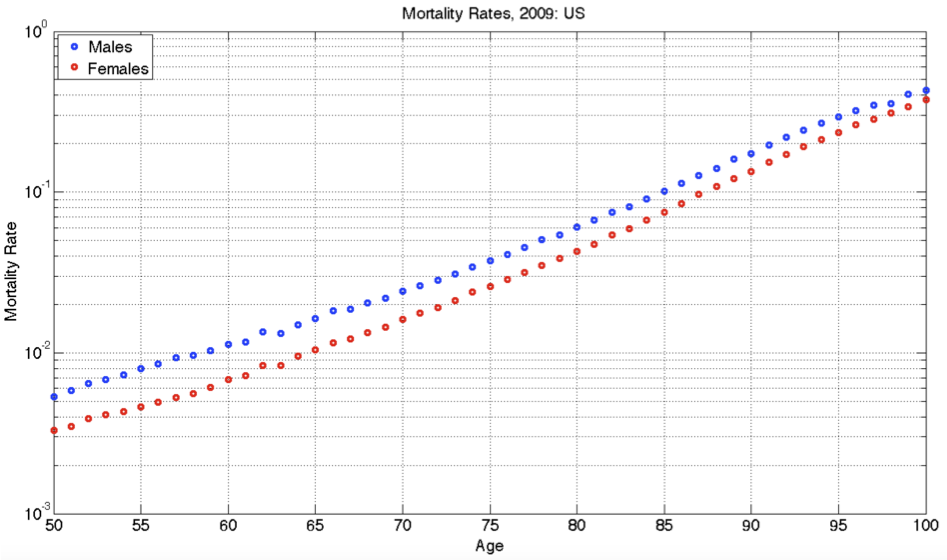

The graph comes from an online guide, “Retirement Income Analysis with Scenario Matrices,” published in 2019 by William F. Sharpe, winner of the Nobel Prize in economices in 1990. The guide is intended to be of interest to those planning for retirement income who also happen to have a sophisticated understanding of mathematics and computing. You may or may not be in the former group, but it is hoped that, as you follow this course, you are becoming a member of the later group.

Figure 15.11: Mortality rates for people aged 50+ in the US in 2009. The mortality rate is the probability of dying in one year. It’s shown here as a function of age and sex.

As you can see, the graph shows nearly parallel straight-line functions for both women and men, with women somewhat less likely than men to die at any given age.

What is the format of the graphics axes? ( ) linear (x ) semi-log ( ) log-log [[Excellent!]]

If the age axis had been logged, which of the following would be true? (x ) The interval from 50 to 55 would be graphically **larger** than the interval from 95 to 100. ( ) The interval from 50 to 55 would be graphically **smaller** than the interval from 95 to 100. ( ) The two intervals would have the same graphical length. [[Excellent!]]

At age **100**, which of these is closest to the mortality rate for men?

( ) About 10%

( ) About 20%.

(x ) About 40%.

( ) About 60%

[[40% is the *third* tick mark above the $$10^{-1}$$ mark.]]

At age **65**, women have a lower probability of dying than men. How much lower, proportionately? ( ) About 5% lower than men ( ) About 15% lower than men. (x ) About 40% lower than men. [[Women have a mortality rate of 1% while the rate for men is just under 2%.]]

A rough estimate for the absolute limit of the human lifespan can be made by extrapolating the lines out to a mortality of 100%. This extrapolation would be statistically uncertain, and the pattern might change in the future either up or down, but let's ignore that for now and simply extrapolate simply a line fitting the data from age 50 to 100. Which of these is the estimate made in that way for the absolute limit of the human lifespan? ( ) 105 years (x ) 110 years ( ) 120 years ( ) 130 years [[Right!]]

To judge from the graph, the function relating mortality to age is which of the following? ( ) A straight-line function with positive slope. ( ) A power-law with a positive exponent. (x ) An exponentially increasing function with a horizontal asymptote at mortality = 0. ( ) An exponentially decaying function, with a horizontal asymptote at mortality = 100%. [[A straight-line on semi-log axes---what we have here---is diagnostic of an exponential function. The function value would go to zero for age = $$- \infty$$, but that mathematical fact is hardly relevant to human lifespan.]]